TL;DR: Text Prompt -> LLM -> Intermediate Representation (such as an image layout) -> Stable Diffusion -> Image.

Recent advances in text-to-image generation with diffusion models have yielded remarkable results, synthesizing highly realistic and diverse images. However, despite their impressive capabilities, diffusion models such as Stable Diffusion often struggle to follow prompts accurately when spatial or common sense reasoning is required.

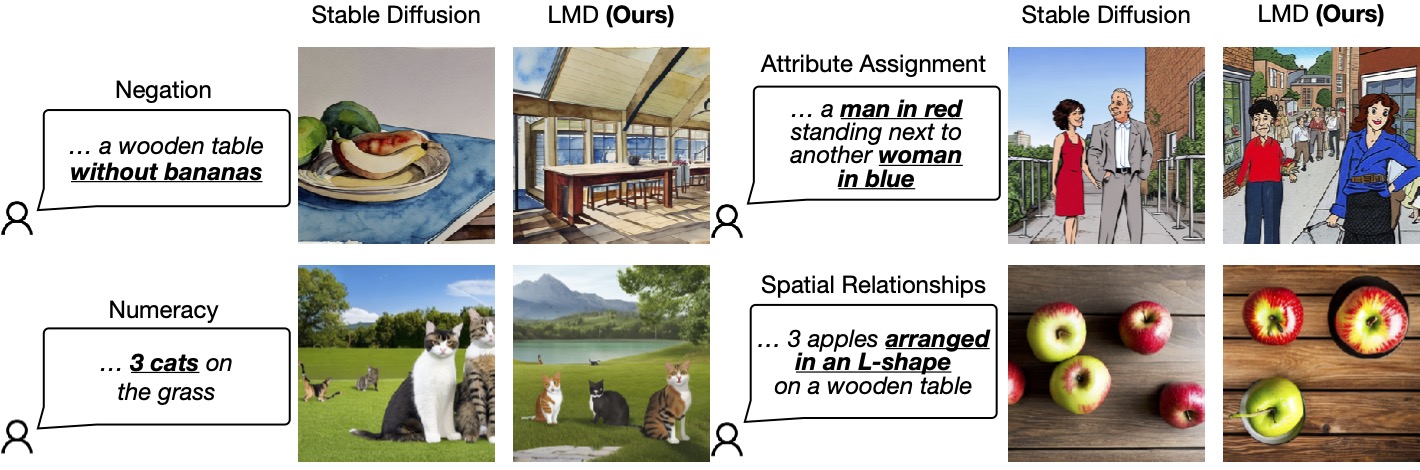

Figure 1 lists four scenarios in which Stable Diffusion fails to generate images that accurately correspond to the given prompts: negation, numeracy, attribute assignment, and spatial relationships. In contrast, our method, LLM-grounded Diffusion (LMD), delivers much better prompt understanding in text-to-image generation in these scenarios.

Figure 1: LLM-grounded Diffusion enhances the prompt understanding ability of text-to-image diffusion models.

One possible solution to this issue is, of course, to gather a vast multimodal dataset with intricate captions and train a large diffusion model with a large language encoder. This approach comes with significant costs: training both large language models (LLMs) and diffusion models is time-consuming and expensive.

Our solution

To solve this problem efficiently and at minimal cost (i.e., with no training), we instead equip diffusion models with enhanced spatial and common sense reasoning by using off-the-shelf frozen LLMs in a novel two-stage generation process.

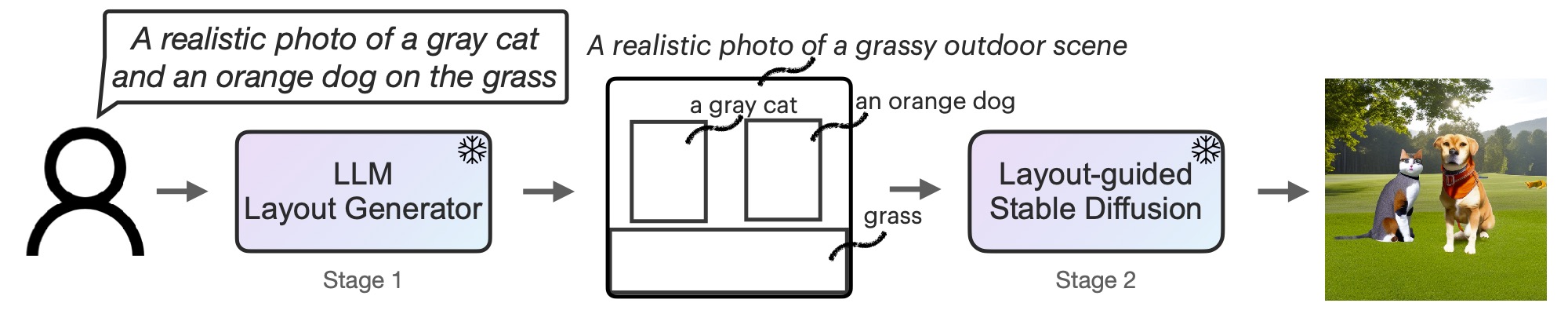

First, we adapt an LLM to be a text-guided layout generator through in-context learning. Given an image prompt, the LLM outputs a scene layout in the form of bounding boxes, along with a corresponding description for each box. Second, we steer a diffusion model with a novel controller to generate images conditioned on the layout. Both stages use frozen pretrained models without any optimization of LLM or diffusion model parameters. Readers are invited to read the paper on arXiv for more details.

Figure 2: LMD is a text-to-image generative model with a novel two-stage generation process: a text-to-layout generator (an LLM with in-context learning) and a novel layout-guided Stable Diffusion. Both stages are training-free.
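To make the hand-off between the two stages concrete, here is a minimal Python sketch of turning an LLM's text response into structured bounding boxes that a layout-guided diffusion stage could consume. The response format (an `Objects:` line holding a list of caption and `[x, y, width, height]` pairs, plus a `Background prompt:` line), the `Box` class, and the `parse_layout` helper are all assumptions made for illustration; this post does not specify the exact format LMD uses.

```python
import ast
from dataclasses import dataclass


@dataclass
class Box:
    """One object in the scene layout (hypothetical structure)."""
    caption: str
    x: int
    y: int
    width: int
    height: int


def parse_layout(response: str) -> tuple[list[Box], str]:
    """Parse an assumed 'Objects: [...]' / 'Background prompt: ...' response."""
    objects_text = ""
    background = ""
    for line in response.splitlines():
        line = line.strip()
        if line.startswith("Objects:"):
            objects_text = line[len("Objects:"):].strip()
        elif line.startswith("Background prompt:"):
            background = line[len("Background prompt:"):].strip()
    if not objects_text:
        raise ValueError("no 'Objects:' line found in the LLM response")
    # literal_eval only accepts Python literals, so it is safe on model output.
    raw = ast.literal_eval(objects_text)
    boxes = [Box(caption, *coords) for caption, coords in raw]
    return boxes, background


# A made-up response, standing in for what a frozen LLM might return.
EXAMPLE_RESPONSE = """Objects: [('a gray cat', [60, 250, 150, 120]), ('an orange dog', [300, 240, 160, 140])]
Background prompt: A realistic photo of a grassy field"""

boxes, background = parse_layout(EXAMPLE_RESPONSE)
for box in boxes:
    print(box)
print(background)
```

In a full pipeline, the parsed boxes and background prompt would then be passed to the layout-guided generation stage, which is not sketched here.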

Additional features of LMD

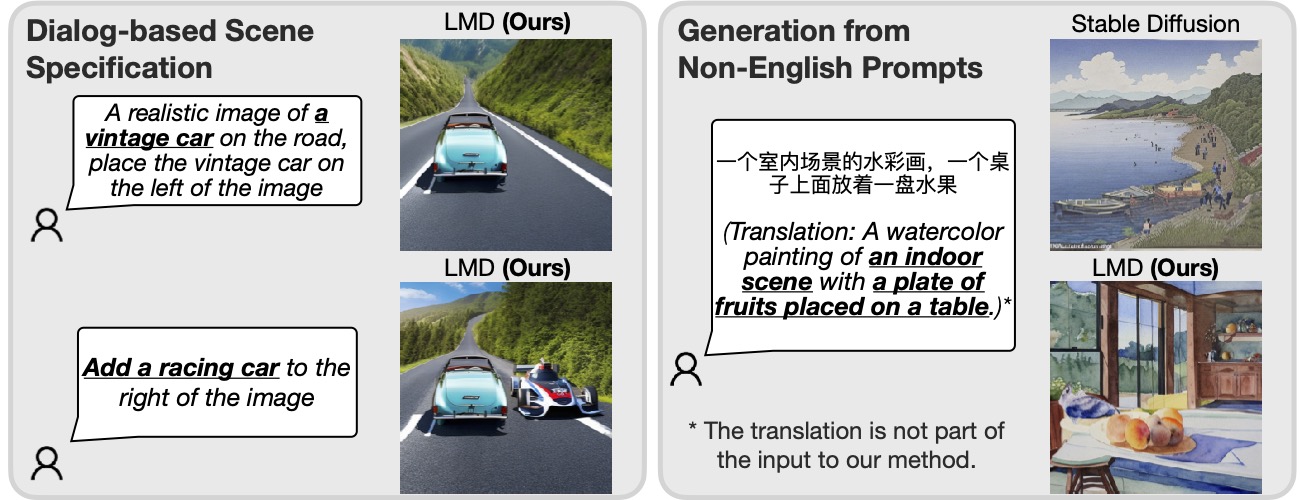

Additionally, LMD naturally allows dialogue-based multi-round scene specification, enabling additional clarifications and subsequent modifications for each prompt. Furthermore, LMD can handle prompts in a language that is not well supported by the underlying diffusion model.

Figure 3: By incorporating an LLM for prompt understanding, our method can perform dialogue-based scene specification and generation from prompts in a language (Chinese in the example above) that the underlying diffusion model does not support.

Given an LLM that supports multi-round dialogue (e.g., GPT-3.5 or GPT-4), LMD allows the user to provide additional information or clarifications by querying the LLM after the first layout has been generated in the dialogue, and then to generate images with the updated layout from the LLM's subsequent response. For example, the user can request to add an object to the scene or change the location or description of existing objects (left half of Figure 3).
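The multi-round workflow amounts to keeping the whole conversation and re-querying the LLM, so that each new layout reflects every earlier request. The sketch below illustrates that bookkeeping only. The `LayoutDialogue` class, the `system`/`user`/`assistant` role names, and the `fake_llm` stand-in are assumptions for illustration; a real setup would replace `fake_llm` with a call to a chat-capable LLM.

```python
from typing import Callable

Message = dict[str, str]


class LayoutDialogue:
    """Keeps the chat history so each layout request sees all earlier turns."""

    def __init__(self, llm: Callable[[list[Message]], str], instructions: str):
        self.llm = llm
        self.messages: list[Message] = [
            {"role": "system", "content": instructions}
        ]

    def ask(self, user_text: str) -> str:
        """Send one user turn and return the LLM's (updated) layout text."""
        self.messages.append({"role": "user", "content": user_text})
        reply = self.llm(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_llm(messages: list[Message]) -> str:
    """Hypothetical stand-in: reports how many user turns it has seen."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"layout after {user_turns} user turn(s)"


dialogue = LayoutDialogue(fake_llm, "You generate scene layouts.")
first = dialogue.ask("A cat on a sofa")
second = dialogue.ask("Add a lamp to the left of the sofa")
print(first)
print(second)
```

Each reply would be parsed into a layout and rendered again, so the user sees an updated image after every turn.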

Furthermore, by giving an example of a non-English prompt with a layout and background description in English during in-context learning, LMD accepts non-English prompts and generates layouts, with box descriptions and the background in English, for the subsequent layout-to-image generation. As shown in the right half of Figure 3, this allows generation from prompts in a language that the underlying diffusion models do not support.
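As a rough illustration of this in-context setup, the sketch below assembles a prompt in which a Chinese example query is paired with an English layout, followed by a new Chinese query for the LLM to complete. The `build_prompt` helper, the `Prompt:` / `Objects:` / `Background prompt:` labels, and the example texts are assumptions for illustration, not the prompt actually used by LMD.

```python
def build_prompt(instructions: str,
                 examples: list[tuple[str, str]],
                 query: str) -> str:
    """Join instructions, (query, layout) examples, and the new query."""
    parts = [instructions]
    for example_query, example_layout in examples:
        parts.append(f"Prompt: {example_query}\n{example_layout}")
    # The final entry is left open so the LLM completes it with a layout.
    parts.append(f"Prompt: {query}\n")
    return "\n\n".join(parts)


INSTRUCTIONS = (
    "Generate bounding boxes and a background prompt in English "
    "for the scene described in each prompt."
)

# Hypothetical in-context example: Chinese query, English layout.
# The query means "A gray cat on the grass".
EXAMPLES = [
    (
        "草地上有一只灰色的猫",
        "Objects: [('a gray cat', [180, 260, 160, 130])]\n"
        "Background prompt: A realistic photo of a grassy field",
    ),
]

# New query, meaning "Two dogs in the snow".
prompt = build_prompt(INSTRUCTIONS, EXAMPLES, "雪地里有两只狗")
print(prompt)
```

Because the example's layout is written in English, the LLM is nudged to answer new non-English queries with English box descriptions, which the diffusion model can then use.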

Visualizations

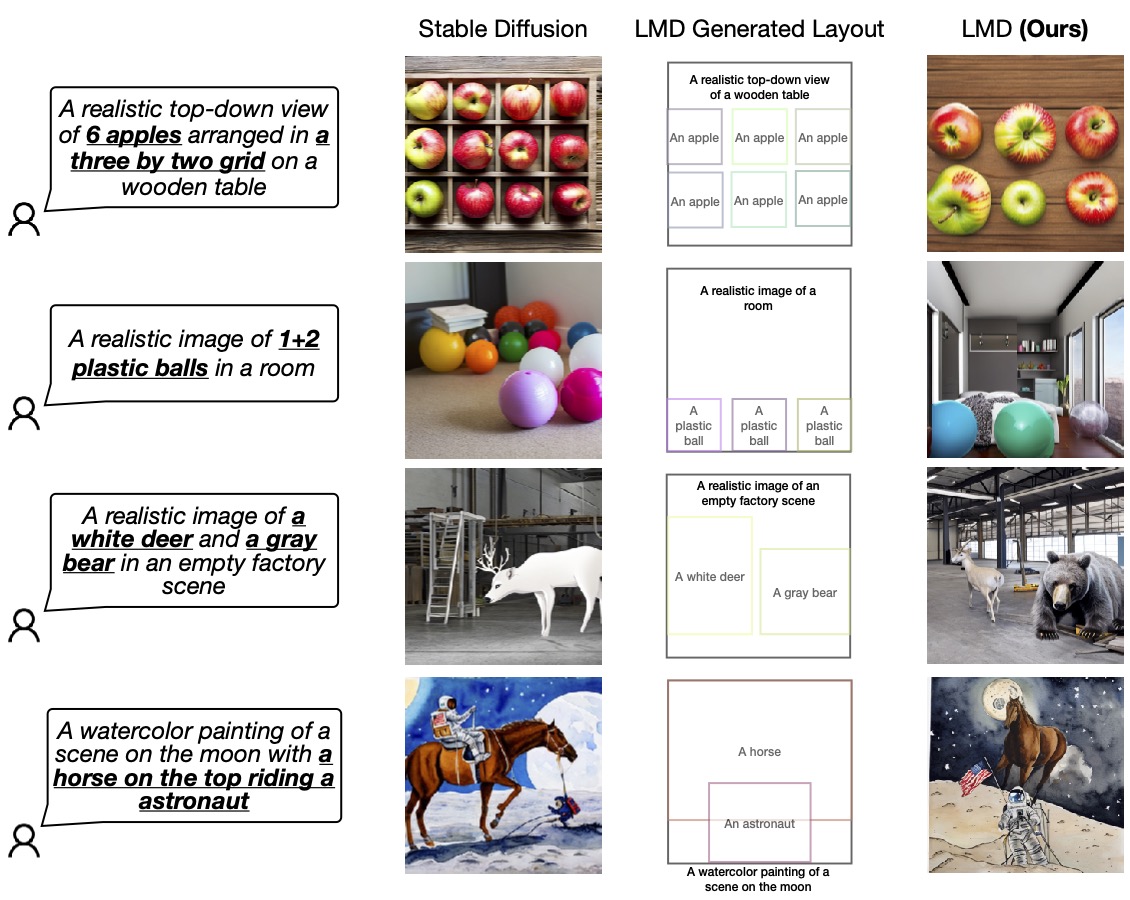

We validate the superiority of our design by comparing it with the base diffusion model (SD 2.1) that LMD uses under the hood. Readers are invited to see our work for further evaluation and comparisons.

Figure 4: LMD outperforms the base diffusion model in accurately generating images for prompts that require both language and spatial reasoning. LMD also enables counterfactual text-to-image generation that the base diffusion model cannot achieve (last row).

For more information on LLM-grounded diffusion (LMD), visit our website and read the paper on arXiv.

BibTeX

If LLM-grounded Diffusion inspires your work, please cite it:

    @article{lian2023llmgrounded,
        title={LLM-grounded Diffusion: Enhancing Prompt Understanding of Text-to-Image Diffusion Models with Large Language Models},
        author={Lian, Long and Li, Boyi and Yala, Adam and Darrell, Trevor},
        journal={arXiv preprint arXiv:2305.13655},
        year={2023}
    }
