As artificial intelligence continues to become more pervasive in our lives, it is important to consider the ethical implications of its use. While AI can enhance and revolutionize the way we live, work and interact with each other, it can also cause harm if not used or developed correctly.

People can be wrongly arrested when facial recognition systems used in law enforcement and the courts fail. People can die if self-driving cars fail to recognize them as pedestrians on the road. Things can go horribly wrong if we don’t think about the implications of how we use and develop these AI-powered tools.

This article is part of a series exploring what AI ethics means, its impact on society, and how businesses can move forward by acting responsibly with AI while reaping its benefits.

In this article, we will focus on what ethics means in the context of artificial intelligence.

What is AI ethics?

Ethics in the context of AI is about building and using AI responsibly. That means considering the following aspects:

Data etiquette

AI systems today depend on data; it is how machine learning-based AI systems learn. The underlying data you use can significantly affect the behavior of the model. The question is: are we using this data ethically? When thinking about data ethics, consider the following questions:

- Where does the data you’re looking to use come from? Is it from your company’s database, the Internet, or a public dataset?

- Was the data collected transparently and with respect for user privacy? Have users consented to the use of their data for model development?

- Is the data representative of the subgroups of interest?
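To make the representativeness question concrete, here is a minimal Python sketch that compares each subgroup’s share of a training set with its expected share in the population the model will serve. The records, the `age_band` attribute, the population shares, and the 0.5 tolerance are all hypothetical values chosen for illustration; a real check would use your own attributes and reference data.

```python
from collections import Counter


def subgroup_shares(records, attribute):
    """Return each subgroup's share of the records for the given attribute."""
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(records, attribute, reference_shares, tolerance=0.5):
    """Flag subgroups whose observed share is below tolerance * expected share.

    reference_shares is a hypothetical mapping of subgroup -> expected share
    in the population the model is meant to serve.
    """
    observed = subgroup_shares(records, attribute)
    flagged = {}
    for group, expected in reference_shares.items():
        actual = observed.get(group, 0.0)  # a subgroup missing entirely counts as 0
        if actual < tolerance * expected:
            flagged[group] = (actual, expected)
    return flagged


# Hypothetical training records and population shares, for illustration only.
training_data = (
    [{"age_band": "18-39"}] * 70
    + [{"age_band": "40-64"}] * 25
    + [{"age_band": "65+"}] * 5
)
population = {"18-39": 0.40, "40-64": 0.35, "65+": 0.25}

print(underrepresented(training_data, "age_band", population))
```

With these made-up numbers, only the `65+` group is flagged, because it makes up 5% of the data against an assumed 25% of the population.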

How you process your data, combine it, and use it to train models will affect how those models behave downstream.

The same applies if you use third-party models. The way a third-party vendor collects data, aggregates it, and uses it to train their models affects your downstream applications.

Bottom line: data etiquette applies both to the models you build yourself and to the third-party models you use.

Explainability

AI explainability is the ability of an AI system to provide the reasoning behind a particular decision, prediction, or recommendation. Explainability may not be critical for many AI applications, such as email spam filtering, grammar correction, and product recommendation systems.

However, in fields such as healthcare, law enforcement, and any field where human life, livelihood, and safety are at stake, being able to justify and explain decisions is critical to building trust in AI systems.

For example, if an AI system predicts that a patient has a high risk of lung cancer, why did it arrive at that prediction? Insight into how the AI reached its decision can help the doctor judge whether the recommendation is reliable.
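One simple form of explainability, shown here as a sketch, is available for linear models such as logistic regression: each feature’s contribution to the log-odds score is its weight times its value (relative to a zero baseline), so the contributions can be ranked to show what drove a prediction. The features, weights, and bias below are hypothetical and do not come from any real clinical model; more complex models generally need dedicated explanation techniques.

```python
import math

# Hypothetical weights of a linear (logistic) risk model; not a real clinical model.
WEIGHTS = {"smoking_years": 0.08, "age": 0.03, "nodule_size_mm": 0.15}
BIAS = -6.0


def predict_with_explanation(patient):
    """Return a risk probability plus each feature's contribution to the log-odds."""
    contributions = {name: w * patient[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-score))  # logistic (sigmoid) function
    # Sort so the features that pushed the score up the most come first.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return probability, ranked


patient = {"smoking_years": 30, "age": 65, "nodule_size_mm": 12}
prob, reasons = predict_with_explanation(patient)
print(f"risk={prob:.2f}")
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")
```

A doctor reading the ranked contributions can check whether the factors driving the prediction make clinical sense before trusting it.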

When it comes to explainability, ask: does your system need to be explainable, and if so, will you be able to follow its reasoning?

Usage etiquette

AI usage etiquette has to do with how you integrate AI into your workflow. Is it the sole decision maker, an assistant to a human, or a second opinion? How you use AI can make a huge difference to the risk that consumers and society face when the AI gets it wrong.

For example, when you use AI to filter email spam, it is the sole decision maker. Letting the AI be the sole decision maker in this scenario can be considered low risk: if it makes the wrong decision, spam may end up in your inbox or a valid email may be filtered as spam, but you can still mark individual emails as spam or check your spam folder.

However, in an application area such as medical diagnosis and treatment planning, asking an AI system alone to decide on a cancer treatment plan for a patient is a big risk. If a treatment plan proves ineffective because it relied solely on the AI tool, who is to blame: the doctor, the AI tool, or the hospital that decided to use AI in the first place?

In addition, usage etiquette also depends on the accuracy of the model. Using a low-accuracy model in a high-risk situation poses a greater risk than using a high-accuracy model in a low-risk one.
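The interaction between accuracy and risk can be illustrated with a back-of-the-envelope calculation: expected cost of errors = error rate × cost per error × number of decisions. The sketch below assumes every error costs the same and uses hypothetical costs (1 unit for a misfiled email, 1,000 units for a wrong treatment plan) and a hypothetical volume of 1,000 decisions; real harms are rarely this easy to quantify.

```python
def expected_error_cost(accuracy, cost_per_error, decisions=1000):
    """Expected total cost of wrong decisions, assuming a fixed cost per error.

    This deliberately ignores that different kinds of errors (e.g. false
    positives vs. false negatives) usually have very different costs.
    """
    error_rate = 1 - accuracy
    return error_rate * cost_per_error * decisions


# Hypothetical scenarios: a high-accuracy spam filter vs. a lower-accuracy treatment planner.
spam_filter = expected_error_cost(accuracy=0.95, cost_per_error=1)
treatment_planner = expected_error_cost(accuracy=0.80, cost_per_error=1000)
print(round(spam_filter), round(treatment_planner))
```

Under these assumptions, the less accurate model in the high-stakes setting carries a far larger expected cost, even though it makes only four times as many errors.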

With all of this in mind, the question to ask here is: What are the risks of using your AI tool as you envision it, given its current performance? Ideally, you want it to have as little negative impact as possible on people’s safety (physical and cyber) and livelihoods. If the risks are high, ask whether they are worth taking.

Development risks

In some cases, developing an AI tool can itself lead to problems, even when no harm is intended. For example, building an AI tool that can guess login passwords as an interesting R&D problem can have unintended consequences if it falls into the hands of a bad actor.

It’s one thing for law enforcement to develop such “dangerous” tools to catch predators in a controlled environment. It’s another matter entirely if the development team intends to open source the tool, essentially releasing it to the public. The latter can have many undesirable consequences, and developers must be responsible not only for how they use the tool but also for how they share it.

The question you want to ask when it comes to development risks is: Have you considered the risks of developing your AI tool and distributing it through the intended channels?

Summary

As artificial intelligence becomes increasingly integrated into our lives, we must consider its ethical implications. In this article, we specifically explore what AI ethics means when building and using AI-enabled tools, and the questions to consider for each ethical element.

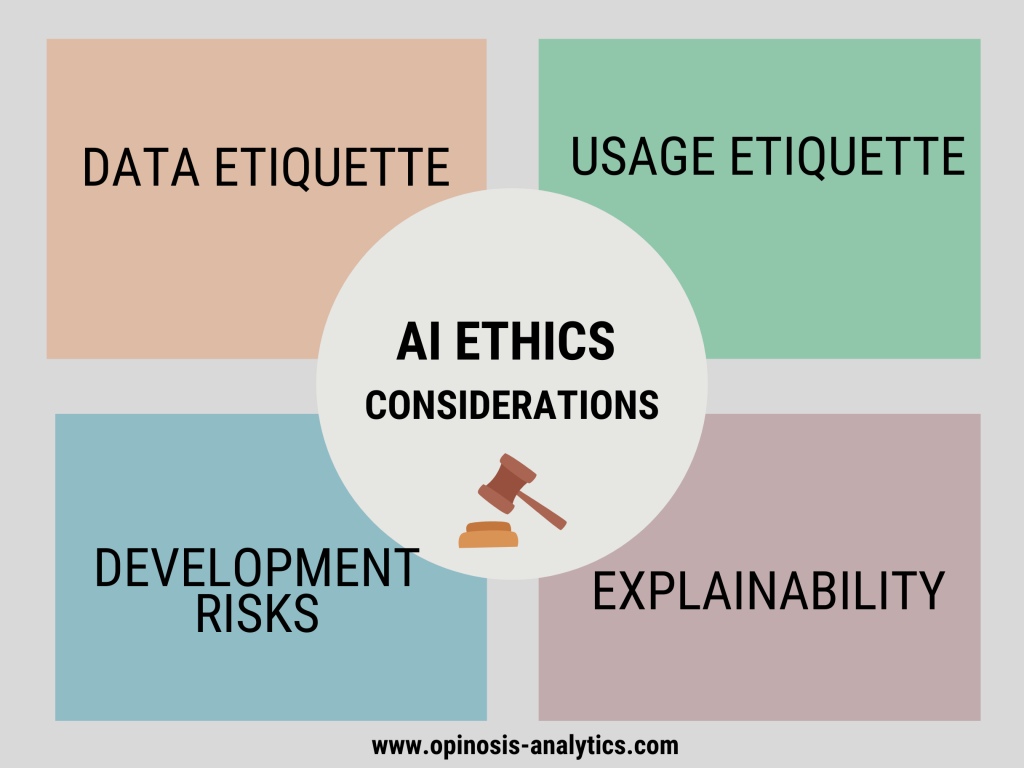

In summary, there are four broad considerations when it comes to the ethics of artificial intelligence:

- Data etiquette: Do you know the quality of the data used to train the models?

- Explainability: Can you intuitively explain your AI solution’s decisions?

- Usage etiquette: How will AI fit into your workflow, and what are the risks in that scenario?

- Development risks: Have you considered the broader implications of developing and deploying your AI tool?

Each of these considerations focuses on a different way AI can potentially cause harm. In a future article, we will discuss the common ethical challenges of artificial intelligence systems.

Keep learning and succeed with AI

- Join my AI Integrated Newsletter, which demystifies AI and teaches you how to successfully deploy AI to drive profitability and growth in your business.

- Read The Business Case for AI to learn the applications, strategies, and best practices for succeeding with AI (the book is used by select organizations, including government agencies, automakers like Mercedes-Benz, beverage manufacturers, and e-commerce companies like Flipkart).

- Work directly with me to improve your organization’s understanding of AI, accelerate AI strategy development, and get meaningful results from every AI initiative.

The post AI Ethics Series: What is AI Ethics appeared first on Opinosis Analytics.

