
Code-change reviews are a critical part of the software development process at scale, taking a significant amount of the code authors' and the code reviewers' time. As part of this process, the reviewer inspects the proposed code and asks the author for code changes through comments written in natural language. At Google, we see millions of reviewer comments per year, and authors require an average of ~60 minutes of active shepherding time between sending a change for review and finally submitting it. In our measurements, the active work time that the code author needs to address reviewer comments grows almost linearly with the number of comments. However, with machine learning (ML), we have an opportunity to automate and streamline the code review process, e.g., by proposing code changes based on a comment's text.

Today we describe applying recent advances of large sequence models in a real-world setting to automatically resolve code review comments in the day-to-day development workflow at Google (publication forthcoming). As of today, code-change authors at Google address a substantial number of reviewer comments by applying an ML-suggested edit. We expect this to reduce the time spent on code reviews by hundreds of thousands of hours annually at Google scale. Unsolicited, overwhelmingly positive feedback highlights that ML-suggested code edits increase Googlers' productivity and allow them to focus on more creative and complex tasks.

Predicting the code edit

We started by training a model that predicts the code edits needed to address reviewer comments. The model is pre-trained on various coding tasks and related developer activities (e.g., renaming a variable, repairing a broken build, editing a file). It is then fine-tuned for this specific task with reviewed code changes, the reviewer comments, and the edits the author made to address those comments.
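To make the fine-tuning data concrete, here is a minimal sketch of how one training pair might be assembled from a reviewed code change: the input inlines the reviewer comment next to the line it refers to, and the target is the file after the author's edit. The `ReviewExample` class, the `build_model_input` and `to_training_pair` helpers, and the `<file ...>`/`<comment>` marker format are all hypothetical; the post does not describe Google's actual input format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewExample:
    """One (code, comment) -> edit training pair. Hypothetical schema."""
    file_path: str
    code_before: str    # file contents when the reviewer left the comment
    comment_line: int   # 1-based line the comment is attached to
    comment_text: str   # reviewer's natural-language comment
    code_after: str     # file contents after the author addressed the comment


def build_model_input(example: ReviewExample) -> str:
    """Insert the comment right after the line it refers to.

    The marker syntax is an illustrative assumption, not the real format.
    """
    lines = example.code_before.splitlines()
    marker = f"<comment>{example.comment_text}</comment>"
    # Index `comment_line` in a 0-based list is just after the 1-based line.
    lines.insert(example.comment_line, marker)
    return f"<file {example.file_path}>\n" + "\n".join(lines)


def to_training_pair(example: ReviewExample) -> tuple[str, str]:
    """Return (model input, target); here the target is the full edited file."""
    return build_model_input(example), example.code_after
```

Predicting the whole edited file keeps the sketch simple; a real system could instead predict only a diff to shorten the target.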

An example of ML-suggested edits for common refactorings in the code.

Google uses a monorepo, a single repository for all of its software artifacts, which allows our training dataset to include all unrestricted code used to build Google's most recent software, as well as previous versions.

To improve the model quality, we iterated on the training dataset. For example, we compared the model performance for datasets with a single reviewer comment per file to datasets with multiple comments per file, and experimented with classifiers to clean up the training data based on a small, curated dataset to choose the model with the best offline precision and recall metrics.

Serving infrastructure and user experience

We designed and implemented the feature on top of the trained model, focusing on the overall user experience and developer efficiency. As part of this, we explored different user experience (UX) alternatives through a series of user studies. We then refined the feature based on insights from an internal beta (i.e., a test of the feature in development), including user feedback (e.g., a "Was this helpful?" button next to the suggested edits).

The final model was calibrated for a target precision of 50%. That is, we tuned the model and the suggestion filtering so that 50% of suggested edits on our evaluation dataset are correct. In general, increasing the target precision reduces the number of suggested edits shown, while decreasing it leads to more incorrect suggested edits. Incorrect suggested edits cost developers time and reduce their trust in the feature. We found that a target precision of 50% provides a good balance.
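Calibrating for a target precision can be framed as choosing a confidence threshold on a labeled evaluation set: rank suggestions by model confidence and pick the lowest threshold whose kept suggestions still reach the target, which maximizes how many edits are shown. The sketch below assumes each evaluation suggestion has a scalar confidence and a correct/incorrect label; `calibrate_threshold` is a hypothetical name, and the additional suggestion filtering mentioned above is not modeled.

```python
from typing import Optional, Sequence


def calibrate_threshold(
    confidences: Sequence[float],
    correct: Sequence[bool],
    target_precision: float = 0.5,
) -> Optional[float]:
    """Return the lowest threshold t such that suggestions with
    confidence >= t have precision >= target_precision, or None."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    # Most confident first: after visiting k items, the kept set is the top k.
    ranked = sorted(zip(confidences, correct), key=lambda p: p[0], reverse=True)
    best: Optional[float] = None
    num_correct = 0
    for i, (conf, is_correct) in enumerate(ranked):
        num_correct += int(is_correct)
        kept = i + 1
        # A threshold keeps every tied score, so only evaluate at the end of a tie run.
        end_of_ties = kept == len(ranked) or ranked[kept][0] != conf
        if end_of_ties and num_correct / kept >= target_precision:
            best = conf  # confidences only decrease, so this is the lowest so far
    return best
```

For example, with made-up scores `[0.9, 0.8, 0.7, 0.6, 0.5, 0.4]` and labels `[True, False, True, False, False, False]`, a threshold of 0.6 keeps four suggestions, two of them correct, which exactly meets a 50% target. Raising the target moves the threshold up and shows fewer suggestions, matching the trade-off described above.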

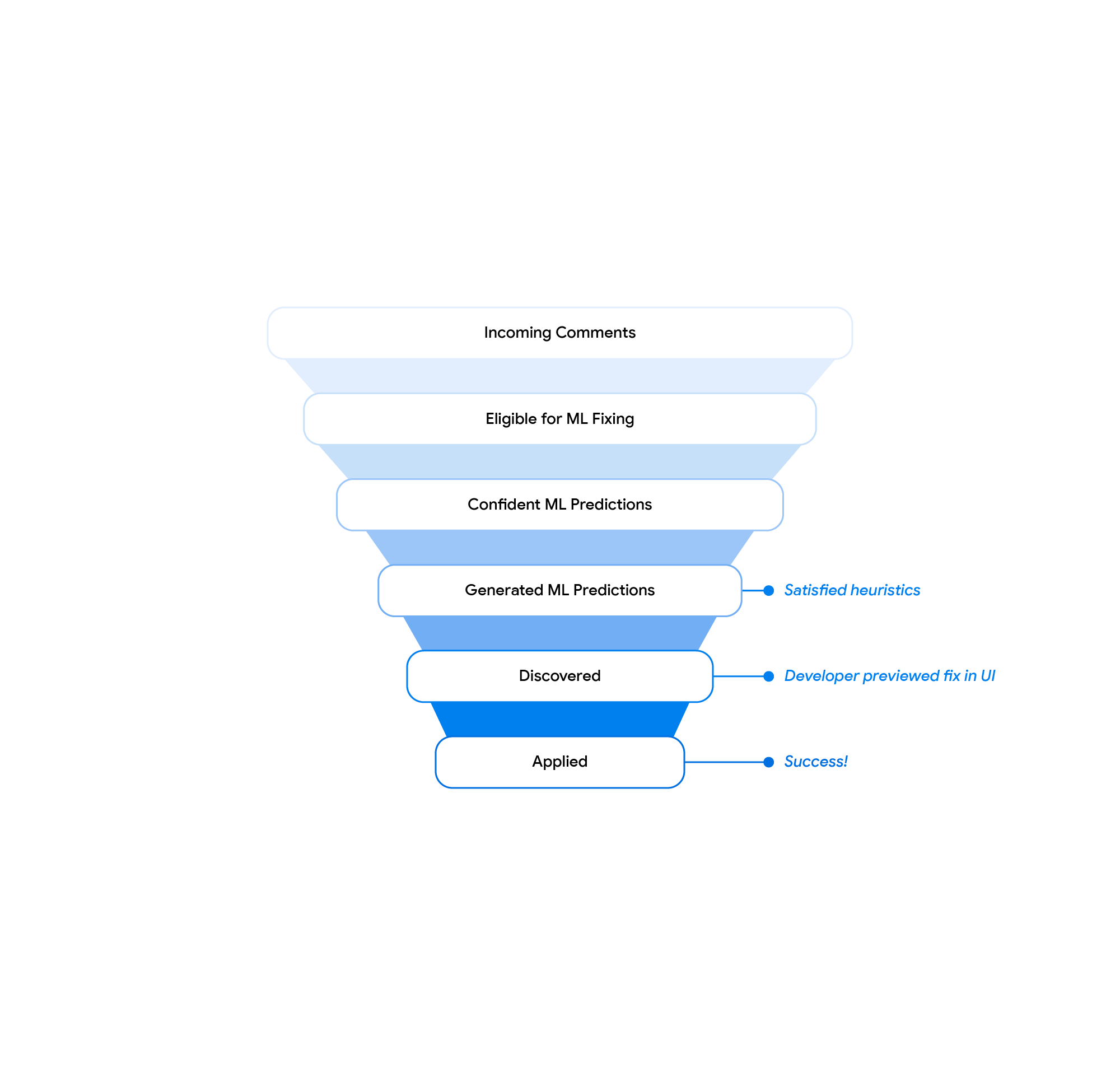

At a high level, for every new reviewer comment, we generate the model input in the same format that is used for training, query the model, and generate the suggested code edit. If the model is confident in the prediction and a few additional heuristics are satisfied, we send the suggested edit to downstream systems. The downstream systems, i.e., the code review frontend and the integrated development environment (IDE), expose the suggested edits to the user and log user interactions, such as preview and apply events. A dedicated pipeline collects these logs and generates aggregate insights, e.g., the overall acceptance rates reported in this blog post.
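The serving decision described in this paragraph can be sketched as a small gating function: query the model, then publish the suggestion only if it clears the confidence threshold and every heuristic. The `Suggestion` type, the two heuristics, `MAX_ADDED_LINES`, and the `model` and `publish` callables are hypothetical stand-ins; the post does not say which heuristics or service interfaces Google uses.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple

MAX_ADDED_LINES = 50  # hypothetical size limit for a single suggested edit


@dataclass(frozen=True)
class Suggestion:
    original_code: str
    edited_code: str
    confidence: float


Heuristic = Callable[[Suggestion], bool]


def edit_changes_code(s: Suggestion) -> bool:
    """Hypothetical heuristic: drop no-op and whitespace-only predictions."""
    return "".join(s.edited_code.split()) != "".join(s.original_code.split())


def edit_is_bounded(s: Suggestion) -> bool:
    """Hypothetical heuristic: drop predictions that add too many lines."""
    added = len(s.edited_code.splitlines()) - len(s.original_code.splitlines())
    return added <= MAX_ADDED_LINES


def maybe_publish(
    model_input: str,
    original_code: str,
    model: Callable[[str], Tuple[str, float]],
    threshold: float,
    heuristics: Iterable[Heuristic],
    publish: Callable[[Suggestion], None],
) -> Optional[Suggestion]:
    """Query the model and publish the suggestion only if it passes all gates."""
    edited_code, confidence = model(model_input)
    suggestion = Suggestion(original_code, edited_code, confidence)
    if confidence < threshold:
        return None
    if not all(check(suggestion) for check in heuristics):
        return None
    publish(suggestion)  # e.g., hand off to the code review frontend and the IDE
    return suggestion
```

Keeping each heuristic as an independent predicate makes it easy to add a new filter when a recurring failure pattern is found, without touching the model or the threshold.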

Architecture of the ML-suggested edits infrastructure. We process code and infrastructure from multiple services, get the model predictions, and surface the predictions in the code review tool and the IDE.

The developer interacts with the ML-suggested edits in the code review tool and the IDE. Based on insights from the user studies, the integration into the code review tool is most suitable for a streamlined review experience. The IDE integration provides additional functionality and supports 3-way merging of the ML-suggested edits (left in the figure below) with conflicting local changes on top of the reviewed code state (right) into the merge result (center).
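One small piece of this is deciding whether a merge is needed at all: the suggested edit was computed against the reviewed code state, so it conflicts only if the author's local changes touch the lines it rewrites. The sketch below uses Python's `difflib.SequenceMatcher` to find the base lines changed locally and checks them against the edit's line range. The function name and the 0-based, half-open range convention are assumptions, and a real 3-way merge tool resolves far more than this check.

```python
import difflib
from typing import Sequence


def edit_conflicts_with_local_changes(
    base: Sequence[str],
    local: Sequence[str],
    edit_start: int,
    edit_end: int,
) -> bool:
    """Return True if local changes overlap base lines [edit_start, edit_end)
    that the ML-suggested edit rewrites (0-based, half-open range)."""
    matcher = difflib.SequenceMatcher(None, base, local, autojunk=False)
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        if tag == "insert":
            # Pure insertion before base line i1: a conflict only if it
            # lands strictly inside the edited range.
            if edit_start < i1 < edit_end:
                return True
        elif i1 < edit_end and edit_start < i2:
            # "replace" or "delete" of base lines [i1, i2) overlaps the edit.
            return True
    return False
```

When this returns `False`, the suggested edit can be applied directly to the author's current file; when it returns `True`, a merge view like the one in the IDE is needed.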

3-way merge UX in the IDE.

Results

Offline evaluations indicate that the model addresses 52% of comments with a target precision of 50%. The online metrics of the beta and the full internal launch confirm these offline metrics, i.e., we see model suggestions above our target model confidence for around 50% of all relevant reviewer comments. 40% to 50% of all previewed suggested edits are applied by code authors.

We used the "not helpful" feedback during the beta to identify recurring failure patterns of the model. We implemented serving-time heuristics to filter these out and thus reduce the number of incorrect predictions shown. With these changes, we traded quantity for quality and observed an increased real-world acceptance rate.

Code review tool UX. The suggestion is shown as part of the comment and can be previewed, applied, and rated as helpful or not helpful.

Our beta launch showed a discoverability challenge: code authors previewed only ~20% of all generated suggested edits. We modified the UX and introduced a prominent "Show ML-edit" button (see the figure above) next to the reviewer comment, leading to an overall preview rate of ~40% at launch. We also found that suggested edits in the code review tool were often not applicable because of conflicting changes the author made during the review process. We addressed this with a button in the code review tool that opens the IDE in a merge view for the suggested edit. We now observe that more than 70% of applied suggestions are applied in the code review tool and fewer than 30% in the IDE. Together, these changes doubled the overall fraction of reviewer comments addressed with an ML-suggested edit from the beta to the full internal launch. At Google scale, these results help automate the resolution of hundreds of thousands of comments each year.

Suggestion filtering funnel.
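The funnel numbers in this section (share of comments with a shown suggestion, preview rate, apply rate, where edits are applied, and the overall fraction of comments addressed) are ratios over logged interaction events. Here is a minimal sketch of that aggregation, assuming a hypothetical per-comment `CommentRecord`; the field names are illustrative, not the actual log schema.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class CommentRecord:
    """Logged outcome for one reviewer comment (hypothetical schema)."""
    has_suggestion: bool       # a suggestion passed all filters and was shown
    previewed: bool            # the author opened the suggested edit
    applied_in: Optional[str]  # "code_review", "ide", or None if not applied


def funnel_metrics(records: Iterable[CommentRecord]) -> Dict[str, float]:
    """Compute funnel ratios; each stage is a subset of the previous one."""
    records = list(records)
    shown = [r for r in records if r.has_suggestion]
    previewed = [r for r in shown if r.previewed]
    applied = [r for r in previewed if r.applied_in is not None]

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "suggestion_rate": ratio(len(shown), len(records)),
        "preview_rate": ratio(len(previewed), len(shown)),
        "apply_rate": ratio(len(applied), len(previewed)),
        "applied_in_code_review": ratio(
            sum(r.applied_in == "code_review" for r in applied), len(applied)),
        "addressed_rate": ratio(len(applied), len(records)),
    }
```

Because `addressed_rate` is the product of the stage ratios, raising the preview rate (as the "Show ML-edit" button did) lifts the overall fraction of addressed comments even when model quality is unchanged.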

We see ML-suggested edits addressing a wide range of reviewer comments in production. This includes simple localized refactorings and refactorings that are spread within the code, as shown in the examples throughout this blog post. The feature also addresses longer and less formally worded comments that require code generation, refactorings, and imports.

Example of a suggestion for a longer and less formally worded comment that requires code generation, refactorings, and imports.

The model can also respond to complex comments and produce extensive code edits (shown below). The generated test case follows the existing unit test pattern while changing the details as described in the comment. Additionally, the edit suggests a descriptive test name that reflects the test semantics.

Example of the model's ability to respond to complex comments and produce extensive code edits.

Conclusion and future work

In this post, we introduced an ML-assistance feature to reduce the time spent on code review related changes. At the moment, a substantial share of all actionable code review comments in supported languages are addressed with applied ML-suggested edits at Google. A 12-week A/B experiment across all Google developers will further measure the impact of the feature on overall developer productivity.

We are working on improvements throughout the whole stack. This includes increasing the quality and recall of the model and building a more streamlined experience for the developer with improved discoverability throughout the review process. As part of this, we are investigating the option of showing suggested edits to reviewers before they write comments, and expanding the feature in the IDE so that code-change authors can get suggested code edits for natural-language commands.

Acknowledgments

This is the work of many people in the Google Core Systems & Experiences team, Google Research, and DeepMind. We would like to specifically thank Peter Choi for his cooperation, and all our team members for their key contributions and helpful advice, including Marcus Revai, Gabriela Surita, Maxim Tabachnik, Jacob Austin, Nimesh Gelani, Dan Zheng, Peter Josling, Mariana Stariolo, Chris Gorgolewski, Sasha Warkewisser, Katja Grunwedel, Alberto Elizondo, Tobias Welp, Paige Bailey, Pierre-Antoine Manzagol, Pascal Lamblin, Chengji Gu, Petros Maniatis, Henrik Michalewski, Sarah Wiltbergaon, A Madh Yevskiy, Niranjan Tulpule, Zubin Ghahraman, Juanjo Karin, Danny Tarlow, Kevin Villela, Stoyan Nikolov, David Tattersall, Boris Bokovsky, Kathy Nix, Mehdi Ghisas, Louis K. Cobo, Yujia Li, David Choi, Christoph Molnander, Weitel, Brett Wiltshire, Laurent Le Brun, Mingpan Guo, Herman Lowes, Jonas Matthes, and Savin Dance.
