[ad_1]

Author: Chris Mauk, Jonas Muller

This article shows how data-driven AI tools can improve a sophisticated large language model (LLM; a.k.a. Foundation model). These tools optimize the database itself rather than changing the model architecture/hyperparameters – running the exact same tuned code on the optimized database boosts test suite performance by 37% on the courtesy classification task studied here. We achieve similar accuracy through the same data-driven AI process in LLM 3 latest models that can be fine-tuned with the OpenAI API: Davinci, Ada, and Curie. These are variants of GPT-3/ChatGPT base LLM.

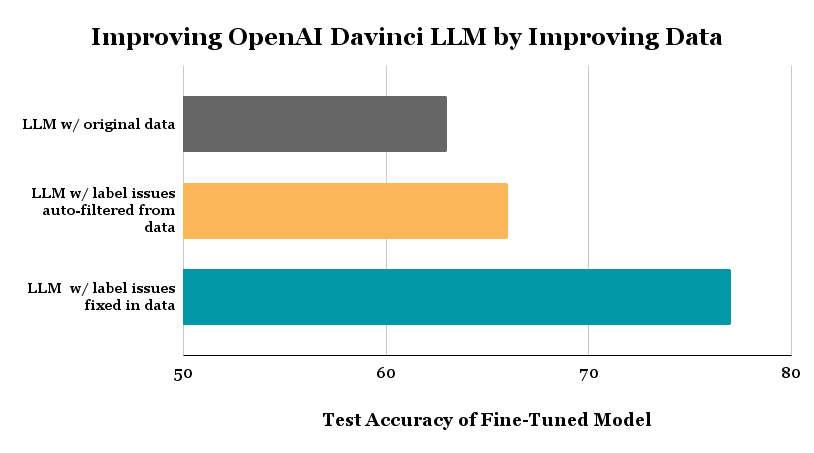

The graph above shows the test accuracy achieved for 3-class text courtesy classification with the same LLM refined code (DaVinci setup via OpenAI API) running on 3 different datasets: (1) original dataset labeled by human annotators, ( 2 ) an automatically filtered version of this data set, in which we removed examples automatically scored as mislabeled by Confident Learning, (3) a cleaned version of the original data, in which we manually labeled examples scored as mislabeled (rather than filtering this examples).

background

Annotated data powers AI/ML in the enterprise, but real-world datasets have been found to contain 7-50% annotation errors. Imperfectly labeled text data hampers the training (and evaluation) of ML models on tasks such as intent recognition, entity recognition, and sequence generation. Although pre-trained LLMs are equipped with a lot of world knowledge, their performance is adversely affected by noisy training data (as pointed out by OpenAI). Here, we demonstrate a data-driven technique to reduce the effects of label noise without changing the model architecture, hyperparameters, or training code. Thus, these data quality improvement techniques should remain valid for future advanced LLMs such as GPT-10 as well.

LLMs acquire strong generative and discriminative abilities after prior training on most texts available on the Internet. However, ensuring that LLM produces reliable results for a specific business use case often requires additional training on actual data from that domain labeled with the desired results. This domain training is known as Fine tuning LLM and can be done through the APIs offered by OpenAI. Flaws in the data annotation process inevitably lead to label errors in these domain-specific training data, challenging the proper specification and evaluation of LLM.

Here are quotes from OpenAI about their strategy for training state-of-the-art AI systems:

“Since the training data shapes the capabilities of any model being trained, data filtering is a powerful tool for limiting unwanted model capabilities.“

“We prioritized filtering out all the bad data rather than leaving all the good data. This is because we can always refine our model with more data later to teach it new things, but it is much harder for a model to forget what it has already learned.”

Obviously, data quality is vital. Some organizations, like OpenAI, manually sort through the issues in their data to produce the best models, but it’s a ton of work! Data-driven AI is the emerging science of algorithms to detect data problems so you can systematically improve your database more easily through automation.

Our LLM in these experiments is the Davinci model from OpenAI, which is their most efficient GPT-3 model on which ChatGPT is based.

Here we consider a 3-class variant of the Stanford politeness data set that has text phrases like: Immoral, neutralor polite. Annotated by human raters, some of these labels are inherently low quality.

This article goes through the following steps:

- Use original data to specify various cutting-edge LLMs via the OpenAI API: Davinci, Ada and Curie.

- Determine the baseline accuracy of each refined model on the test set with high-quality labels (determined by consensus and high agreement among many human annotators who evaluated each test instance).

- Use self-learning algorithms to automatically identify hundreds of mislabeled examples.

- Extract data from the dataset of automatically labeled issues and then specify the exact same LLMs in the automatically filtered dataset. This simple step reduces the error in Davinci model predictions by 8%!

- introduced to A without code A solution to efficiently fix label errors in a dataset, and then specify the exact same LLM in a fixed dataset. This reduces the error in Davinci model predictions by 37%!

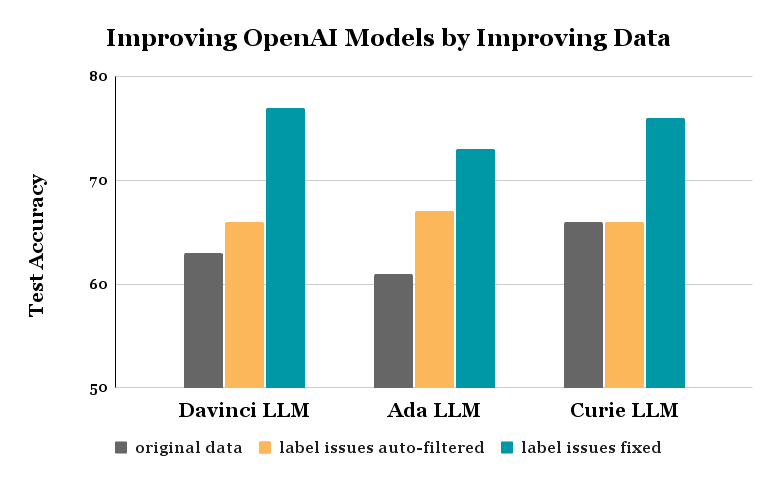

Similar gains are achieved through the same processes for the Ada and Curie models – in all cases nothing has changed in either the model or the specification code!

Here is a notebook where you can reproduce the results shown in this article and understand the code to implement each step.

You can download the train and test suite here: train test

Our training dataset contains 1916 examples, each labeled by a single human annotator, and thus some may be unreliable. The test data set contains 480 examples, each labeled by five annotations, and we use their consensus labels as a high-quality approximation of the true politeness (a measure of test accuracy relative to these consensus labels). To ensure a fair comparison, this test data set remains fixed throughout our experiments (all label cleaning/data modification is done on the training set only). We will reformat these CSV files into the jsonl file type required by OpenAI’s sophisticated API.

Here’s what our code looks like to specify the Davinci LLM 3-class classification and evaluate its test accuracy:

!openai api fine_tunes.create -t "train_prepared.jsonl" -v "test_prepared.jsonl" --compute_classification_metrics --classification_n_classes 3 -m davinci

--suffix "baseline"

>>> Created fine-tune: ft-9800F2gcVNzyMdTLKcMqAtJ5After the job is done, we read the fine_tunes.results endpoint to see the test accuracy achieved by fine-tuning this LLM on the original training dataset.

!openai api fine_tunes.results -i ft-9800F2gcVNzyMdTLKcMqAtJ5 > baseline.csv

df = pd.read_csv('baseline.csv')

baseline_acc = df.iloc[-1]['classification/accuracy']

>>> Fine-tuning Accuracy: 0.6312500238418579Our baseline Davinci LLM achieves a test accuracy of 63% when well fitted to the raw training data, possibly with noisy labels. Even state-of-the-art LLMs such as the Davinci model produce unsatisfactory results for this classification task because the data labels are noisy?

Confident Learning is a newly developed set of algorithms to assess which data are mislabeled in a classification database. These algorithms require out of sample Predict the class probabilities for all of our training examples and use a new form of calibration to determine when to trust the model for a given label in the data.

To obtain these estimated probabilities, we:

- Use the OpenAI API to compute embeddings from the Davinci model for all of our training examples. You can download the builds here.

- Fit a logistic regression model to the constructs and labels in the original data. We use 10-fold cross-validation, which allows us to generate sample predicted class probabilities for each example in the training data set.

# Get embeddings from OpenAI.

from openai.embeddings_utils import get_embedding

embedding_model = "text-similarity-davinci-001"

train["embedding"] = train.prompt.apply(lambda x: get_embedding(x, engine=embedding_model))

embeddings = train["embedding"].values

# Get out-of-sample predicted class probabilities via cross-validation.

from sklearn.linear_model import LogisticRegression

model = LogisticRegression()

labels = train["completion"].values

pred_probs = cross_val_predict(estimator=model, X=embeddings, y=labels, cv=10, method="predict_proba")The cleanlab package provides an open source Python implementation of Confident Learning. With a single line of code, we can run confident learning using the model’s predicted probabilities to estimate which examples have label problems in our training dataset.

from cleanlab.filter import find_label_issues

# Get indices of examples estimated to have label issues:

issue_idx = find_label_issues(labels, pred_probs,

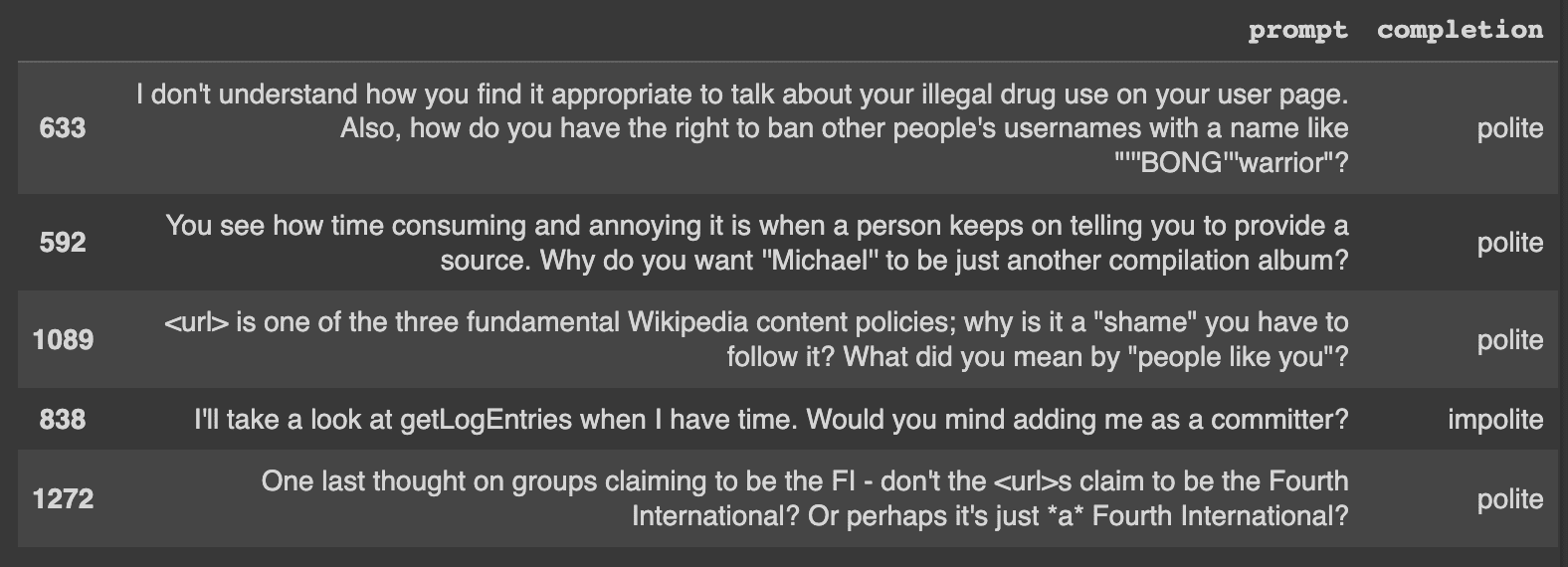

return_indices_ranked_by='self_confidence') # sort indices by likelihood of label error Let’s take a look at some label problems that are automatically identified in our database. Here’s an example that’s clearly mislabeled:

- phrase: I’ll look into getLogEntries when I have time. Would you mind adding me as an artist?

- label: Immoral

Labeling errors like this are the reason why we may see poor model results.

Caption: Some basic errors that were automatically detected.

Note: find_labels_issues can determine which of the given Labels Potentially invalid only out of sample pred_probs.

Now that we have the indices of potentially mislabeled examples (identified by automated techniques), let’s remove these 471 examples from our training dataset. The exact same Davinci LLM specification on the filtered dataset achieves a test accuracy of 66% (on the same test data where our original Davinci LLM achieved 63% accuracy). we Reduced model error rate by 8% using less But better quality Training data!

# Remove data flagged with potential label error.

train_cl = train.drop(issue_idx).reset_index(drop=True)

format_data(train_cl, "train_cl.jsonl")

# Train a more robust classifier with less erroneous data.

!openai api fine_tunes.create -t "train_cl_prepared.jsonl" -v "test_prepared.jsonl" --compute_classification_metrics --classification_n_classes 3 -m davinci --suffix "dropped"

# Evaluate model on test data.

!openai api fine_tunes.results -i ft-InhTRQGu11gIDlVJUt0LYbEx > autofiltered.csv

df = pd.read_csv('autofiltered.csv')

dropped_acc = df.iloc[-1]['classification/accuracy']

>>> 0.6604166626930237Instead of automatically fixing auto-detected label problems through filtering, a smarter (though more difficult) way to improve our dataset would be to manually fix label problems. This simultaneously removes a noisy data point and adds an accurate one, but making such corrections manually is difficult. We did this manually using Cleanlab Studio, an enterprise data correction interface.

After replacing the bad labels we find with more suitable ones, we edit the exact same Davinci LLM into the manually edited database. The resulting model achieves 77% Accuracy (on the same test dataset as before), which is a 37% error reduction From our original version of this model.

# Load in and format data with the manually fixed labels.

train_studio = pd.read_csv('train_corrected.csv')

format_data(train_studio, "train_corrected.jsonl")

# Train a more robust classifier with the fixed data.

!openai api fine_tunes.create -t "train_corrected_prepared.jsonl" -v "test_prepared.jsonl"

--compute_classification_metrics --classification_n_classes 3 -m davinci --suffix "corrected"

# Evaluate model on test data.

!openai api fine_tunes.results -i ft-MQbaduYd8UGD2EWBmfpoQpkQ > corrected .csv

df = pd.read_csv('corrected.csv')

corrected_acc = df.iloc[-1]['classification/accuracy']

>>> 0.7729166746139526Note: Throughout this process, we will never change the code model architecture/hyperparameters, training or data preprocessing! All improvements come strictly from increasing the quality of our training data, leaving room for additional optimizations on the modeling side.

We repeated the same experiment with two other recent LLM models that OpenAI offers for fine-tuning: Ada and Curie. The resulting improvements are similar to those achieved by the Davinci model.

Data-driven AI is a powerful paradigm for processing noisy data with AI/automated techniques rather than tedious manual efforts. There are now tools to help you efficiently find and resolve data and labeling issues to improve any ML model (not just LLM) for most data (not just text, but images, audio, tabular data, etc.). such tools to use Diagnose/fix any ML model data and then improve the data for that Any other ML model. These tools will remain valid in ML models with future advancements like GPT-10, and will only get better at identifying problems when using more accurate models!

Practice data-driven AI to systematically develop better data through AI/automation. This allows you to leverage your unique domain knowledge rather than correct generic data such as label errors.

Chris Mauk is a data scientist at Cleanlab.

[ad_2]

Source link