AI ethics is the responsible release and implementation of artificial intelligence, paying attention to several considerations, from data labeling to tool development risks, as discussed in the previous article. In this article, we discuss some of the ethical issues that arise with artificial intelligence systems, especially machine learning systems, when the ethical considerations of AI are ignored, often inadvertently.

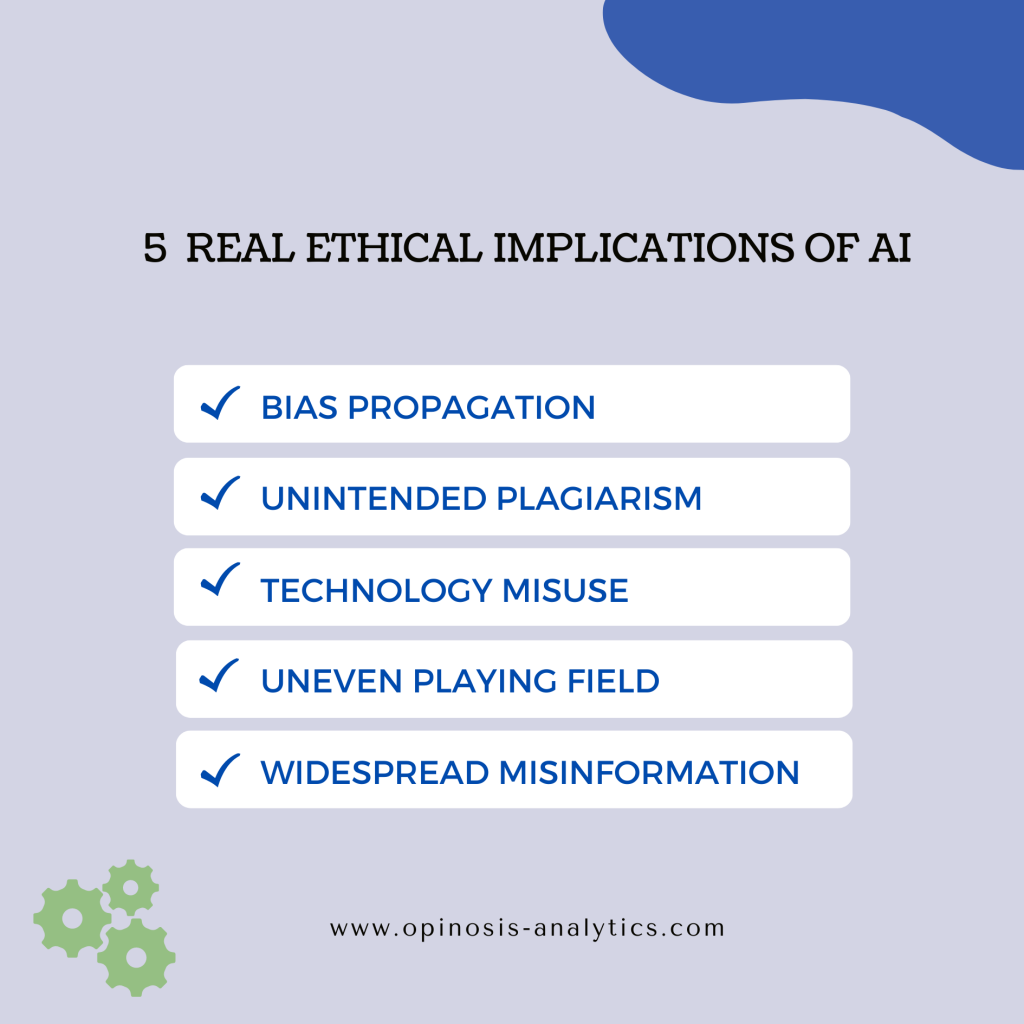

5 Common AI Ethical Issues

1. Prevalence of bias

While there is a strong belief that algorithms are less biased than humans, AI systems have been known to propagate our conscious and unconscious biases.

For example, there are known recruiting tools that algorithmically “learned” to reject female candidates because they learned that men are preferred in the technical workforce.

Even facial recognition systems are notorious for making disproportionate errors on minority groups and people of color. For example, when researcher Joy Buolamwini studied the accuracy of facial recognition systems from various companies, she found that the error rate for light-skinned men was no more than 1%. However, for women with dark skin, the errors were much more significant and reached 35%. Even the most famous artificial intelligence systems have not been able to accurately identify female celebrities of color.
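A disparity like this only shows up when error rates are broken down per subgroup rather than reported as one aggregate number. The following is a minimal sketch of that breakdown; the function name, the record layout `(group, predicted, actual)`, and the toy data are all assumptions made for illustration, not results from any real system.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: fraction of wrong predictions}.

    Each record is a (group, predicted, actual) tuple (hypothetical layout).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical toy data, not measurements from any real system.
toy_records = [
    ("group_a", "male", "male"),
    ("group_a", "male", "male"),
    ("group_a", "female", "female"),
    ("group_a", "male", "male"),
    ("group_b", "male", "female"),
    ("group_b", "female", "female"),
    ("group_b", "male", "female"),
    ("group_b", "female", "female"),
]

rates = error_rate_by_group(toy_records)
print(rates)
```

On this toy data the overall error rate is 25%, which hides the fact that every error falls on one group.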

So what is the root cause of AI bias?

Data. AI systems today are only as good as the data they are trained on; if the data is unrepresentative, biased towards a particular group, or otherwise unbalanced, the AI system will learn this skew and propagate the bias.

Data bias can be caused by a number of factors. For example, if historically certain groups of people have been discriminated against, this discrimination will be very well captured in the data.

Another cause of data bias can be a company’s data storage processes, or lack thereof, which cause AI systems to learn from skewed rather than representative data samples. Even using a snapshot of the Internet to train your models can mean your models have learned the biases in that snapshot. This is why large language models (LLMs) are not free from bias when they weigh in on subjective topics.

Bias in data can also be a development error, where the data used to develop the model was not properly sampled, resulting in imbalanced subgroup samples.

Bottom line: When there is limited oversight over the quality of the data used to train the model, various unintended biases can occur. We may not know when and where they will surface, especially in an open-ended, multi-task system like an LLM.
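A simple sanity check for imbalanced subgroup samples is to look at each subgroup's share of the training data before any model is built. This is only a sketch: the function name, the 20% threshold, and the toy labels are assumptions chosen for illustration, and a sensible threshold depends on the population the model is meant to serve.

```python
from collections import Counter

def underrepresented_groups(group_labels, min_share=0.2):
    """Return the subgroups whose share of the sample is below min_share.

    min_share is an arbitrary illustrative threshold, not a standard value.
    """
    counts = Counter(group_labels)
    total = len(group_labels)
    return sorted(
        group for group, count in counts.items() if count / total < min_share
    )

# Hypothetical subgroup labels attached to ten training examples.
toy_labels = ["a"] * 8 + ["b"] + ["c"]
flagged = underrepresented_groups(toy_labels)
print(flagged)
```

A check like this catches only missing representation, not historical discrimination that is already encoded in the labels themselves.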

2. Inadvertent plagiarism

Generative AI tools like GPT-3 and ChatGPT learn from massive amounts of web data. These tools are trained to maximize the likelihood of producing meaningful content. In doing so, they can replicate content from the web word for word without any attribution.

How do we know that the generated content is, in fact, unique? What if the supposedly unique generated text is identical to a source on the Internet? Can that source claim plagiarism?

We already see this issue with artwork generators that learn from large amounts of artwork by different artists. An AI tool could end up generating art that combines the work of multiple artists.
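One rough way to probe the first question is to measure how many word sequences in the generated text also appear verbatim in a known source. The sketch below uses word n-gram overlap; the function names and the choice of five-word sequences are assumptions for illustration, and it only detects copying from sources you already have on hand.

```python
def word_ngrams(text, n):
    """Return the set of n-word sequences in text (lowercased, whitespace-split)."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def verbatim_overlap(generated, source, n=5):
    """Fraction of the generated text's n-grams that also occur in the source."""
    generated_ngrams = word_ngrams(generated, n)
    if not generated_ngrams:
        return 0.0  # text shorter than n words: nothing to compare
    shared = generated_ngrams & word_ngrams(source, n)
    return len(shared) / len(generated_ngrams)

# Hypothetical example strings.
source_text = "the quick brown fox jumps over the lazy dog"
copied = verbatim_overlap("The quick brown fox jumps over the lazy dog", source_text)
original = verbatim_overlap(
    "a completely different sentence with no shared phrasing at all", source_text
)
print(copied, original)
```

A high score suggests word-for-word reuse, while a low score does not rule out paraphrased or stylistic borrowing.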

Ultimately, who exactly owns the copyright to the art produced? If the work is too similar to an existing one, it may lead to copyright infringement.

Bottom line: Using the web and public databases to develop models can lead to unintended plagiarism. However, because there is so little AI regulation around the world, we currently have no enforceable solutions.

3. Abuse of technology

Recently, Ukraine’s head of state was portrayed as saying things he never actually said, using a so-called deepfake tool. Such AI tools can create videos or pictures of people saying things they never said. Likewise, AI image generators like DALL·E and Stable Diffusion can be used to create incredibly realistic images of events that never happened.

Such intelligent tools can be used as weapons of war (as we have already seen), to spread disinformation for political advantage, to manipulate public opinion, to commit fraud, and more.

In all of this, the AI is not the bad actor; it does what it was designed to do. The bad actors are the people who abuse artificial intelligence for their own benefit. Furthermore, the companies or teams that create and distribute these AI tools have not considered the wider impact of these tools on society, which is also a problem.

Bottom line: While the misuse of technology is not exclusive to AI, AI tools are so adept at replicating human capabilities that their misuse can go unnoticed and have lasting effects on how we view the world.

4. Uneven playing fields

Algorithms can be easily gamed, and the same goes for AI-powered software, where you can game the underlying algorithms to gain an unfair advantage.

In a LinkedIn post I published, I discussed how people can trick AI hiring tools once the attributes that the system uses in its decision-making are revealed.

While explaining how AI-powered hiring decisions are made is a well-intentioned step toward promoting transparency, it can allow people to game the system. For example, candidates may learn that certain keywords are preferred in the hiring process and stuff their resumes with those keywords, unfairly outranking more qualified candidates.
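To see why keyword stuffing works, consider a deliberately naive screening score that simply counts keyword occurrences. This is a hypothetical toy scorer written for illustration; it is not how any particular hiring product works, and the keywords and resume snippets are made up.

```python
def keyword_score(resume_text, keywords):
    """Naive score: total occurrences of each keyword in the resume text."""
    words = resume_text.lower().split()
    return sum(words.count(keyword.lower()) for keyword in keywords)

# Hypothetical keywords and resume snippets.
preferred_keywords = ["python", "kubernetes", "sql"]
honest_resume = "built data pipelines in python and deployed machine learning models"
stuffed_resume = "python python sql sql kubernetes kubernetes"

honest_score = keyword_score(honest_resume, preferred_keywords)
stuffed_score = keyword_score(stuffed_resume, preferred_keywords)
print(honest_score, stuffed_score)
```

The stuffed resume says nothing about actual experience, yet it outscores the honest one as soon as the scoring rule is known.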

We see this on a much larger scale in the SEO industry, which is valued at over $60 billion. Getting a high ranking in Google’s eyes these days isn’t just about having content worth reading; it is also a function of doing “good SEO,” hence the growing popularity of this industry.

SEO services have allowed organizations with hefty budgets to dominate the ranks as they can invest heavily in creating massive amounts of content, performing keyword optimization and placing links widely.

While some SEO practices are simple content optimization, others “trick” search algorithms into believing that a website is best in class, most authoritative, and will provide the best value to readers. This may or may not be true. Highly ranked companies may simply have invested more in SEO.

Bottom line: Gaming AI algorithms is one of the easiest ways to gain an unfair advantage in business, careers, influence, and politics. People who figure out how your algorithm “works” and makes decisions can abuse and game the system.

5. Widespread misinformation

As we rely more and more on answers and content generated by artificial intelligence systems, the “facts” produced by these systems can come to be treated as the ultimate truth. For example, in Google’s demo of its generative AI system, Bard, the system offered three bullet points in response to the question, “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old?” One of the points said the telescope “captured the first images of a planet outside our solar system.” However, astronomers later pointed out very publicly that this was not the case. Direct use of results from such systems can lead to widespread misinformation.

Unfortunately, without proper citations, it’s not easy to fact-check and decide which answers to trust and which not to. And as more people consume generated content without question, false information can spread on a much wider scale than seen with traditional search engines.

The same goes for content written by generative AI systems. Previously, human ghostwriters had to research information from reliable sources, organize it in a meaningful way, and cite the sources before publication. But now they can have entire articles written by an AI system. Unfortunately, if an AI-generated article is published without further fact-checking, misinformation will inevitably spread.

Bottom line: Overreliance on AI-generated content without an element of human fact-checking will have long-term effects on our worldview due to the non-factual information we consume over time.

Summary

In this article, we explore some potential ethical issues that may arise from artificial intelligence systems, particularly machine learning systems. We discussed how:

- AI systems can propagate racial, gender, age, and socioeconomic biases

- AI can violate copyright laws

- Artificial intelligence can be used in unethical ways to harm others

- AI can be gamed, making the playing field uneven for people and businesses

- Blindly trusting the answers of AI systems can lead to widespread misinformation

It is important to note that many of these problems were not created on purpose; they are side effects of how these systems were designed, deployed, and used in practice.

While we cannot completely eliminate these ethical problems, we can certainly take steps in the right direction to minimize the problems created by technology, and in this case artificial intelligence.

Now that we understand the ethical dilemmas of artificial intelligence, let us focus on developing strategies for more responsible development and deployment of AI systems. In an upcoming article, we’ll explore how businesses can lead the way in implementing AI responsibly rather than waiting for government regulation.

Keep learning and succeed with AI

- Join my AI Integrated Newsletter, which demystifies AI and teaches you how to successfully deploy AI to drive profitability and growth in your business.

- Read The Business Case for AI: learn the applications, strategies, and best practices to be successful with AI (select companies using the book: government agencies, automakers like Mercedes Benz, beverage manufacturers, and e-commerce companies like Flipkart).

- Work directly with me: improve your organization’s understanding of AI, accelerate AI strategy development, and get meaningful results from every AI initiative.
