
The Great GPU Squeeze continues to place a premium on Nvidia H100 GPUs. According to a recent Financial Times article, Nvidia expects to ship 550,000 of its latest H100 GPUs worldwide in 2023. The appetite for GPUs comes mainly from the generative AI boom, but the HPC market is also competing for these accelerators. It is not clear whether this figure includes the throttled China-specific A800 and H800 models.

The bulk of the GPUs will go to US technology companies, but the Financial Times notes that Saudi Arabia has purchased at least 3,000 Nvidia H100 GPUs and that the UAE has also bought thousands of Nvidia chips. The UAE has already developed its own open-source large language model, called Falcon, using 384 A100 GPUs at the state-owned Technology Innovation Institute in Masdar City, Abu Dhabi.

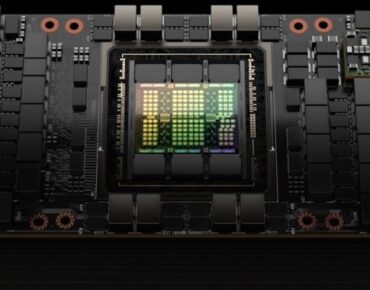

Nvidia CEO Jensen Huang calls the flagship H100 GPU (in its PCIe form: 14,592 CUDA cores, 80GB of HBM2e capacity, and a 5,120-bit memory bus) the first chip designed for generative AI, and it is priced at a massive $30,000 on average. In Saudi Arabia, King Abdullah University of Science and Technology (KAUST) is building its own GPU-based supercomputer, Shaheen III. It employs 700 Grace Hopper superchips, each combining a Grace CPU and an H100 Tensor Core GPU. Interestingly, the GPUs are being used to build an LLM developed by Chinese researchers who are unable to study or work in the US.

Meanwhile, generative AI (GAI) investment continues to fund GPU infrastructure purchases. As has been reported, funding to GAI start-ups in the first six months of 2023 was more than 5x the total for all of 2022, and the generative AI infrastructure category has drawn over 70% of that funding since Q3 2022.

Worth the Wait

The cost of an H100 varies depending on how it is packaged and, presumably, on how many units a buyer is able to purchase. The current (August 2023) retail price for an H100 PCIe card is around $30,000, and lead times vary as well. A back-of-the-envelope estimate (550,000 units × $30,000) puts 2023 market spending at $16.5 billion, a big chunk of which will go to Nvidia. In a recent social media post, Barron's senior writer Tae Kim estimated that it costs Nvidia $3,320 to make an H100. At the retail price, that is a markup of roughly 800% over the estimated manufacturing cost: an H100 card sells for about nine times what it costs to make.
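The arithmetic behind these figures can be checked in a few lines of Python. This is a minimal sketch that assumes every one of the 550,000 units sells at the ~$30,000 average retail price and that Tae Kim's $3,320 estimate is the full per-unit cost; real selling prices vary with packaging, volume, and channel. The function and constant names are illustrative only.

```python
# Back-of-the-envelope H100 economics using the figures quoted in the article.
# Assumptions (illustrative, not Nvidia data): every unit sells at the ~$30,000
# average retail price, and $3,320 is the full manufacturing cost per unit.

UNITS_2023 = 550_000       # H100 units Nvidia expects to ship in 2023 (per FT)
RETAIL_PRICE_USD = 30_000  # approximate average retail price per H100
UNIT_COST_USD = 3_320      # Tae Kim's estimate of Nvidia's cost per H100


def market_spend(units: int, price: float) -> float:
    """Total spending if every unit sells at the given price."""
    return float(units * price)


def markup_pct(price: float, cost: float) -> float:
    """Markup over cost, expressed as a percentage of cost."""
    return (price - cost) / cost * 100


def gross_margin_pct(price: float, cost: float) -> float:
    """Gross margin, expressed as a percentage of the selling price."""
    return (price - cost) / price * 100


if __name__ == "__main__":
    spend = market_spend(UNITS_2023, RETAIL_PRICE_USD)
    print(f"Market spend: ${spend / 1e9:.1f}B")  # Market spend: $16.5B
    print(f"Markup over cost: {markup_pct(RETAIL_PRICE_USD, UNIT_COST_USD):.0f}%")  # 804%
    print(f"Gross margin: {gross_margin_pct(RETAIL_PRICE_USD, UNIT_COST_USD):.1f}%")  # 88.9%
    print(f"Price/cost ratio: {RETAIL_PRICE_USD / UNIT_COST_USD:.1f}x")  # 9.0x
```

Markup (measured against cost) and gross margin (measured against price) answer different questions, which is one reason percentage-profit figures quoted for the H100 can differ so much.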

The Nvidia H100 PCIe GPU.

As has been widely reported, Nvidia's partner TSMC can barely meet the demand for GPUs. The GPUs require CoWoS (Chip on Wafer on Substrate), a more complex "2.5D" packaging technology from TSMC in which multiple active silicon dies, usually GPUs and HBM stacks, are integrated on a passive silicon interposer. CoWoS adds a multi-step, high-precision engineering process that slows the rate of GPU production.

This situation was confirmed by Charlie Boyle, VP and GM of Nvidia's DGX systems. Boyle states that the delays stem not from miscalculated demand or TSMC wafer-yield issues, but from CoWoS chip packaging.

HPC in the Great GPU Squeeze

As evidenced by reduced availability, huge purchase quantities, and rising prices, the Great GPU Squeeze has begun to affect the HPC market. Industry experts will discuss this topic at the HPC on Wall Street event on September 26-27, 2023.

This long-standing East Coast event features HPC maven Jay Boisseau as emcee. Jay is a former Associate Director for Scientific Computing at the San Diego Supercomputer Center, the founding director of the Texas Advanced Computing Center (TACC, the fastest academic supercomputing center in the US), and a former HPC & AI technology strategist at Dell.

Please join Jay and many global financial luminaries, HPC experts, firms, and leading technology companies investing in FinTech solutions. The event will feature four key sessions, including a panel on the GPU Squeeze. The sessions are expected to include:

- From Open Source to Third Party: Capturing the Early Potential of Gen AI for FinServ

- Quantum Computing Analyst Panel – One Year Later

- HPC in the Great GPU Squeeze

- The Data Management Challenges with Generative AI

Register now for HPC + AI Wall Street on September 26-27 at the InterContinental Times Square, NYC.

Editor's Note: Tabor Communications, publisher of HPCwire and EnterpriseAI, also produces the HPC + AI Wall Street event.

This article first appeared on sister site HPCwire.
