
While inflation is down from its high of 9% last June, American companies are still grappling with rising costs. Companies that can raise their prices to balance the ledger are doing so, but others are addressing inflation another way: by boosting employee productivity through conversational AI, or chatbots.

According to Moveworks CEO and co-founder Bhavin Shah, companies that have adopted conversational AI have raised employee productivity by roughly as much as total inflation over the past several years.

“Stanford and MIT did a study around workers using AI tools and they saw that it was able to increase workers’ productivity by 14%,” Shah said during his opening remarks for Moveworks Live last week. “Why is that important? Because that’s perhaps about the amount of inflation that we saw over the last three to five years.”

The paper Shah referenced, titled “Generative AI at Work,” concludes that customer support agents were able to get 13.8% more work done by using a conversational assistant based on OpenAI’s Generative Pre-trained Transformer (GPT) model.

Specifically, the AI assistant did three things: it reduced the time an agent needed to handle an individual chat; it increased the number of chats an agent could handle per hour; and it produced "a small increase in the share of chats that are successfully resolved," the researchers wrote.

Interestingly, the researchers found that novice workers benefited from AI more than experienced agents did. Agents with two months of training and an AI assistant performed at the same level as agents with more than six months of training but no assistant.

Moveworks CEO and co-founder Bhavin Shah

Shah co-founded Moveworks in 2016 to help companies leverage AI to build conversational assistants. That was a bad year to start such a company, Shah acknowledged. “In 2016, chatbots were declared dead,” he said. “Most people were skeptical.”

However, something unexpected happened a year later: Google released its Transformer paper, which kicked off the current trend of generative AI.

“What it did was create a new architecture allowing us to train new models in a parallelized fashion,” Shah said. “It shifted from being memory-bound to GPU- and CPU-bound, which allowed us to do greater levels of parallelization, but also allowed us to build bigger models with larger training sets.”

As Moveworks used various neural networks based on Google's new Transformer architecture to build custom conversational agent applications for its customers, a second unplanned world event kicked AI into a higher gear: Covid-19.

When companies went into lockdown, they searched for new work modalities, and found chatbots were much better than they remembered. When lockdowns began to lift a year later and people began migrating between jobs in the Great Resignation and the Great Reshuffle, conversational AI was already a topic of discussion in the boardroom.

“Companies created more and more folks with titles around employee experience,” Shah said. “And they began to realize that employee productivity and employee experience were actually two sides of the same coin.”

Bolstered by the Transformer breakthrough, generative AI models for images and text made rapid technological progress over this period. Hugging Face alone has over 13,000 publicly available models, Shah pointed out. "And this is arguably before the Cambrian Explosion that we're all now witnessing in terms of large language models around the world."

Moveworks helps companies develop enterprise copilots

The end of 2022 brought the next inflection point: the introduction of ChatGPT. Now, instead of just a handful of AI researchers talking about the benefits of neural networks, millions of consumers were using LLMs on a daily basis.

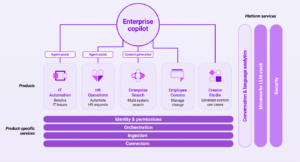

While ChatGPT is fun to play with, Moveworks uses it and other LLMs to help build conversational assistants, or "copilots," that can boost employee productivity across a range of use cases. We are only at the beginning of this workplace revolution, and Moveworks hopes to enable it with its new Creator Studio, which the company launched just three weeks ago.

“By connecting people with systems at the speed of conversation, we’re going to make it easier than ever for people to do their jobs,” Shah said. “Soon, very soon, every application will have its own copilot.”

AI-based copilots are already popping up like mushrooms after a rain. “It seems like I can’t go two days without another announcement,” Shah quipped. But that rapid spread of AI copilots also presents an opportunity for an enterprise copilot that can work across different applications, he said.

Much more work is needed to fully realize the potential of language interfaces in the enterprise, however. For instance, if an employee wants to know what happened to his bonus, it's unclear which application's copilot he should ask. Is it the one for the HR app, the applicant tracking system, the finance department, or the payroll system?

“And that is where there begins to be a new opportunity: an enterprise-wide copilot…where your employees can connect to every business system, to every business cloud, through a single place,” he said.

Inflation seems to be settling in at 5% annually for the long haul, which gives executives plenty of reason to look to AI for a productivity boost. But if the dream of an enterprise-wide AI system comes to fruition in a repeatable and automatable way, 5% would be a rounding error.

This story originally appeared on sister site Datanami.
