
Automatic speech recognition (ASR) is a well-established technology that is widely adopted for applications such as conference calls, streamed video transcription, and voice commands. While the challenges for this technology center on noisy audio inputs, the visual stream in multimodal videos (e.g., TV, online edited videos) can provide strong cues for improving the robustness of ASR systems. This is called audiovisual ASR (AV-ASR).

Although lip movement can provide strong signals for speech recognition and is the most common area of focus for AV-ASR, the face is often not directly visible in videos in the wild (e.g., due to egocentric viewpoints, face coverings, and low resolution). A new, emerging area of research is therefore unconstrained AV-ASR (e.g., AVATAR), which investigates the contribution of the entire visual frame, not just the mouth region.

However, building audiovisual datasets for training AV-ASR models is difficult. Datasets such as How2 and VisSpeech have been created from instructional videos online, but they are small in size. In contrast, the models themselves are typically large and consist of both visual and audio encoders, so they tend to overfit on these small datasets. Nonetheless, a number of large-scale audio-only models have recently been released that are heavily optimized through large-scale training on massive audio-only data obtained from audiobooks, such as LibriLight and LibriSpeech. These models contain billions of parameters, are readily available, and show strong generalization across domains.

Considering the above challenges, in “AVFormer: Injecting Vision into Frozen Speech Models for Zero-Shot AV-ASR”, we present a simple method for augmenting existing large-scale audio-only models with visual information while at the same time performing lightweight domain adaptation. AVFormer injects visual embeddings into a frozen ASR model (similar to how Flamingo injects visual information into large language models for vision-text tasks) using lightweight trainable adapters that can be trained on a small amount of weakly labeled video data with minimal additional training time and parameters. We also introduce a simple curriculum scheme during training, which we show is crucial for the model to jointly process audio and visual information effectively. The resulting AVFormer model achieves state-of-the-art zero-shot performance on three different AV-ASR benchmarks (How2, VisSpeech, and Ego4D), while crucially preserving decent performance on traditional audio-only speech recognition benchmarks (i.e., LibriSpeech).


| Unconstrained audiovisual speech recognition. We inject vision into a frozen speech model (BEST-RQ, in gray) for zero-shot audiovisual ASR via lightweight modules to create a parameter- and data-efficient model called AVFormer (blue). Visual context can provide helpful cues for robust speech recognition, especially when the audio signal is noisy (a loaf of bread visible in the frame helps correct the audio-only error “clove” in the generated transcript). |

Vision injection using lightweight modules

Our goal is to add visual understanding capabilities to an existing audio-only ASR model while maintaining its generalization performance across domains (both AV and audio-only domains).

To achieve this, we augment an existing state-of-the-art ASR model (BEST-RQ) with the following two components: (i) a linear visual projector and (ii) lightweight adapters. The former projects visual features into the audio token embedding space. This allows the model to properly connect separately pre-trained visual features and audio input token representations. The latter then minimally modify the model to add understanding of multimodal inputs from videos. We then train these additional modules on unlabeled web videos from the HowTo100M dataset, using the outputs of an ASR model as pseudo ground truth, while keeping the rest of the BEST-RQ model frozen. Such lightweight modules enable data efficiency and strong generalization of performance.
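As a rough illustration of the two added components, the following pure-Python sketch shows a linear projector that maps one visual feature vector into a few token vectors of the audio embedding width, followed by a residual bottleneck adapter applied to each token. It is not the AVFormer implementation: the class names, the toy dimensions, the choice to prepend visual tokens to the audio tokens, and the zero initialization of the adapter's up-projection are all assumptions made for this example.

```python
import random


def linear(x, weight, bias):
    """Compute y = W x + b for one vector; `weight` is a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def init_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class VisualProjector:
    """Linear map from one visual feature to `num_tokens` audio-width tokens."""

    def __init__(self, visual_dim, audio_dim, num_tokens, rng):
        self.audio_dim = audio_dim
        self.num_tokens = num_tokens
        self.weight = init_matrix(audio_dim * num_tokens, visual_dim, rng)
        self.bias = [0.0] * (audio_dim * num_tokens)

    def __call__(self, visual_feature):
        flat = linear(visual_feature, self.weight, self.bias)
        d = self.audio_dim
        return [flat[i * d:(i + 1) * d] for i in range(self.num_tokens)]


class BottleneckAdapter:
    """Residual block: down-project, ReLU, up-project, add back to the input."""

    def __init__(self, dim, bottleneck_dim, rng):
        self.down_w = init_matrix(bottleneck_dim, dim, rng)
        self.down_b = [0.0] * bottleneck_dim
        # Assumption: a zero-initialized up-projection makes the adapter start
        # as an identity map, so the frozen model's behavior is unchanged at step 0.
        self.up_w = [[0.0] * bottleneck_dim for _ in range(dim)]
        self.up_b = [0.0] * dim

    def __call__(self, token):
        hidden = relu(linear(token, self.down_w, self.down_b))
        delta = linear(hidden, self.up_w, self.up_b)
        return [t + d for t, d in zip(token, delta)]


rng = random.Random(0)
visual_dim, audio_dim = 8, 4  # toy sizes, far smaller than any real encoder
projector = VisualProjector(visual_dim, audio_dim, num_tokens=4, rng=rng)
adapter = BottleneckAdapter(audio_dim, bottleneck_dim=2, rng=rng)

# Random stand-ins for the outputs of the frozen visual and audio front ends.
visual_feature = [rng.random() for _ in range(visual_dim)]
audio_tokens = [[rng.random() for _ in range(audio_dim)] for _ in range(6)]

visual_tokens = projector(visual_feature)
sequence = visual_tokens + audio_tokens  # assumption: visual tokens come first
adapted = [adapter(token) for token in sequence]
print(len(visual_tokens), len(sequence), len(adapted[0]))
```

Only the projector and adapter weights would be trainable in such a setup; everything standing in for the frozen encoders is treated as fixed input here.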

We evaluate our augmented model on AV-ASR benchmarks in a zero-shot setting, where the model is never trained on a manually annotated AV-ASR dataset.

Curriculum learning for vision injection

After initial evaluation, we found empirically that with a naive single round of joint training, the model struggles to learn both the adapters and the visual projector at once. To mitigate this issue, we introduce a two-phase curriculum learning strategy that decouples these two factors (domain adaptation and visual feature integration) and trains the network sequentially. In the first phase, the adapter parameters are optimized without feeding any visual tokens. Once the adapters are trained, we add the visual tokens and train the visual projection layers alone in the second phase, while the trained adapters are kept frozen.

The first phase focuses on audio domain adaptation. By the second phase, the adapters are completely frozen and the visual projector simply has to learn to generate visual prompts that project the visual tokens into the audio space. In this way, our curriculum learning strategy allows the model to incorporate visual inputs as well as adapt to new audio domains in AV-ASR benchmarks. We apply each phase only once, because iteratively alternating between the phases leads to performance degradation.
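The two-phase schedule described above can be written down as a small configuration: which parameter groups are trainable in each phase, and whether visual tokens are fed to the model. The sketch below is only an illustration under assumptions; the group names (`asr_backbone`, `visual_encoder`, `adapters`, `visual_projector`) and the `train_phase` callback are hypothetical and do not come from the AVFormer code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    name: str
    use_visual_tokens: bool
    trainable: frozenset


# Hypothetical parameter-group names, chosen for this example only.
ALL_GROUPS = ("asr_backbone", "visual_encoder", "adapters", "visual_projector")

CURRICULUM = (
    # Phase 1: adapt to the audio domain; no visual tokens are fed.
    Phase("audio domain adaptation", False, frozenset({"adapters"})),
    # Phase 2: adapters are now frozen; only the visual projector is trained.
    Phase("visual feature integration", True, frozenset({"visual_projector"})),
)


def frozen_groups(phase):
    """Return the parameter groups that must stay frozen during `phase`."""
    return [group for group in ALL_GROUPS if group not in phase.trainable]


def run_curriculum(train_phase):
    """Run each phase exactly once, in order (the phases are not alternated)."""
    for phase in CURRICULUM:
        train_phase(phase)


def describe(phase):
    # Stand-in for a real training loop: just report the configuration.
    print(f"{phase.name}: visual tokens={phase.use_visual_tokens}, "
          f"train={sorted(phase.trainable)}, frozen={frozen_groups(phase)}")


run_curriculum(describe)
```

Keeping the schedule as data makes it easy to check that the ASR backbone and visual encoder never appear in a trainable set.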


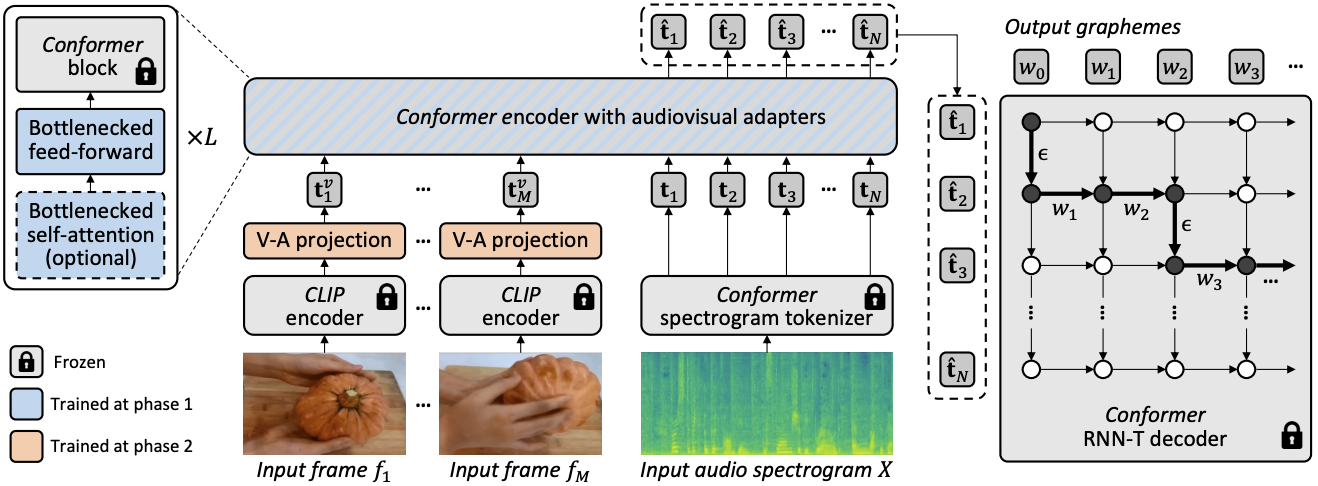

| Overall architecture and training procedure for AVFormer. The architecture consists of a frozen Conformer encoder-decoder model and a frozen CLIP encoder (frozen layers shown in gray with a lock symbol), together with two lightweight trainable modules, (i) a visual projection layer (orange) and (ii) bottleneck adapters (blue), to enable multimodal domain adaptation. We propose a two-phase curriculum learning strategy: the adapters (blue) are first trained without any visual tokens, after which the visual projection layer (orange) is tuned while all other parts are kept frozen. |

The plots below show that without curriculum learning, our AV-ASR model performs worse than the audio-only baseline across all datasets, with the gap increasing as more visual tokens are added. In contrast, when the proposed two-phase curriculum is applied, our AV-ASR model performs significantly better than the audio-only baseline.


| Effects of curriculum learning. The red and blue lines are for audiovisual models and are shown on three datasets in the zero-shot setting (lower WER % is better). Using the curriculum helps on all three datasets (for How2 (a) and Ego4D (c) it is crucial for outperforming audio-only performance). Performance improves up to 4 visual tokens, at which point it saturates. |

Results in zero-shot AV-ASR

We compare AVFormer with BEST-RQ, the audio-only version of our model, and AVATAR, the state of the art in AV-ASR, for zero-shot performance on three AV-ASR benchmarks: How2, VisSpeech, and Ego4D. AVFormer outperforms AVATAR and BEST-RQ on all three, even when AVATAR and BEST-RQ are trained on LibriSpeech and the full HowTo100M set. This is notable because for BEST-RQ this involves training 600M parameters, while AVFormer trains only 4M parameters and therefore requires only a small fraction of the training dataset (5% of HowTo100M). We also evaluate performance on LibriSpeech, which is audio-only, and AVFormer outperforms both baselines.
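To put the quoted figures in proportion, the short calculation below works out the share of parameters that are trained, using only the 4M and 600M counts and the 5% data fraction stated above. The variable names are illustrative.

```python
# Figures quoted in the text: trainable parameters and training-data fraction.
trained_params_avformer = 4_000_000
trained_params_best_rq = 600_000_000
data_fraction_avformer = 0.05  # 5% of HowTo100M

param_fraction = trained_params_avformer / trained_params_best_rq
reduction_factor = trained_params_best_rq / trained_params_avformer

print(f"Share of parameters trained: {param_fraction:.2%}")
print(f"Reduction in trained parameters: {reduction_factor:.0f}x")
print(f"Share of HowTo100M used: {data_fraction_avformer:.0%}")
```

In other words, under one percent of the parameter count is updated, on one twentieth of the data.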


| Comparison with state-of-the-art methods for zero-shot performance across different AV-ASR datasets. We also show performance on LibriSpeech, which is audio-only. Results are reported as WER % (lower is better). AVATAR and BEST-RQ are fine-tuned end to end (all parameters) on HowTo100M, whereas AVFormer works effectively even with 5% of the dataset thanks to its small set of fine-tuned parameters. |
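For readers unfamiliar with the metric, word error rate (WER) is conventionally the word-level edit distance between the reference transcript and the model output (substitutions, deletions, and insertions), divided by the number of reference words. The sketch below is a minimal version of that standard definition, not the evaluation script used for the results above; the example sentences are invented for illustration, and real evaluations usually normalize text (casing, punctuation) first.

```python
def word_error_rate(reference, hypothesis):
    """Return WER in percent: word-level edit distance / reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the ref words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return 100.0 * prev[-1] / len(ref)


# Invented example: one substituted word out of five reference words.
print(word_error_rate("slice the loaf of bread", "slice the clove of bread"))
```

Because insertions count as errors, WER can exceed 100% when the hypothesis is much longer than the reference.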

Conclusion

We present AVFormer, a lightweight method for adapting existing, frozen state-of-the-art ASR models for AV-ASR. Our approach is practical and efficient, and achieves impressive zero-shot performance. As ASR models grow larger and larger, tuning the entire parameter set of pre-trained models becomes impractical (even more so for different domains). Our method seamlessly allows both domain transfer and visual input mixing in the same parameter-efficient model.

Acknowledgments

This research was conducted by Paul Hongsuk Seo, Arsha Nagrani, and Cordelia Schmid.
