Large-scale models, such as T5, GPT-3, PaLM, Flamingo, and PaLI, have demonstrated the ability to store substantial amounts of knowledge when scaled to tens of billions of parameters and trained on large text and image datasets. These models achieve state-of-the-art performance on tasks such as image captioning, visual question answering, and open-vocabulary recognition. Despite such advances, these models require enormous amounts of training data and end up with huge numbers of parameters (in many cases billions), leading to significant computational demands. Moreover, the data used to train these models can become outdated, requiring retraining every time the world's knowledge is updated. For example, a model trained just two years ago may provide outdated information about the current president of the United States.

Researchers in natural language processing (RETRO, REALM) and computer vision (KAT) have attempted to address these challenges using retrieval-augmented models. Typically, these models use a backbone that can process one modality at a time, e.g., text only or images only, to encode and retrieve information from a knowledge corpus. However, these retrieval-augmented models cannot use all available modalities in the query and the knowledge corpora, and so may not find the information that is most helpful for generating the model's output.

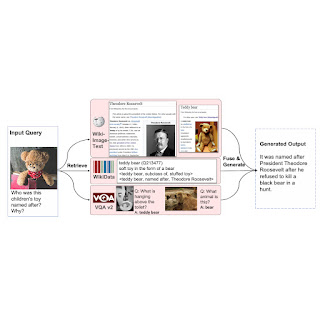

To address these issues, in “REVEAL: Retrieval-Augmented Visual-Language Pre-Training with Multi-Source Multimodal Knowledge Memory,” to appear at CVPR 2023, we present a visual-language model that learns to use a multi-source multimodal “memory” to answer knowledge-intensive queries. REVEAL uses neural representation learning to encode and convert diverse knowledge sources into a memory structure consisting of key-value pairs. Keys serve as indices for the memory items, and the corresponding values store pertinent information about those items. During training, REVEAL learns the key embeddings, the value tokens, and the ability to retrieve information from this memory to address knowledge-intensive queries. This approach allows the model parameters to focus on reasoning about the query rather than being dedicated to memorization.

We augment a visual-language model with the ability to retrieve multiple knowledge entries from a diverse set of knowledge sources, which helps generation.

Memory construction from multimodal knowledge corpora

Our approach is similar to REALM in that we precompute key and value embeddings of knowledge items from different sources and index them in a unified knowledge memory, where each knowledge item is encoded as a key-value pair. Each key is a d-dimensional embedding vector, and each value is a sequence of token embeddings that represents the knowledge item in more detail. Unlike previous work, REVEAL uses a diverse set of multimodal knowledge corpora, including the WikiData knowledge graph, Wikipedia passages and images, web image-text pairs, and visual question answering data. Each knowledge item can be text, an image, a combination of both (e.g., pages in Wikipedia), or a relationship or attribute from a knowledge graph (e.g., Barack Obama is 6′ 2″ tall). During training, we continuously recompute the memory key and value embeddings as the model parameters are updated. We update the memory asynchronously every thousand training steps.
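As a rough illustration of the key-value layout described above, the sketch below builds a small unified memory from several corpora. It is not the REVEAL implementation: `toy_encode` is a hypothetical stand-in for the learned encoder, the corpus names and items are made up, and `needs_refresh` only mirrors the "every thousand training steps" schedule mentioned in the text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vector = List[float]


@dataclass
class MemoryEntry:
    source: str          # corpus the item came from, e.g. "wikidata"
    item: str            # raw knowledge item (plain text here, for simplicity)
    key: Vector          # d-dimensional embedding used to index the entry
    value: List[Vector]  # sequence of token embeddings describing the item


def toy_encode(item: str, dim: int = 4) -> Tuple[Vector, List[Vector]]:
    """Hypothetical stand-in for the learned encoder: one vector per word."""
    value = [[float((ord(word[0]) + j) % 7) for j in range(dim)]
             for word in item.split()]
    # Mean-pool the token vectors into a single key vector.
    key = [sum(column) / len(value) for column in zip(*value)]
    return key, value


def build_memory(
    corpora: Dict[str, List[str]],
    encode: Callable[[str], Tuple[Vector, List[Vector]]],
) -> List[MemoryEntry]:
    """Encode every item of every corpus into one flat key-value memory."""
    memory: List[MemoryEntry] = []
    for source, items in corpora.items():
        for item in items:
            key, value = encode(item)
            memory.append(MemoryEntry(source, item, key, value))
    return memory


def needs_refresh(step: int, interval: int = 1000) -> bool:
    """True on the steps where the memory would be re-encoded."""
    return step > 0 and step % interval == 0


corpora = {
    "wikidata": ["Barack Obama height 6ft2"],
    "wikipedia": ["A passage about Barack Obama"],
}
memory = build_memory(corpora, toy_encode)
print(len(memory), memory[0].source, len(memory[0].key))
```

In a real system the refresh would re-run the current encoder over the corpora in the background; here it is reduced to a step check.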

Memory scaling using compression

A naive solution for encoding a memory value is to keep the whole sequence of tokens for each knowledge item. The model could then fuse the input query and the top-K retrieved memory values by concatenating all their tokens and feeding them into a transformer encoder-decoder pipeline. This approach has two issues: (1) storing hundreds of millions of knowledge items in memory is impractical if each memory value consists of hundreds of tokens, and (2) the transformer encoder's self-attention has quadratic complexity with respect to the total number of tokens, which grows with K. Therefore, we propose to use the Perceiver architecture to encode and compress knowledge items. The Perceiver model uses a transformer decoder to compress the full token sequence to an arbitrary length. This lets us retrieve the top-K memory entries for K as large as a hundred.
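The compression idea can be sketched as a single cross-attention step in which a small set of latent queries attends over the full token sequence, so the output length equals the number of latents rather than the number of tokens. This is a simplified illustration, not the Perceiver architecture itself: it omits the learned projections, layers, and normalization, and the token counts passed to `attention_cost` are made-up numbers chosen only to show the quadratic effect.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def compress(tokens: Matrix, latents: Matrix) -> Matrix:
    """Cross-attend from c latent queries to n token embeddings.

    Returns c vectors, however long the input sequence is.
    """
    d = len(latents[0])
    compressed: Matrix = []
    for query in latents:
        scores = [sum(q * t for q, t in zip(query, token)) / math.sqrt(d)
                  for token in tokens]
        weights = softmax(scores)
        compressed.append([sum(w * token[j] for w, token in zip(weights, tokens))
                           for j in range(d)])
    return compressed


def attention_cost(query_tokens: int, k: int, tokens_per_value: int) -> int:
    """Number of pairwise interactions in self-attention over the fused input."""
    total = query_tokens + k * tokens_per_value
    return total * total


tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
latents = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]   # hypothetical latent queries
short = compress(tokens, latents)
print(len(tokens), "tokens ->", len(short), "compressed vectors")

# Illustrative sizes only: 100 retrieved values, 256 vs. 32 tokens per value.
print(attention_cost(64, 100, 256), "vs", attention_cost(64, 100, 32))
```

Shrinking each value from hundreds of tokens to a few dozen reduces both the memory footprint per item and the attention cost of fusing many retrieved items at once.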

The following figure illustrates the procedure for constructing the memory key-value pairs. Each knowledge item is processed by a multimodal visual-language encoder, resulting in a sequence of image and text tokens. The key head then transforms these tokens into a compact embedding vector. The value head (a Perceiver) condenses these tokens into fewer ones, retaining the pertinent information about the knowledge item.

We encode knowledge entries from different corpora into unified key-value embedding pairs, where the keys are used to index the memory and the values contain information about the entries.

Large-scale pre-training on image-text pairs

To train the REVEAL model, we start with a large-scale corpus collected from the public web with three billion image alt-text caption pairs, introduced in LiT. Since the dataset is noisy, we add a filter to remove data points with captions shorter than 50 characters, yielding approximately 1.3 billion image-caption pairs. We then combine these pairs with the text generation objective used in SimVLM to train REVEAL. Given an image-text example, we randomly sample a prefix containing the first few tokens of the text. We feed the text prefix and the image to the model as input, with the goal of generating the rest of the text as output. The training objective is to condition on the prefix and autoregressively generate the remaining text sequence.
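The data preparation described above can be sketched in a few lines. This is only an illustration under stated assumptions: whitespace splitting stands in for the real tokenizer, the function names are hypothetical, and the image is left out since only the text side is being split.

```python
import random
from typing import List, Tuple

MIN_CAPTION_CHARS = 50  # threshold stated in the text


def keep_pair(caption: str) -> bool:
    """Drop noisy pairs whose caption is shorter than 50 characters."""
    return len(caption) >= MIN_CAPTION_CHARS


def split_prefix(tokens: List[str], rng: random.Random) -> Tuple[List[str], List[str]]:
    """Randomly split a caption into (prefix, target).

    The prefix is fed to the model together with the image; the target is
    what the model must generate autoregressively.
    """
    if len(tokens) < 2:
        raise ValueError("need at least two tokens to form a prefix and a target")
    cut = rng.randint(1, len(tokens) - 1)  # both sides stay non-empty
    return tokens[:cut], tokens[cut:]


caption = "a brown dog chasing a red ball across a sunny park lawn"
if keep_pair(caption):
    prefix, target = split_prefix(caption.split(), random.Random(0))
    print("prefix:", prefix)
    print("target:", target)
```

Because the cut point is resampled for each example, the model sees the same caption with different amounts of context across epochs.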

To train all components of the REVEAL model end-to-end, we need to warm-start the model to a good state (setting initial values for the model parameters). Otherwise, if we started with random weights (a cold start), the retriever would often return irrelevant memory items that would never generate useful training signals. To avoid this cold-start problem, we construct an initial retrieval dataset with pseudo-ground-truth knowledge to give the pre-training a reasonable head start.

We create a modified version of the WIT dataset for this purpose. Each image-caption pair in WIT also comes with a corresponding Wikipedia passage (the words surrounding the text). We pair the surrounding passage with the query image and use it as the pseudo-ground-truth knowledge that corresponds to the input query. The passage provides rich information about the image and caption, which is useful for initializing the model.

To prevent the model from relying on low-level image features for retrieval, we apply random data augmentation to the input query image. Given this modified dataset containing pseudo-ground-truth retrieval targets, we train the query and memory key embeddings to warm-start the model.

REVEAL workflow

The overall REVEAL workflow consists of four main steps. First, REVEAL encodes the multimodal input into a sequence of token embeddings along with a condensed query embedding. Then, the model translates each multi-source knowledge entry into a unified pair of key and value embeddings, where the key is used for memory indexing and the value contains the full information about the entry. Next, REVEAL retrieves the top-K most related knowledge pieces from the multiple knowledge sources, returns the pre-processed value embeddings stored in memory, and re-encodes the values. Finally, REVEAL fuses the top-K knowledge pieces through an attentive knowledge fusion layer by injecting the retrieval score (the dot product between the query and key embeddings) as a prior during the attention computation. This structure makes it possible to train the memory, encoder, retriever, and generator concurrently in an end-to-end fashion.

General workflow of REVEAL.
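The retrieval and fusion steps of the workflow can be sketched as follows. Retrieval scores each memory key by its dot product with the query embedding and keeps the top K. For fusion, the sketch adds the log of the softmax-normalized retrieval scores to the attention logits, which is one plausible reading of "injecting the retrieval score as a prior" and is an assumption rather than the published formulation. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def log_softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    log_total = m + math.log(sum(math.exp(x - m) for x in xs))
    return [x - log_total for x in xs]


def retrieve_top_k(query: Vector, keys: List[Vector], k: int) -> List[Tuple[int, float]]:
    """Return (index, score) for the k keys with the largest dot product."""
    scores = [dot(query, key) for key in keys]
    best = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    return [(i, scores[i]) for i in best]


def fuse(query_token: Vector, values: List[List[Vector]], scores: List[float]) -> Vector:
    """Attend from one query token over all retrieved value tokens.

    Each value token's attention logit is shifted by the log prior of the
    memory entry it belongs to (assumed form of the retrieval prior).
    """
    d = len(query_token)
    log_prior = log_softmax(scores)
    logits: List[float] = []
    tokens: List[Vector] = []
    for entry_log_prior, value in zip(log_prior, values):
        for token in value:
            logits.append(dot(query_token, token) / math.sqrt(d) + entry_log_prior)
            tokens.append(token)
    weights = [math.exp(x) for x in log_softmax(logits)]
    return [sum(w * t[j] for w, t in zip(weights, tokens)) for j in range(d)]


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
hits = retrieve_top_k([1.0, 0.5], keys, k=2)
values = [[[1.0, 0.0]], [[0.0, 1.0]]]          # one value token per retrieved entry
fused = fuse([0.0, 0.0], values, [score for _, score in hits])
print(hits, fused)
```

Because the retrieval scores enter the attention weights directly, gradients from the generation loss can flow back into the query and key embeddings, which is what makes joint training of the retriever and generator possible in this kind of design.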

Results

We evaluate REVEAL on knowledge-based visual question answering tasks using the OK-VQA and A-OKVQA datasets. We fine-tune our pre-trained model on the VQA tasks using the same generative objective, where the model takes an image-question pair as input and generates a text answer as output. We show that REVEAL achieves better results on the A-OKVQA dataset than earlier attempts that incorporate fixed knowledge or works that use large language models (e.g., GPT-3) as an implicit source of knowledge.

Visual question answering results on A-OKVQA. REVEAL achieves higher accuracy than previous works, including ViLBERT, LXMERT, ClipCap, KRISP, and GPV-2.

We also evaluate REVEAL on image captioning benchmarks using the MSCOCO and NoCaps datasets. We directly fine-tune REVEAL on the MSCOCO training split with the cross-entropy generative objective. We measure our performance on the MSCOCO test split and the NoCaps evaluation set using the CIDEr metric, which is based on the idea that good captions should be similar to reference captions in terms of word choice, grammar, meaning, and content. Our results on the MSCOCO captioning and NoCaps datasets are shown below.

Image captioning results on MSCOCO and NoCaps using the CIDEr metric. REVEAL achieves a higher score than Flamingo, VinVL, SimVLM, and CoCa.

Below we show some qualitative examples of how REVEAL finds relevant documents to answer visual queries.

REVEAL can use knowledge from a variety of sources to correctly answer a question.

Conclusion

We present an end-to-end retrieval-augmented visual-language (REVEAL) model, which contains a knowledge retriever that learns to use a diverse set of knowledge sources across different modalities. We train REVEAL on a massive image-text corpus with four diverse knowledge corpora and achieve state-of-the-art results on knowledge-intensive visual question answering and image captioning tasks. In the future, we would like to explore this model's capacity for attribution and apply it to a broader class of multimodal tasks.

Acknowledgments

This research was conducted by Ziniu Hu, Ahmet Iscen, Chen Sun, Zirui Wang, Kai-Wei Chang, Yizhou Sun, Cordelia Schmid, David A. Ross, and Alireza Fathi.
