[ad_1]

Authors: Chris Mauk, Jonas Muller

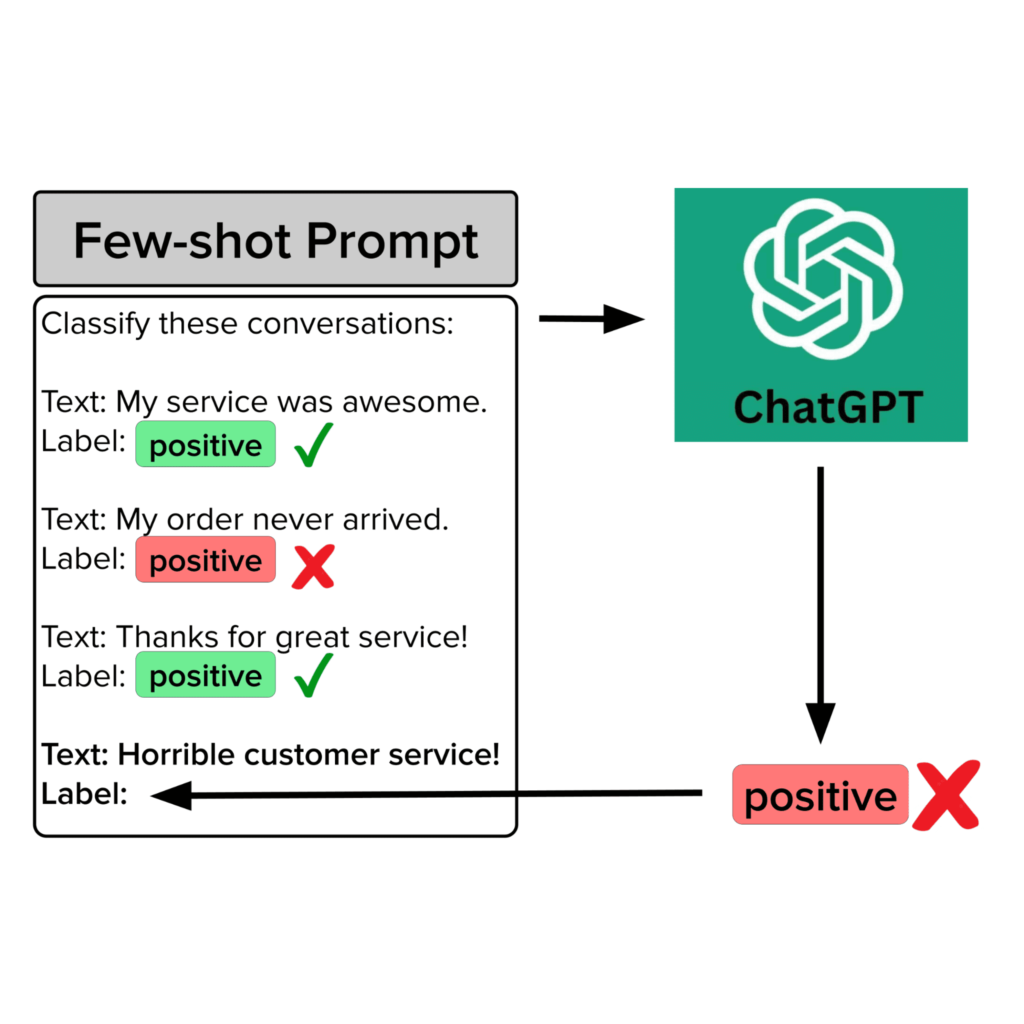

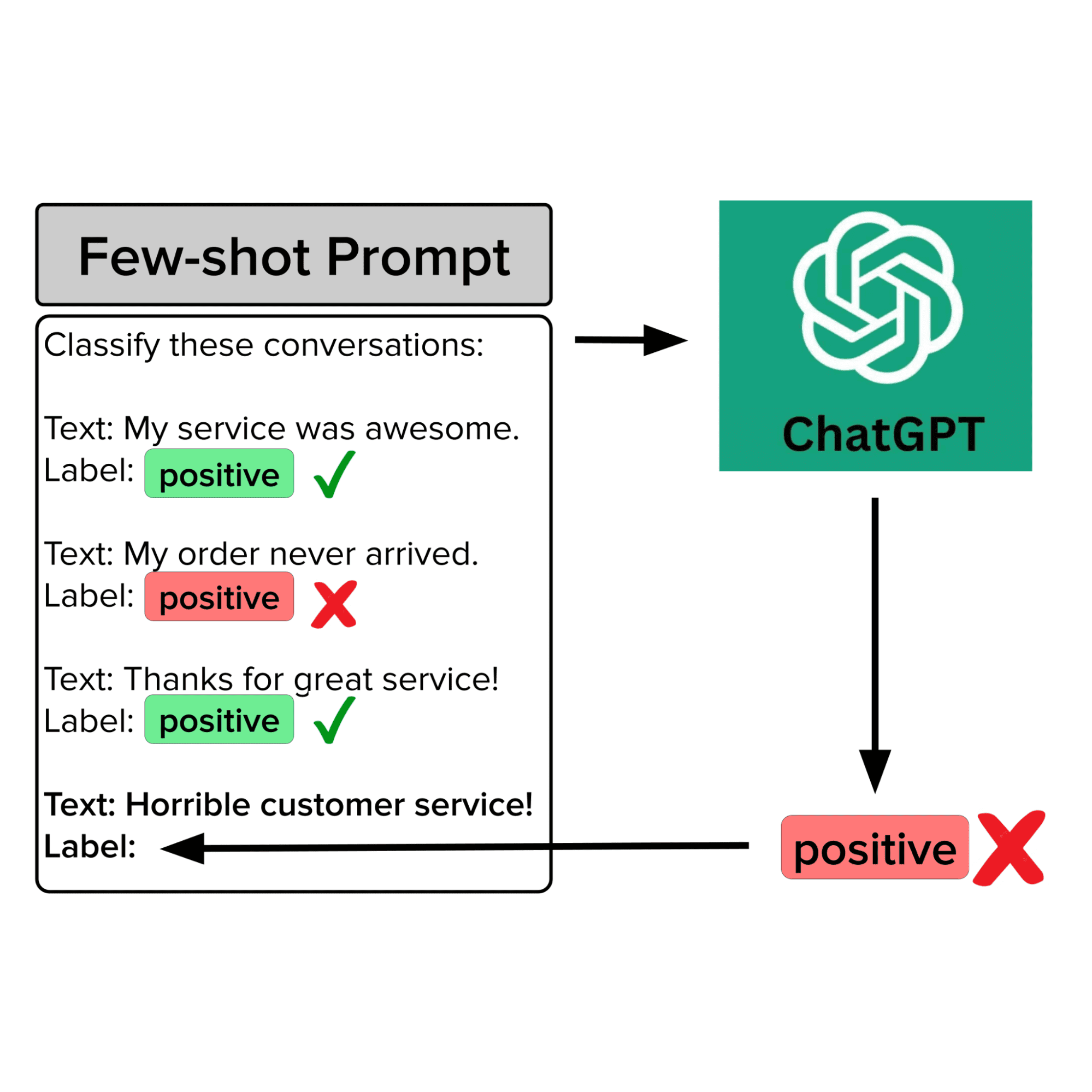

In this article, we propose the Davinci Large Language Model OpenAI (a model based on GPT-3/ChatGPT) for multiple hit requests to differentiate customer service requests in a large bank. Following typical practice, we collect some examples to include in the query template from an available dataset of human-labeled query examples. However, the resulting LLM predictions are unreliable – Closer inspection reveals that this is because real-world data is messy and error-prone. The performance of LLM in this customer service intention classification task is only slightly improved by manually modifying the fast template to reduce potentially noisy data. LLM predictions become significantly more accurate if we instead use data-driven AI algorithms such as Confident learning to provide Only high quality A few sample shots are selected for inclusion in a quick template.

Let’s discuss how we can compile high-quality multiple-frame examples to force LLMs to produce the most reliable predictions. The need to provide high-quality examples in a few hit requests may seem obvious, but many engineers are unaware that there are algorithms/software that can help you do this more systematically (in fact an entire scientific discipline AI in the data center). Such algorithmic data curation has many advantages, namely: fully automated, systematic and widely applicable to general LLM applications beyond intent classification.

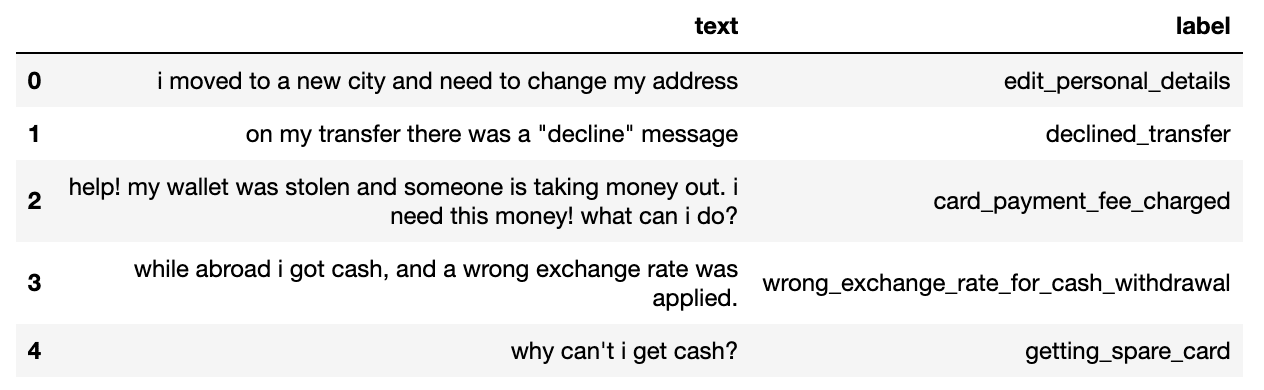

Banking Intent Data Set

This article examines the 50 class variants of the Banking-77 dataset, which contain online banking requirements with their respective objectives ( label shown below). We evaluate the models that predict this label using a fixed test data set containing ~500 phrases, and we have a pool of ~1000 labeled phrases that we consider as candidates for inclusion in several of our examples.

You can download some sample frames and test suite candidate sets here and here. Here is a notebook that you can use to reproduce the results shown in this article.

Call for a few shots

Multiple shot requests (also known as contextual learning) is an NLP technique that allows pre-trained foundation models to perform complex tasks without any explicit training (i.e., model parameter updates). In a multi-hit query, we provide the model with a limited number of input-output pairs as part of the query template included in the query, which is used to instruct the model on how to process a particular input. The additional context provided by a quick template helps the model better infer what type of output is desired. For example, given the input: “Is San Francisco in California?LLM will better understand what type of output is desired if this query is augmented with a fixed template so that the new query looks like this:

Text: Is Boston in Massachusetts?

Etiquette: Yes

Text: Is Denver in California?

Etiquette: No

Text: Is San Francisco in California?

label:

Multiple shot queries are especially useful in text classification scenarios where your classes are domain specific (as is common in customer service applications in various businesses).

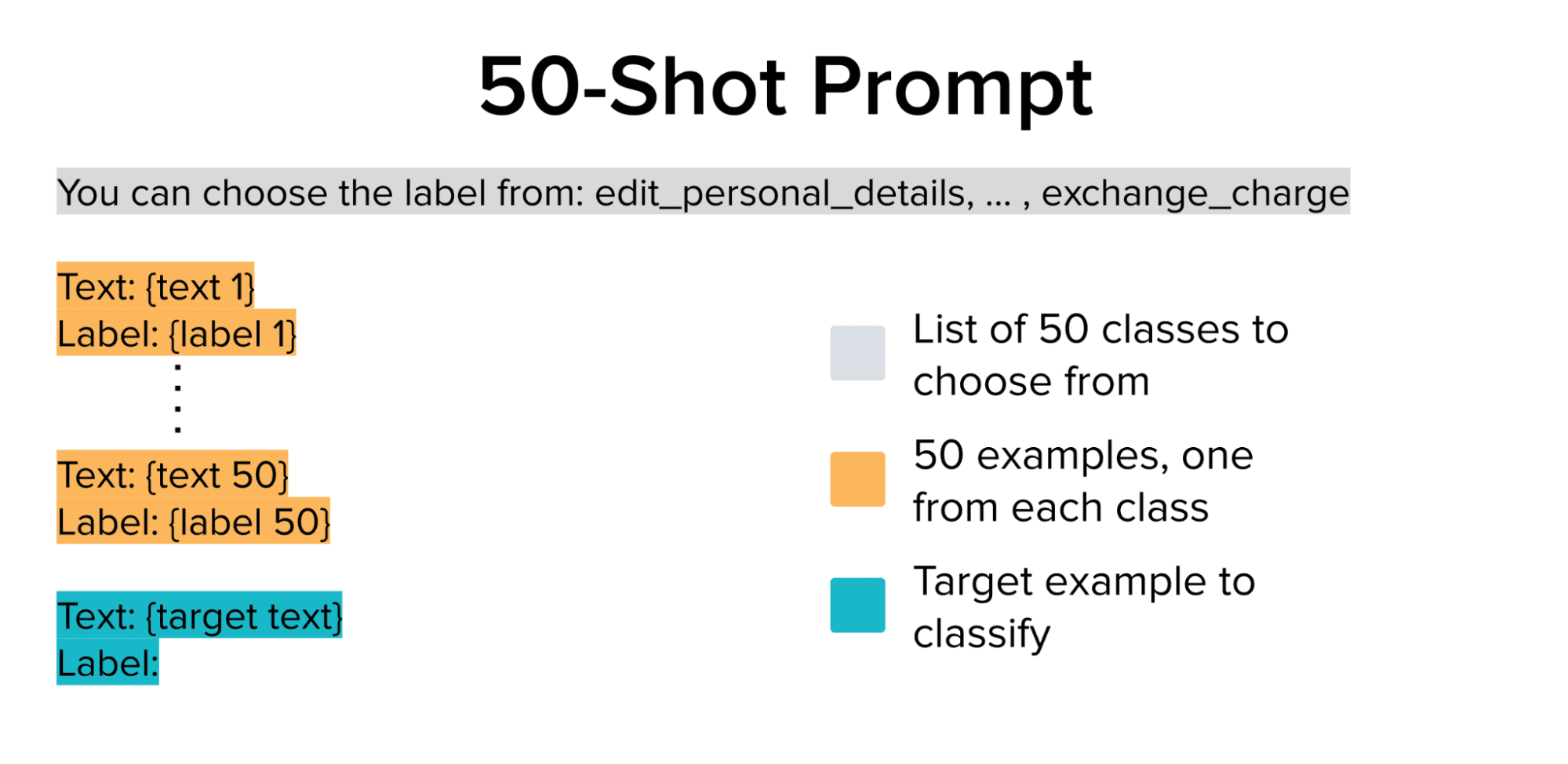

In our case, we have a dataset with 50 possible classes (intents) to provide a context that OpenAI’s pre-trained LLM can learn to distinguish between classes in a context. Using LangChain, we select one random instance from 50 classes (our labeled candidate instances) and build a 50-frame query template. We also add a line that lists the possible classes before the multiple hit examples to ensure that the LLM output is the correct class (ie, the intent category).

The above 50 hit queries are used as input to LLM to classify each instance of the test set (target text above is the only part of this input that changes between different test instances). These predictions are compared to the ground truth labels to evaluate the accuracy of the LLM generated using fast patterns of a few selected hits.

Performance of the base model

# This method handles:

# - collecting each of the test examples

# - formatting the prompt

# - querying the LLM API

# - parsing the output

def eval_prompt(examples_pool, test, prefix="", use_examples=True):

texts = test.text.values

responses = []

examples = get_examples(examples_pool, seed) if use_examples else []

for i in range(len(texts)):

text = texts[i]

prompt = get_prompt(examples_pool, text, examples, prefix)

resp = get_response(prompt)

responses.append(resp)

return responses# Evaluate the 50-shot prompt shown above.

preds = eval_prompt(examples_pool, test)

evaluate_preds(preds, test)

>>> Model Accuracy: 59.6%Running each test instance through LLM with the 50 firing calls shown above, we achieve 59.6% accuracy, which is not bad for a 50-class problem. But this is not quite satisfactory for our bank’s customer service application, so let’s take a closer look at the database of candidate examples (ie, the pool). When ML performs poorly, the data is often to blame!

Issues in our data

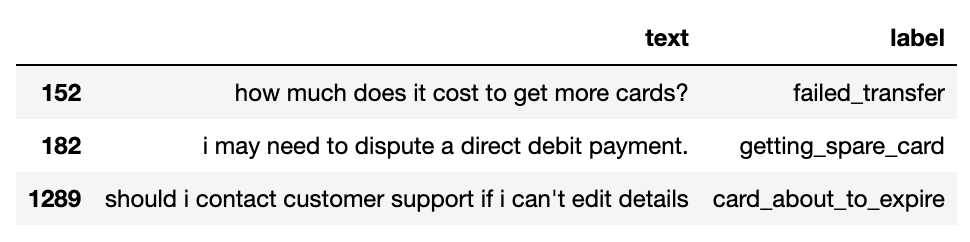

By closely inspecting the candidate pool of examples from which several of our frame requests were drawn, we find mislabeled phrases and outliers. Here are some examples that were clearly annotated incorrectly.

Previous research has shown that many popular datasets contain mislabeled examples because data annotation teams are imperfect.

It is also common for customer service datasets to contain examples that were included by accident. Here we see some strange examples that do not comply with the current requirements of banking customer service.

Why do these issues matter?

As the size of the context of LLMs increases, it is often the case that multiple instances of the query are included. As such, it may not be possible to manually verify every instance in your multiple-hit query, especially with a large number of classes (or if you have no domain knowledge about them). If the data source for some of these examples contains things like the one shown above (as many real-world data sets do), then invalid examples may appear in your queries. The rest of the article discusses the impact of this problem and how we can mitigate it.

Can we warn LLM that the examples can be noisy?

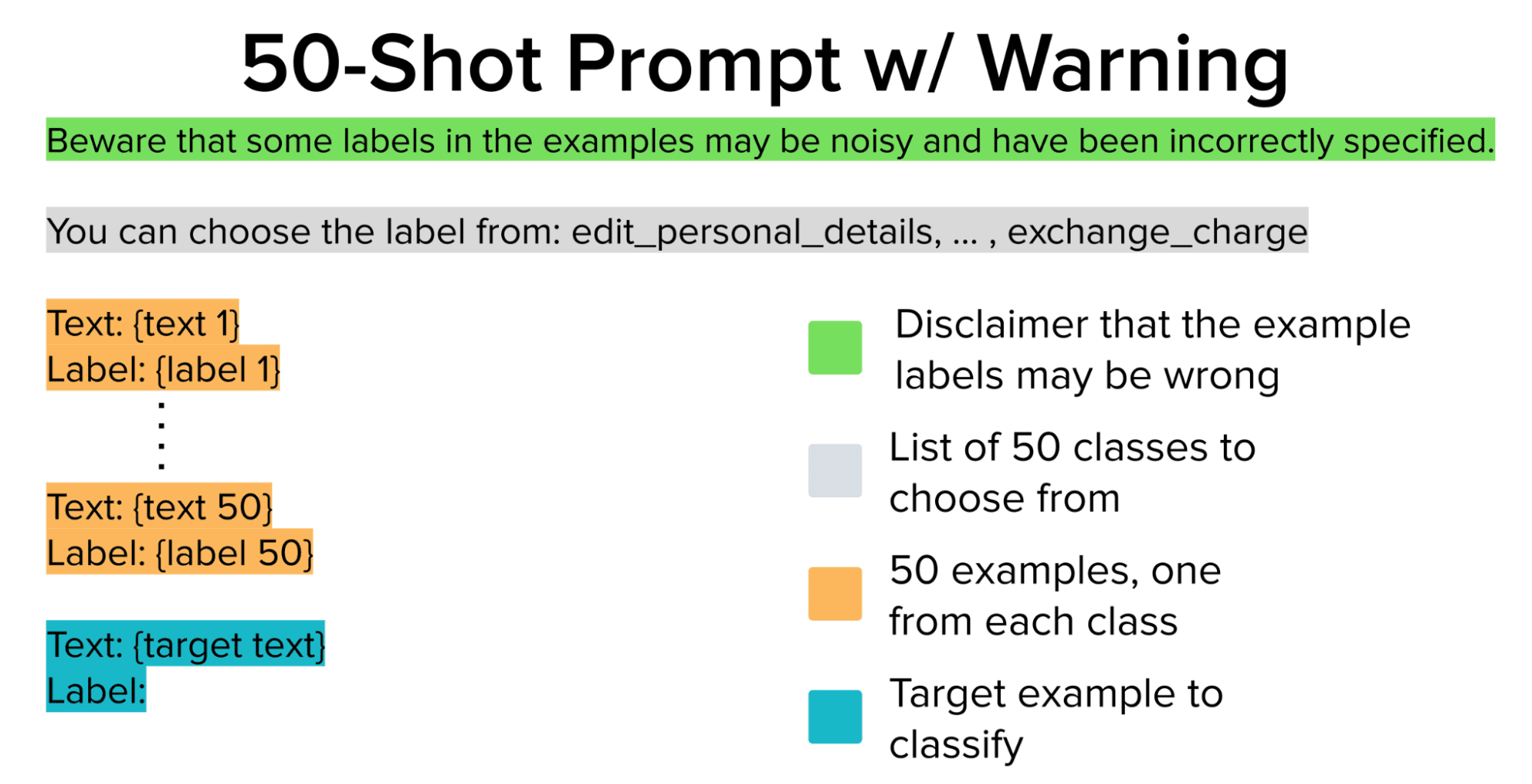

What if we simply include a “disclaimer” in the query that tells LLM that some of the captions in some of the examples provided may be incorrect? Here we discuss the next modification of our quick template, which still contains the same 50-some examples as before.

prefix = 'Beware that some labels in the examples may be noisy and have been incorrectly specified.'

preds = eval_prompt(examples_pool, test, prefix=prefix)

evaluate_preds(preds, test)

>>> Model Accuracy: 62.0%Using the above query, we achieve 62% accuracy. Slightly better, but still not good enough to use LLM for intent classification in our bank’s customer service system!

Can we remove noisy examples entirely?

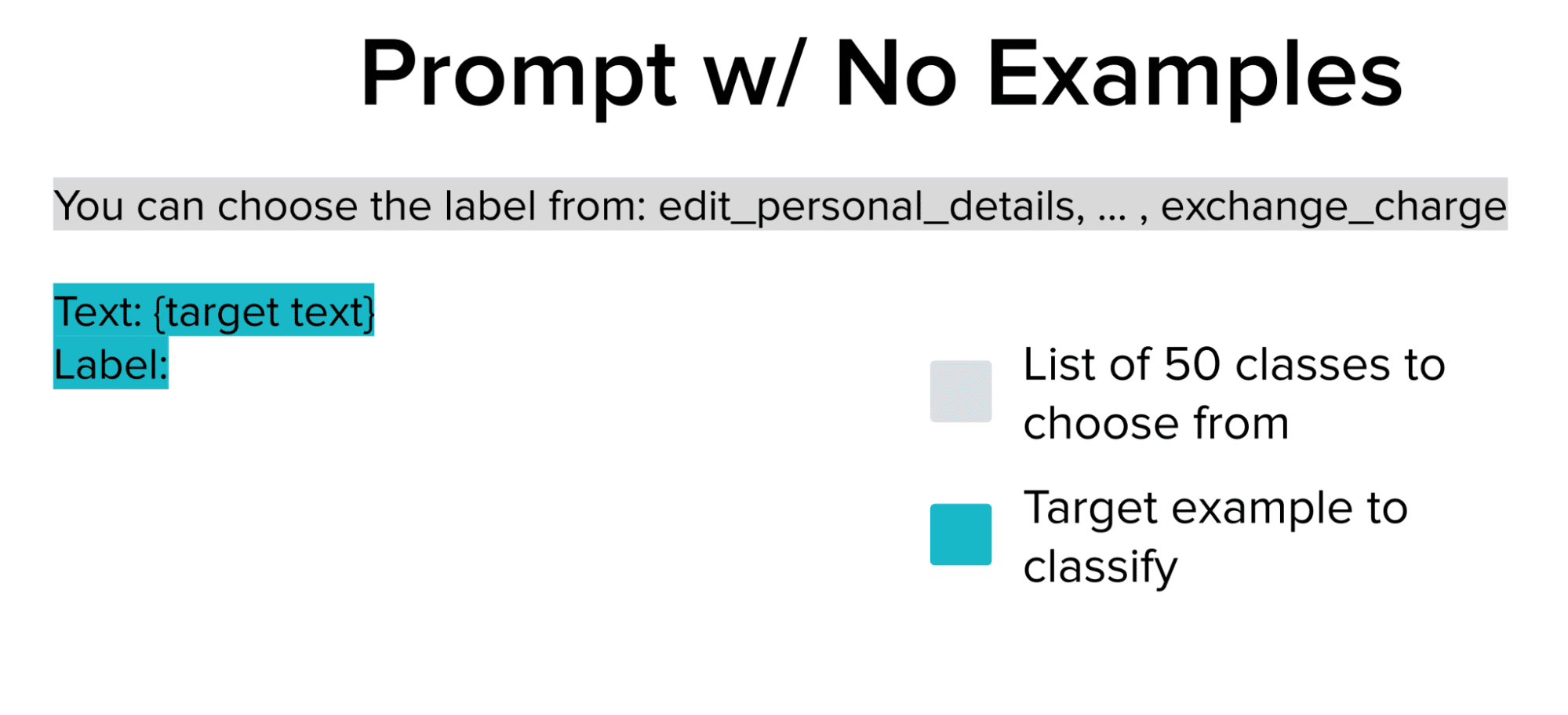

Since we can’t trust the labels to pool a few hit examples, what if we just take them out of the call and just rely on the strong LLM? Instead of asking for multiple shots, we do a zero-shot request, in which the only example included in the request is the one that the LLM has to classify. The zero shot requirement relies entirely on the LLM’s pre-trained knowledge to produce correct results.

preds = eval_prompt(examples_pool, test, use_examples=False)

evaluate_preds(preds, test)

>>> Model Accuracy: 67.4%After completely removing the few bad frame examples, we achieve 67.4% accuracy, which is the best we’ve done so far!

Apparently, the noisy few photographed examples actually can damage Model performance instead of increasing it as they should.

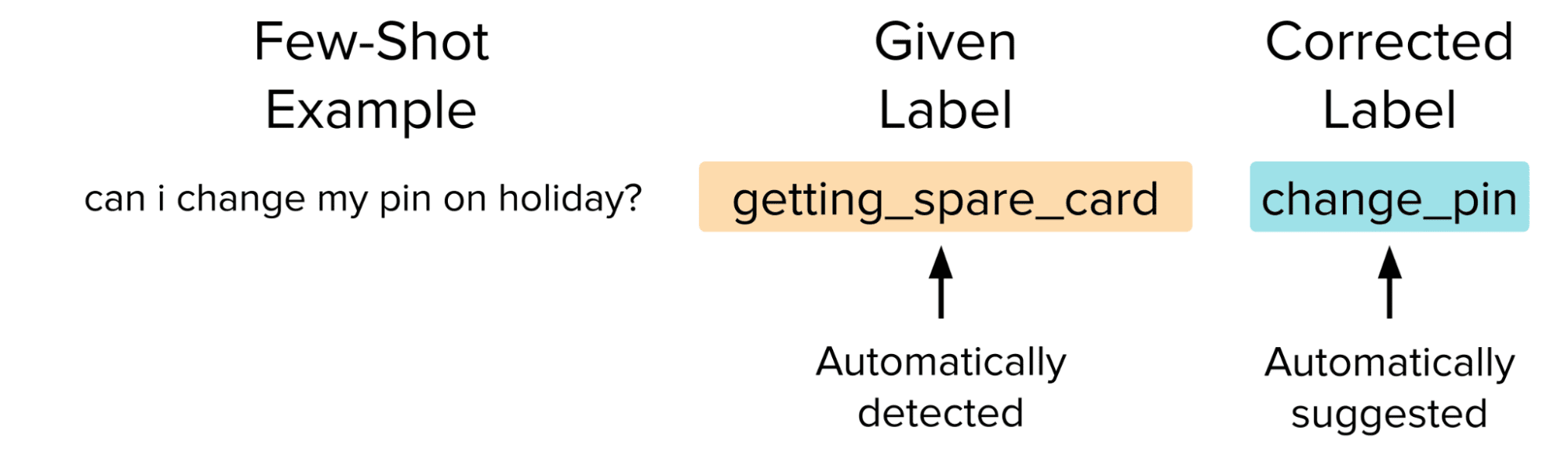

Can we identify and correct noisy examples?

Rather than changing the query or removing examples entirely, a smarter (though more difficult) way to improve our database would be to manually find and fix label problems. This simultaneously removes a noisy data point that harms the model and adds a precise point that should improve its performance by requiring multiple shots, but such corrections are difficult to make manually. Here we clean the data seamlessly using Cleanlab Studio, a platform that implements Confident Learning algorithms to automatically find and fix label issues.

After replacing the putative bad labels with those judged to be more appropriate via Confident Learning, we rerun the original 50-hit query for each test instance in LLM, except this time we use Autocorrect label which ensures that we provide the LLM with 50 high-quality examples in its multiple hit requirements.

# Source examples with the corrected labels.

clean_pool = pd.read_csv("studio_examples_pool.csv")

clean_examples = get_examples(clean_pool)

# Evaluate the original 50-shot prompt using high-quality examples.

preds = eval_prompt(clean_examples, test)

evaluate_preds(preds, test)

>>> Model Accuracy: 72.0%After that, we will reach an accuracy of 72%, which is quite impressive 50 for the class problem.

We have now shown that noisy few-shot examples can significantly degrade the performance of LLM, and it is suboptimal to simply modify the query manually (by adding warnings or removing examples). To achieve the highest performance, you should also try to refine your examples using data-driven AI techniques such as confidence-inspiring learning.

This article highlights the importance of providing fast sampling of reliable multiple frames of language models, specifically focusing on customer service intention classification in the banking domain. By examining a large bank’s customer service inquiry data set and applying a few-hit query technique using Davinci LLM, we encountered challenges arising from noisy and erroneous multiple examples. We have shown that changing the call or just removing examples cannot ensure optimal model performance. Instead, data-driven AI algorithms like Confident Learning, implemented in tools like Cleanlab Studio, have proven more effective at identifying and correcting label problems, resulting in significantly improved accuracy. This study highlights the role of algorithmic data processing in obtaining reliable multiple-shot queries and highlights the utility of such techniques in improving language model performance in various domains.

Chris Mauk is a data scientist at Cleanlab.

[ad_2]

Source link