
Diffusion models have recently emerged as the de facto standard for generating complex, high-dimensional outputs. You may know them for their ability to produce stunning AI art and hyper-realistic synthetic images, but they have also found success in other applications such as drug design and continuous control. The key idea behind diffusion models is to iteratively transform random noise into a sample, such as an image or a protein structure. This is typically motivated as a maximum likelihood estimation problem, where the model is trained to generate samples that match the training data as closely as possible.

However, most use cases of diffusion models are not directly concerned with matching the training data, but instead with a downstream objective. We don’t just want an image that looks like existing images, but one that has a specific type of appearance; we don’t just want a drug molecule that is physically plausible, but one that is as effective as possible. In this post, we show how diffusion models can be trained on these downstream objectives directly using reinforcement learning (RL). To do this, we finetune Stable Diffusion on a variety of objectives, including image compressibility, human-perceived aesthetic quality, and prompt-image alignment. The last of these objectives uses feedback from a large vision-language model to improve the model’s performance on unusual prompts, demonstrating how powerful AI models can be used to improve each other without any humans in the loop.

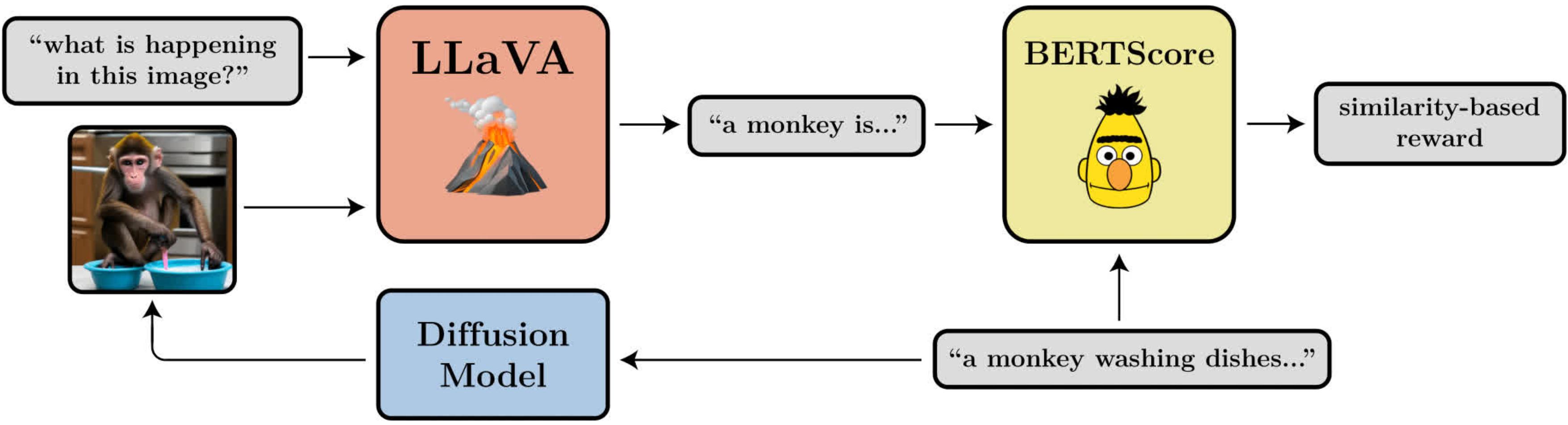

A diagram illustrating the prompt-image alignment objective. It uses LLaVA, a large vision-language model, to evaluate generated images.

Denoising Diffusion Policy Optimization

In turning diffusion into an RL problem, we make only the most basic assumption: given a sample (e.g., an image), we have access to a reward function that we can evaluate to tell us how “good” that sample is. Our goal is for the diffusion model to generate samples that maximize this reward function.
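Stated as an optimization problem, the goal is to find model parameters θ that maximize the expected reward of the model's own samples. In the sketch below, c is a prompt (or other conditioning) drawn from some distribution p(c), x_0 is the final denoised sample, and r(x_0, c) is the reward; this notation is our assumption for illustration.

$$
\mathcal{J}(\theta) = \mathbb{E}_{c \sim p(c),\; x_0 \sim p_\theta(x_0 \mid c)}\big[\, r(x_0, c) \,\big]
$$

Note that no dataset appears in this objective: the expectation is taken over samples generated by the model itself.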

Diffusion models are typically trained using a loss function derived from maximum likelihood estimation (MLE), which means they are encouraged to generate samples that make the training data more likely. In the RL setting, we no longer have training data, only samples from the diffusion model and their associated rewards. One way we can still use the same MLE-motivated loss function is to treat the samples as training data and incorporate the rewards by weighting the loss of each sample according to its reward. This gives us an algorithm we call reward-weighted regression (RWR), following existing algorithms from the RL literature.
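A minimal NumPy sketch of the reward-weighted idea, assuming exponentiated rewards exp(βr) as per-sample weights (one common choice in the RWR literature; the temperature β and the normalization are assumptions, not details from this post). The per-sample losses stand in for the usual noise-prediction loss computed on the model's own samples:

```python
import numpy as np


def rwr_weights(rewards: np.ndarray, beta: float = 1.0) -> np.ndarray:
    """Per-sample weights proportional to exp(beta * reward), normalized to sum to 1."""
    # Subtracting the max reward before exponentiating avoids overflow
    # without changing the normalized weights.
    scaled = beta * (rewards - rewards.max())
    weights = np.exp(scaled)
    return weights / weights.sum()


def rwr_loss(per_sample_loss: np.ndarray, rewards: np.ndarray, beta: float = 1.0) -> float:
    """Reward-weighted version of the MLE-motivated diffusion loss.

    per_sample_loss[i] is the usual denoising (noise-prediction) loss of
    sample i, computed by treating the model's own sample as training data.
    The weights are treated as constants: no gradient flows through them.
    """
    weights = rwr_weights(rewards, beta)
    return float(np.sum(weights * per_sample_loss))


# Toy usage: three generated samples, the last one with the highest reward.
losses = np.array([0.30, 0.25, 0.40])
rewards = np.array([-1.0, 0.0, 2.0])
print(rwr_loss(losses, rewards, beta=1.0))
```

Larger β concentrates the weight on the highest-reward samples; β = 0 recovers the plain, unweighted average of the per-sample losses.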

However, this approach has a few problems. One is that RWR is not a particularly exact algorithm: it maximizes the reward only approximately (see Nair et al., Appendix A). The MLE-inspired loss for diffusion is also not exact; it is instead derived from a variational bound on the true likelihood of each sample. This means that RWR maximizes the reward through two levels of approximation, which, in our experience, significantly hurts its performance.

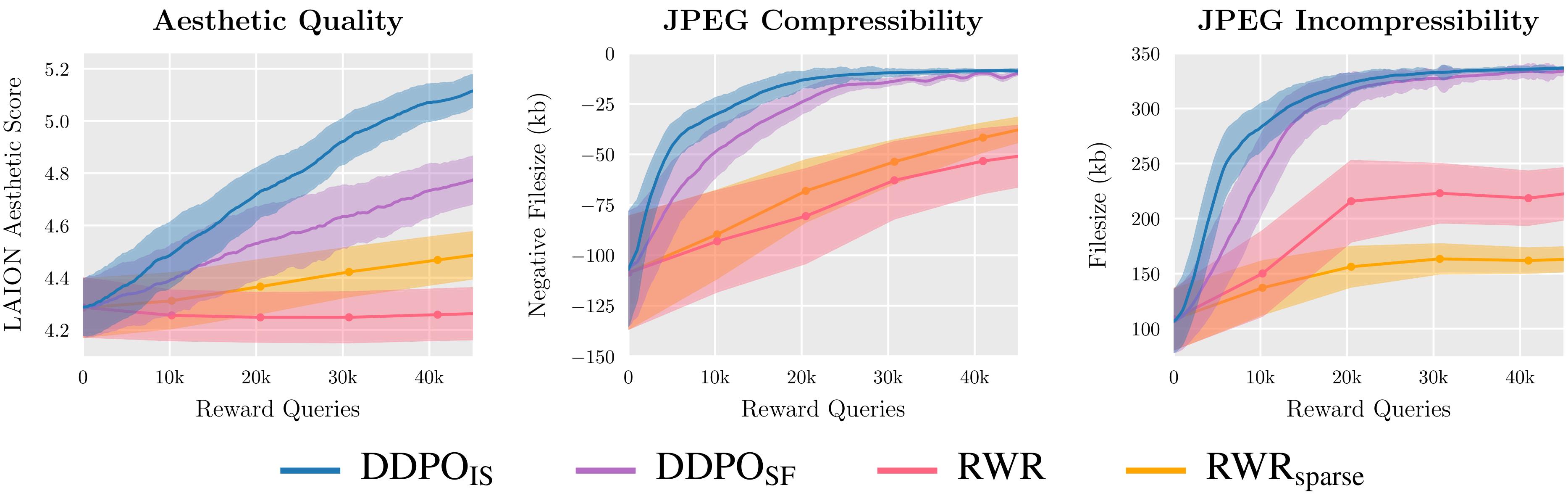

We evaluate two variants of DDPO and two variants of RWR on three reward functions and find that DDPO consistently achieves the best performance.

The key insight of our algorithm, which we call denoising diffusion policy optimization (DDPO), is that we can better maximize the reward of the final sample if we pay attention to the entire sequence of denoising steps that got us there. To do this, we reframe the diffusion process as a multi-step Markov decision process (MDP). In MDP terminology, each denoising step is an action, and the agent only receives a reward on the final step of each denoising trajectory, when the final sample is produced. This framework allows us to apply many powerful algorithms from the RL literature that are designed specifically for multi-step MDPs. Instead of using the approximate likelihood of the final sample, these algorithms use the exact likelihood of each denoising step, which is extremely easy to compute.
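To make the point about exact per-step likelihoods concrete: in stochastic samplers such as DDPM, each step draws x_{t-1} from a Gaussian whose mean is predicted by the network, so its log-probability has a closed form. The sketch below treats one denoising trajectory as an MDP episode, assuming an isotropic Gaussian step with a fixed per-step standard deviation. `mean_fn` is a hypothetical stand-in for the denoising network, and the toy `0.9 * x` mean and quadratic reward are placeholders, not anything from the post.

```python
import numpy as np


def gaussian_log_prob(x: np.ndarray, mean: np.ndarray, std: float) -> float:
    """Exact log-density of x under N(mean, std^2 * I), summed over all dimensions."""
    d = x.size
    return float(
        -0.5 * np.sum((x - mean) ** 2) / std**2
        - d * np.log(std)
        - 0.5 * d * np.log(2.0 * np.pi)
    )


def rollout(mean_fn, stds, reward_fn, dim=4, seed=0):
    """Run one denoising trajectory as an MDP episode.

    The state at step t is x_t (the prompt and timestep are implicit here),
    the action is the next sample x_{t-1}, and the reward is zero except at
    the final step, when x_0 is produced.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(dim)  # x_T ~ N(0, I)
    log_probs, rewards = [], []
    for t in range(len(stds), 0, -1):
        mean = mean_fn(x, t)  # model-predicted mean of p(x_{t-1} | x_t)
        std = stds[t - 1]
        x_prev = mean + std * rng.standard_normal(dim)  # sample the action
        log_probs.append(gaussian_log_prob(x_prev, mean, std))
        rewards.append(0.0)
        x = x_prev
    rewards[-1] = reward_fn(x)  # sparse reward on the final sample only
    return x, np.array(log_probs), np.array(rewards)


# Toy usage: a placeholder "denoiser" that shrinks x, and a placeholder reward.
x0, log_probs, rewards = rollout(
    mean_fn=lambda x, t: 0.9 * x,
    stds=[0.1] * 10,
    reward_fn=lambda x: -float(np.sum(x**2)),
)
```

The per-step log-probabilities recorded here are exactly the quantities that policy gradient methods differentiate; no approximation of the final sample's likelihood is needed.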

We chose to use policy gradient algorithms because of their ease of implementation and past success in language model finetuning. This led to two variants of DDPO: DDPO_SF, which uses the simple score function estimator of the policy gradient, also known as REINFORCE; and DDPO_IS, which uses a more powerful importance-sampled estimator. DDPO_IS is our best-performing algorithm, and its implementation closely follows that of proximal policy optimization (PPO).
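Given per-step log-probabilities log p_θ(x_{t-1} | x_t, c) for a batch of denoising trajectories, the two estimators differ mainly in the surrogate loss whose gradient is taken. The sketch below computes both surrogates with NumPy for readability; in practice they would be written in an autodiff framework such as PyTorch so that gradients flow through the current model's log-probabilities. The advantage normalization and the clip range of 0.2 are illustrative assumptions, not values from the post.

```python
import numpy as np


def normalize_advantages(rewards: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Subtract the batch mean and divide by the std (an assumed variance-reduction choice)."""
    return (rewards - rewards.mean()) / (rewards.std() + eps)


def ddpo_sf_loss(log_probs: np.ndarray, advantages: np.ndarray) -> float:
    """Score-function (REINFORCE) surrogate.

    log_probs: (batch, T) values of log p_theta(x_{t-1} | x_t, c) for every step.
    advantages: (batch,) one scalar per trajectory, derived from r(x_0, c).
    The gradient of this loss is the negative REINFORCE estimate: every step
    of a trajectory is credited with that trajectory's final reward.
    """
    return float(-np.mean(np.sum(log_probs, axis=1) * advantages))


def ddpo_is_loss(log_probs, old_log_probs, advantages, clip_range=0.2) -> float:
    """PPO-style clipped importance-sampling surrogate, applied per denoising step.

    old_log_probs come from the model that generated the samples; the
    likelihood ratio lets the same batch be reused for several gradient steps.
    """
    ratio = np.exp(log_probs - old_log_probs)  # (batch, T) per-step likelihood ratios
    adv = advantages[:, None]  # broadcast each trajectory's advantage over its steps
    unclipped = -adv * ratio
    clipped = -adv * np.clip(ratio, 1.0 - clip_range, 1.0 + clip_range)
    return float(np.mean(np.maximum(unclipped, clipped)))  # pessimistic bound, as in PPO
```

Clipping the ratio keeps each update close to the model that produced the samples, which is what allows DDPO_IS to take several optimization steps per batch of samples.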

Finetuning Stable Diffusion Using DDPO

For our main results, we finetune Stable Diffusion v1-4 using DDPO_IS. We have four tasks, each defined by a different reward function:

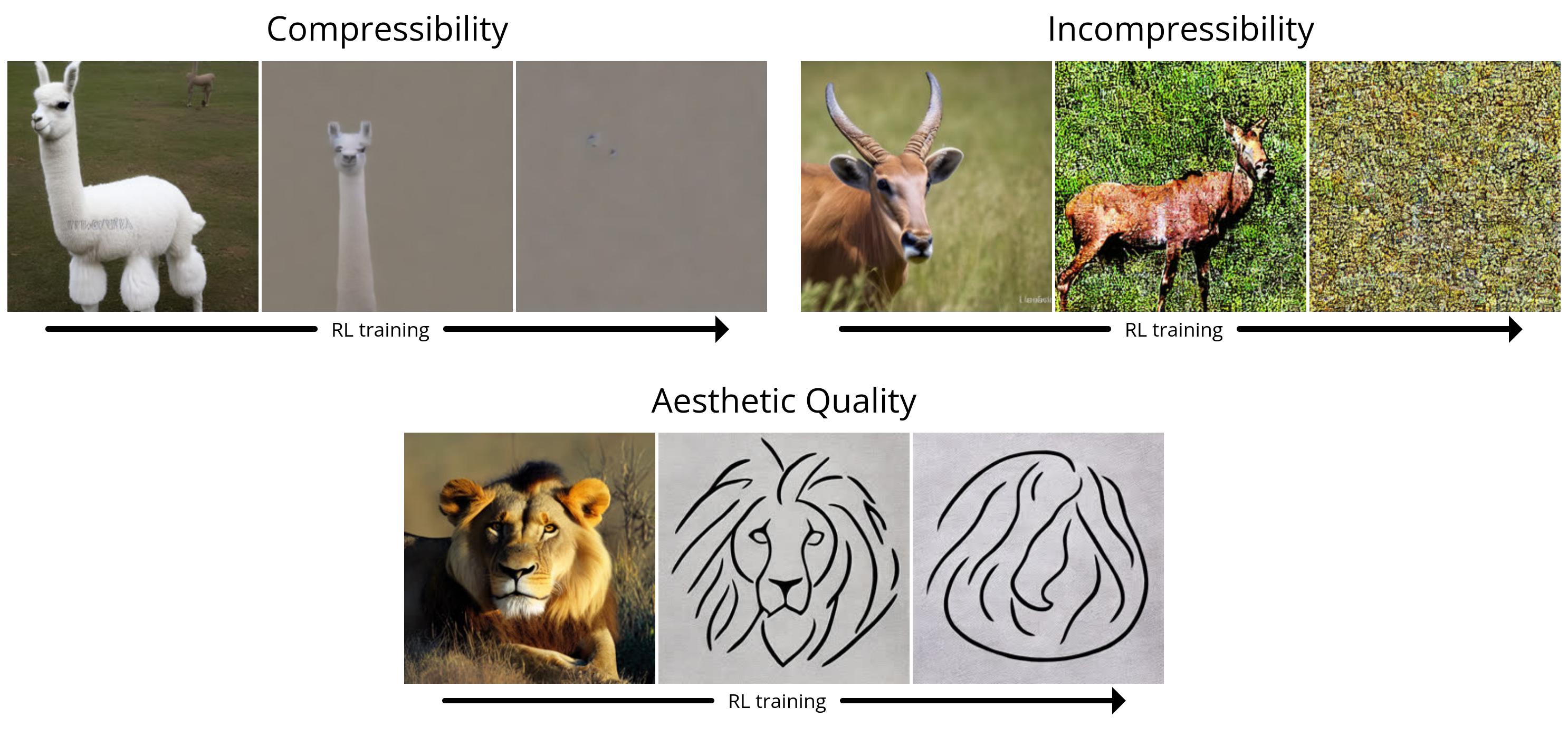

- Compressibility: How easy is the image to compress using the JPEG algorithm? The reward is the negative file size of the image (in kB) when saved as a JPEG.

- Incompressibility: How hard is the image to compress using the JPEG algorithm? The reward is the positive file size of the image (in kB) when saved as a JPEG.

- Aesthetic Quality: How aesthetically appealing is the image to the human eye? The reward is the output of the LAION aesthetic predictor, a neural network trained on human preferences.

- Prompt-Image Alignment: How well does the image represent what was asked for in the prompt? This one is a bit more complicated: we feed the image into LLaVA, ask it to describe the image, and then compute the similarity between that description and the original prompt using BERTScore.
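The first two rewards are simple to compute, and the fourth is a small pipeline. The sketch below uses Pillow to measure JPEG size and implements BERTScore-style greedy matching on precomputed token embeddings. The JPEG quality setting, the choice of recall as the returned score, and the `describe` and `embed_tokens` callables (hypothetical stand-ins for querying LLaVA and running a BERT-style encoder) are all assumptions. Real BERTScore also supports idf weighting and baseline rescaling, which are omitted here.

```python
import io

import numpy as np
from PIL import Image


def jpeg_size_kb(image: np.ndarray, quality: int = 95) -> float:
    """Size in kB of an (H, W, 3) uint8 image after JPEG encoding."""
    buffer = io.BytesIO()
    Image.fromarray(image).save(buffer, format="JPEG", quality=quality)
    return buffer.tell() / 1000  # bytes written so far = encoded file size


def compressibility_reward(image: np.ndarray) -> float:
    return -jpeg_size_kb(image)  # smaller files earn higher reward


def incompressibility_reward(image: np.ndarray) -> float:
    return jpeg_size_kb(image)  # larger files earn higher reward


def greedy_match_scores(cand_emb: np.ndarray, ref_emb: np.ndarray):
    """BERTScore-style greedy matching on token embeddings (no idf weighting or rescaling).

    cand_emb: (n_cand, dim), ref_emb: (n_ref, dim). Returns (precision, recall, f1).
    """
    cand = cand_emb / np.linalg.norm(cand_emb, axis=1, keepdims=True)
    ref = ref_emb / np.linalg.norm(ref_emb, axis=1, keepdims=True)
    sim = cand @ ref.T  # pairwise cosine similarities, shape (n_cand, n_ref)
    precision = float(sim.max(axis=1).mean())  # each candidate token's best match
    recall = float(sim.max(axis=0).mean())  # each reference token's best match
    f1 = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0
    return precision, recall, f1


def alignment_reward(image, prompt, describe, embed_tokens) -> float:
    """Ask a VLM to describe the image, then score the description against the prompt.

    describe(image) -> str and embed_tokens(text) -> (n_tokens, dim) array are
    hypothetical callables standing in for LLaVA and a BERT-style encoder.
    """
    description = describe(image)
    _, recall, _ = greedy_match_scores(embed_tokens(description), embed_tokens(prompt))
    return recall
```

Returning recall rewards a description that covers every prompt token while not penalizing extra detail in the description; whether this matches the original setup is an assumption of this sketch.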

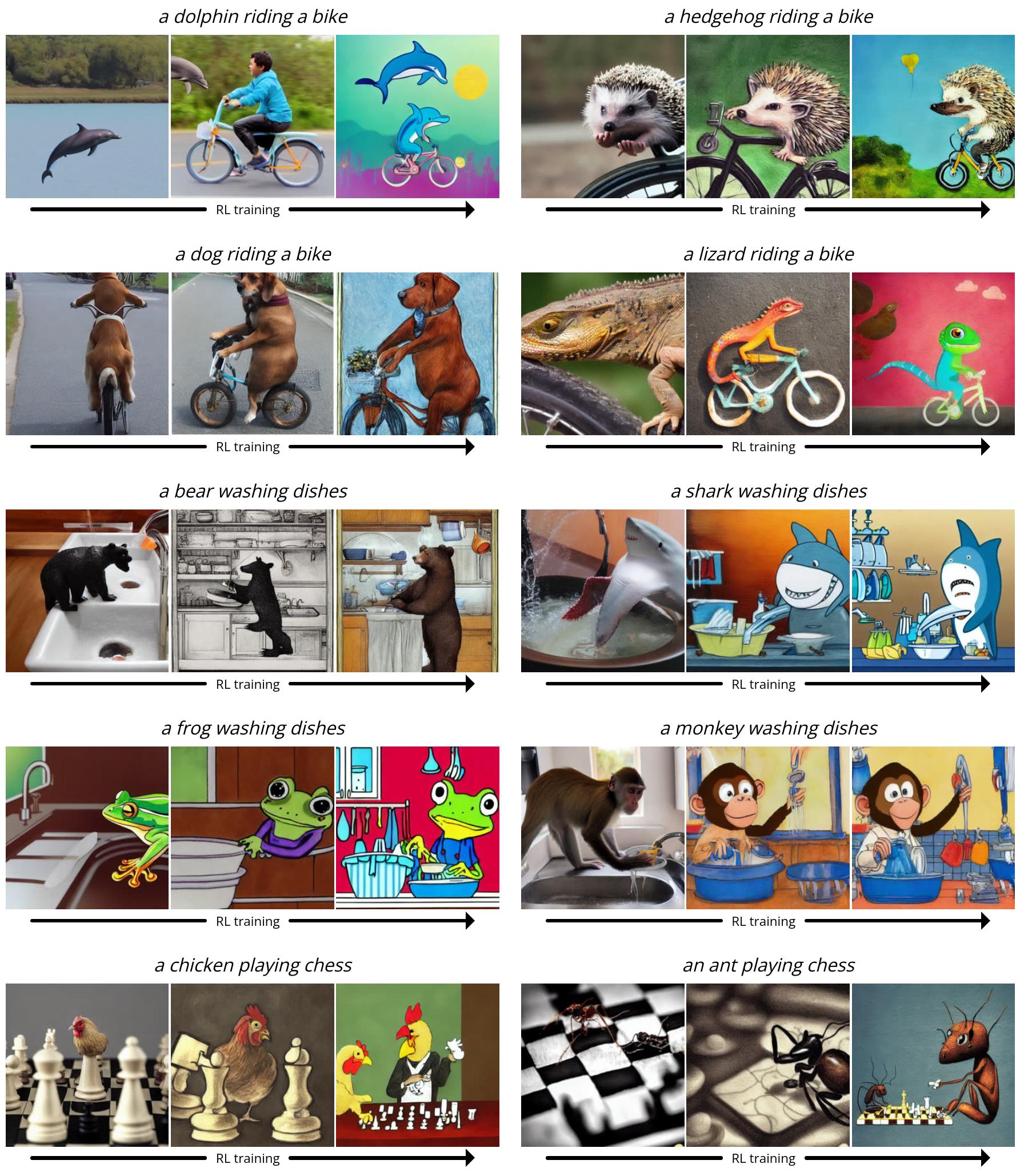

Since Stable Diffusion is a text-to-image model, we also need to pick a set of prompts to give it during finetuning. For the first three tasks, we use simple prompts of the form “a(n) [animal]”. For prompt-image alignment, we use prompts of the form “a(n) [animal] [activity]”, where the activities are “washing dishes”, “playing chess”, and “riding a bike”. We found that Stable Diffusion often struggled to produce images that matched the prompt for these unusual scenarios, leaving plenty of room for improvement with RL finetuning.
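A minimal sketch of how such prompts could be sampled during finetuning. The animal list here is a hypothetical subset (the actual list used for training is not reproduced here), and the vowel rule for “a” versus “an” is a simplifying heuristic that misses cases such as “an hour”:

```python
import random

# Hypothetical subset; the post's actual animal list is not reproduced here.
ANIMALS = ["cat", "dog", "elephant", "owl"]
ACTIVITIES = ["washing dishes", "playing chess", "riding a bike"]


def with_article(noun: str) -> str:
    """Prefix "a" or "an" using a simple first-letter vowel heuristic."""
    return ("an " if noun[0].lower() in "aeiou" else "a ") + noun


def simple_prompt(rng: random.Random) -> str:
    """Prompt for compressibility, incompressibility, and aesthetics: "a(n) [animal]"."""
    return with_article(rng.choice(ANIMALS))


def alignment_prompt(rng: random.Random) -> str:
    """Prompt for prompt-image alignment: "a(n) [animal] [activity]"."""
    return f"{with_article(rng.choice(ANIMALS))} {rng.choice(ACTIVITIES)}"


rng = random.Random(0)
batch = [alignment_prompt(rng) for _ in range(4)]  # each has the form "a(n) [animal] [activity]"
```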

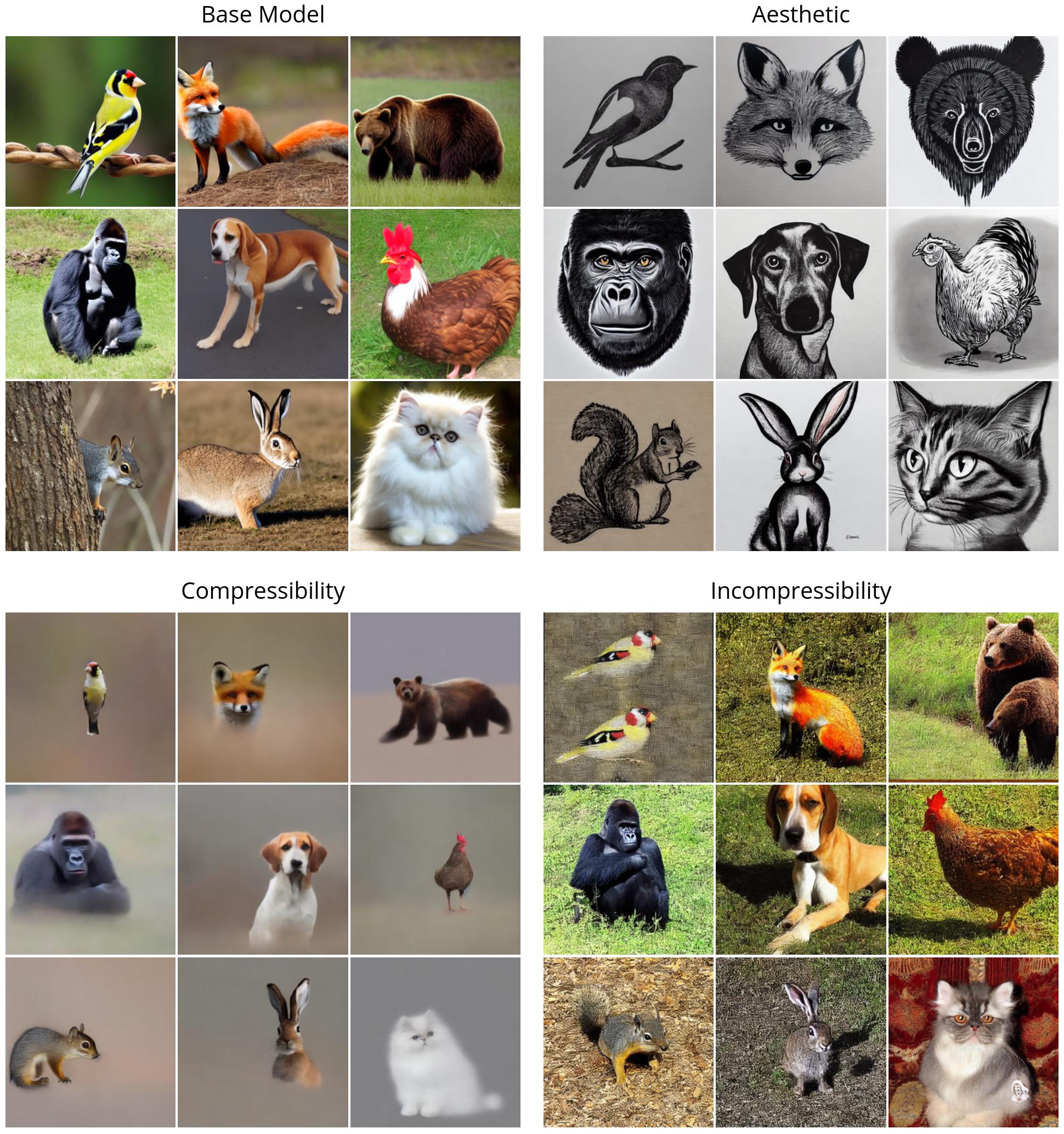

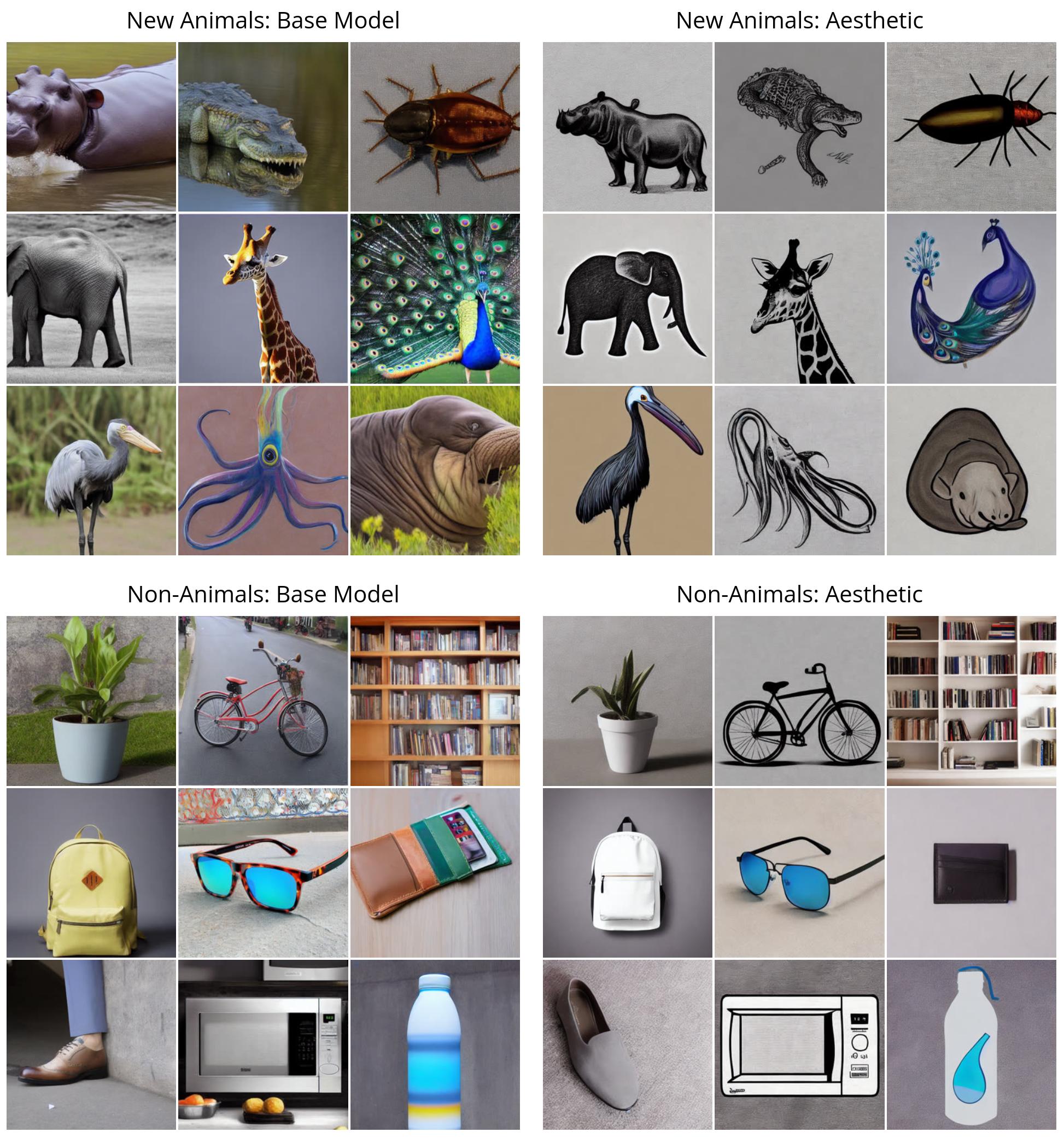

First, we illustrate the performance of DDPO on the simple rewards (compressibility, incompressibility, and aesthetic quality). All of the images are generated with the same random seed. In the top left quadrant, we show what “vanilla” Stable Diffusion generates for nine different animals; all of the RL-finetuned models show a clear qualitative difference. Interestingly, the aesthetic quality model (top right) tends towards minimalist black-and-white line drawings, revealing the kinds of images that the LAION aesthetic predictor considers “more aesthetic”.

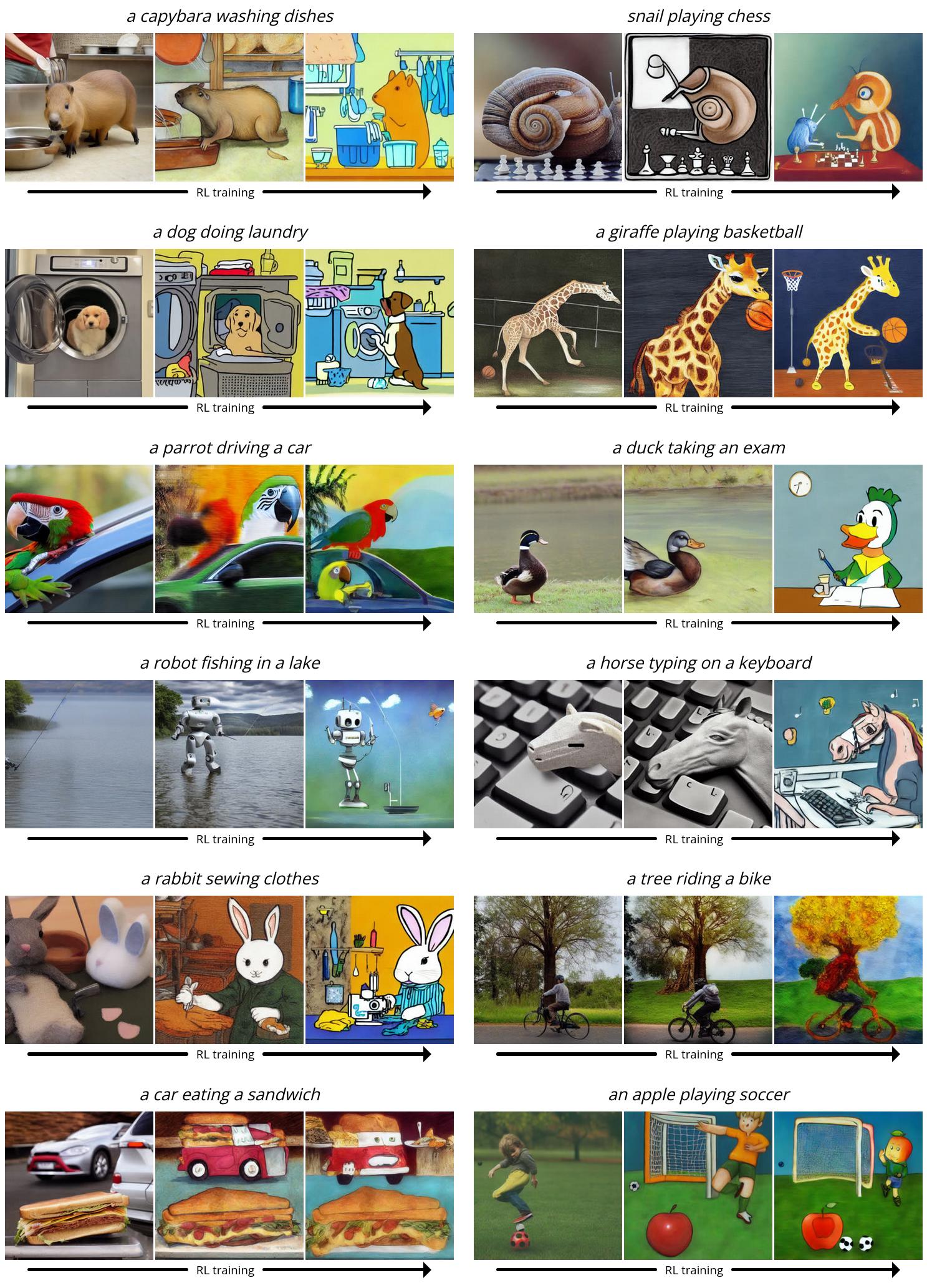

Next, we demonstrate DDPO on the more challenging prompt-image alignment task. Here, we show several snapshots from the training process: each series of three images shows samples for the same prompt and random seed over time, with the first sample coming from vanilla Stable Diffusion. Interestingly, the model shifts towards a more cartoon-like style, which was not intentional. We hypothesize that this is because animals doing human-like activities are more likely to appear in a cartoon-like style in the pretraining data, so the model shifts towards this style to more easily align with the prompt by leveraging what it already knows.

Unexpected Generalization

Surprising generalization has been found to arise when finetuning large language models with RL: for example, models finetuned on instruction-following only in English often improve in other languages. We find that the same phenomenon occurs with text-to-image diffusion models. For example, our aesthetic quality model was finetuned using prompts selected from a list of 45 common animals. We find that it generalizes not only to unseen animals but also to everyday objects.

Our prompt-image alignment model used the same list of 45 common animals during training, and only three activities. We find that it generalizes not only to unseen animals but also to unseen activities, and even to novel combinations of the two.

Overoptimization

It is well known that finetuning on a reward function, especially a learned one, can lead to reward overoptimization, where the model exploits the reward function to achieve a high reward in a non-useful way. Our setting is no exception: in all the tasks, the model eventually destroys any meaningful image content to maximize reward.

We also discovered that LLaVA is susceptible to typographic attacks: when optimizing for alignment with respect to prompts of the form “[n] animals”, DDPO was able to successfully fool LLaVA by instead generating text loosely resembling the correct number.

There is currently no general-purpose method to avoid overoptimization, and we highlight this problem as an important area for future work.

Conclusion

Diffusion models are hard to beat when it comes to producing complex, high-dimensional outputs. However, so far they have mostly been successful in applications where the goal is to learn patterns from lots and lots of data (for example, image-caption pairs). What we’ve found is a way to effectively train diffusion models that goes beyond pattern-matching, and without necessarily requiring any training data. The possibilities are limited only by the quality and creativity of your reward function.

The way we used DDPO in this work is inspired by the recent successes of language model finetuning. OpenAI’s GPT models, like Stable Diffusion, are first trained on huge amounts of Internet data; they are then finetuned with RL to produce useful tools like ChatGPT. Typically, their reward function is learned from human preferences, but others have more recently figured out how to produce powerful chatbots using reward functions based on AI feedback instead. Compared to the chatbot regime, our experiments are small-scale and limited in scope. But considering the enormous success of this “pretrain + finetune” paradigm in language modeling, it certainly seems worth pursuing further in the world of diffusion models. We hope that others can build on our work to improve large diffusion models, not just for text-to-image generation, but for many exciting applications such as video generation, music generation, image editing, protein synthesis, robotics, and more.

Furthermore, the “pretrain + finetune” paradigm is not the only way to use DDPO. As long as you have a good reward function, there is nothing stopping you from training with RL from the start. While this setting is as yet unexplored, it is where DDPO’s strengths could really shine. Pure RL has long been applied to a wide variety of domains, ranging from playing games to robotic manipulation, nuclear fusion, and chip design. Adding the powerful expressivity of diffusion models to the mix has the potential to take existing applications of RL to the next level, or even to discover new ones.

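This post is based on the following paper:

Training Diffusion Models with Reinforcement Learning
Kevin Black, Michael Janner, Yilun Du, Ilya Kostrikov, and Sergey Levine
arXiv:2305.13301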

If you want to learn more about DDPO, you can check out the paper, the website, or the original code, or get the model weights on Hugging Face. If you want to use DDPO in your own project, check out my PyTorch + LoRA implementation, where you can finetune Stable Diffusion with less than 10GB of GPU memory!

If DDPO inspires your work, please cite it with:

@misc{black2023ddpo,
  title={Training Diffusion Models with Reinforcement Learning},
  author={Kevin Black and Michael Janner and Yilun Du and Ilya Kostrikov and Sergey Levine},
  year={2023},
  eprint={2305.13301},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
