
Figure 1: Stepwise behavior in self-supervised learning. When training common SSL algorithms, we find that the loss decreases in a stepwise fashion (top left) and the learned embeddings iteratively increase in dimensionality (bottom left). Direct visualization of the embeddings (right; top three PCA directions shown) confirms that the embeddings initially collapse to a point, which then expands to a 1D manifold, a 2D manifold, and beyond, in step with the drops in the loss.

It is widely believed that the stunning success of deep learning is due in part to its ability to discover and extract useful representations of complex data. Self-supervised learning (SSL) has emerged as a leading framework for learning these representations for images directly from unlabeled data, much as LLMs learn representations for language directly from web-scraped text. Yet despite SSL's central role in recent models such as CLIP and Midjourney, fundamental questions such as “What are self-supervised image systems actually learning?” and “How does that learning actually occur?” still lack basic answers.

Our latest paper (to be presented at ICML 2023) presents what we suggest is the first compelling mathematical picture of the training process of large-scale SSL methods. Our simplified theoretical model, which we solve exactly, learns aspects of the data in a series of discrete, well-separated steps. We then show that this behavior can be observed in the wild across many modern systems. This discovery opens new avenues for improving SSL methods and raises a range of new scientific questions whose answers will provide a powerful lens for understanding some of today's most important deep learning systems.

Background

We focus here on joint-embedding SSL methods (a superset of contrastive methods), which learn representations that obey view-invariance criteria. The loss function of these models includes a term enforcing matching embeddings for semantically equivalent “views” of an image. Remarkably, this simple approach yields powerful representations for image tasks even when the views are as simple as random crops and color perturbations.
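
As a minimal sketch of the view-invariance idea, the NumPy code below produces two augmented views of one image and measures how far apart two batches of embeddings are. The crop and color functions are simplified stand-ins chosen for illustration (not the augmentation pipeline of any particular method), and `make_views` and `invariance_term` are hypothetical helper names. The invariance term is only one part of a joint-embedding loss: on its own it is minimized by mapping every input to the same point, which is why real methods add further terms.

```python
import numpy as np

def random_crop(img, size, rng):
    """Crop a random size x size patch from an (H, W, C) image."""
    h, w, _ = img.shape
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return img[top:top + size, left:left + size]

def color_jitter(img, rng, strength=0.4):
    """Crude color perturbation (hypothetical): random per-channel gain plus a shared brightness shift."""
    gain = 1.0 + strength * rng.uniform(-1.0, 1.0, size=(1, 1, img.shape[2]))
    shift = strength * rng.uniform(-0.5, 0.5)
    return np.clip(img * gain + shift, 0.0, 1.0)

def make_views(img, size, rng):
    """Two independently augmented views of the same image."""
    return tuple(color_jitter(random_crop(img, size, rng), rng) for _ in range(2))

def invariance_term(z_a, z_b):
    """Mean squared distance between embeddings of matched views, each of shape (N, d)."""
    return float(np.mean(np.sum((z_a - z_b) ** 2, axis=1)))

rng = np.random.default_rng(0)
img = rng.uniform(size=(32, 32, 3))          # stand-in for an RGB image in [0, 1]
view_a, view_b = make_views(img, size=24, rng=rng)
```

An encoder would map `view_a` and `view_b` to embeddings, and the invariance term would pull those embeddings together.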

Theory: Stepwise Learning in SSL with Linear Models

We first describe an exactly solvable linear model of SSL in which both the training trajectories and the final embeddings can be written in closed form. Notably, we find that representation learning separates into a series of discrete steps: the rank of the embeddings starts small and increases one step at a time over the course of training.

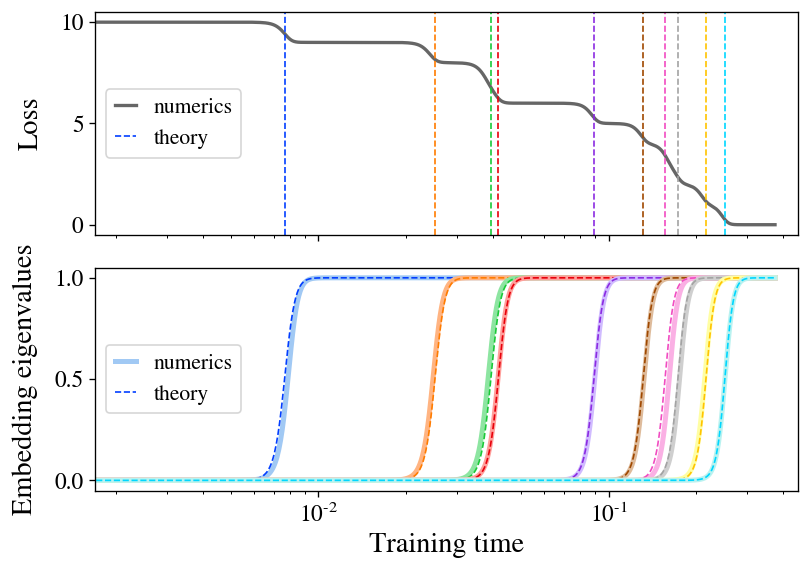

The main theoretical contribution of our paper is an exact solution of the training dynamics of the Barlow Twins loss function under gradient flow for the special case of a linear model \(\mathbf{f}(\mathbf{x}) = \mathbf{W} \mathbf{x}\). To sketch our findings here: when the initialization is small, the model learns representations composed of exactly the top-\(d\) eigendirections of the featurewise cross-correlation matrix \(\boldsymbol{\Gamma} \equiv \mathbb{E}_{\mathbf{x},\mathbf{x}'} [ \mathbf{x} \mathbf{x}'^T ]\), where \(\mathbf{x}\) and \(\mathbf{x}'\) are two views of the same image. Moreover, we find that these eigendirections are learned one at a time in a sequence of discrete learning steps, at times determined by their corresponding eigenvalues. Figure 2 illustrates this learning process, showing both the growth of a new direction in the represented function and the resulting drop in the loss at each learning step. As a bonus, we find a closed-form equation for the final embeddings learned by the model at convergence.

Figure 2: Stepwise learning appears in a linear model of SSL. We train a linear model with the Barlow Twins loss on a small sample of CIFAR-10. The loss (top) descends in a staircase fashion, with step times well predicted by our theory (dashed lines). The embedding eigenvalues (bottom) spring up one at a time, closely matching theory (dashed curves).
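
To see the staircase in the simplest possible setting, here is a small NumPy simulation, offered as a sketch under stated assumptions rather than the paper's code. We assume the simplified Barlow Twins objective \(\|\mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T - \mathbf{I}\|_F^2\) (no per-feature normalization, equal weight on diagonal and off-diagonal terms), a diagonal \(\boldsymbol{\Gamma}\) (that is, we work in its eigenbasis) with a hypothetical spectrum, and plain gradient descent with a small step size as a stand-in for gradient flow. The function name `simulate_linear_bt` is our own.

```python
import numpy as np

def simulate_linear_bt(gammas, d, init_scale=1e-5, lr=0.01, steps=4000, seed=0):
    """Gradient descent on L(W) = ||W Gamma W^T - I||_F^2 for a linear encoder f(x) = W x.

    Gamma is assumed diagonal (i.e. written in its eigenbasis) with entries `gammas`.
    Returns the loss at each step, the eigenvalues of W Gamma W^T (largest first)
    at each step, and the final W.
    """
    rng = np.random.default_rng(seed)
    gamma = np.diag(np.asarray(gammas, dtype=float))
    W = init_scale * rng.standard_normal((d, len(gammas)))  # small initialization
    eye = np.eye(d)
    losses, eigs = [], []
    for _ in range(steps):
        C = W @ gamma @ W.T                       # embedding cross-correlation
        R = C - eye
        losses.append(float(np.sum(R ** 2)))
        eigs.append(np.linalg.eigvalsh(C)[::-1])  # largest first
        W = W - lr * 4.0 * R @ W @ gamma          # dL/dW = 4 (C - I) W Gamma for symmetric Gamma
    return np.array(losses), np.array(eigs), W

gammas = [1.0, 0.5, 0.25, 0.05, 0.02, 0.01]   # hypothetical eigenvalues of Gamma
losses, eigs, W = simulate_linear_bt(gammas, d=3)
# First step at which each embedding eigenvalue passes 0.5.
step_times = [int(np.argmax(eigs[:, k] > 0.5)) for k in range(3)]
print(step_times)
```

Early in training \(\mathbf{W}\boldsymbol{\Gamma}\mathbf{W}^T \approx 0\), so the gradient step is approximately \(+4\eta\mathbf{W}\boldsymbol{\Gamma}\): column \(j\) of \(\mathbf{W}\) grows roughly like \(e^{4\gamma_j t}\) and its contribution to the embedding eigenvalues like \(e^{8\gamma_j t}\). In this toy, each step therefore arrives at a time roughly proportional to \(1/\gamma_j\) times the logarithm of the inverse initialization scale. The printed step times come out in increasing order, the loss falls from about 3 to 0 one unit at a time, and almost no weight ends up on the three smallest eigendirections.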

Our discovery of stepwise learning is a manifestation of the broader concept of spectral bias, the observation that many learning systems with approximately linear dynamics preferentially learn eigendirections with higher eigenvalues. This has recently been well studied in the case of standard supervised learning, where higher-eigenvalue eigenmodes have been found to be learned faster during training. Our work finds analogous results for SSL.
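
The supervised version of spectral bias fits in a few lines. As an illustrative assumption, take linear regression written in the eigenbasis of the input covariance, so that gradient descent acts independently on each mode: the residual in mode \(j\) after \(t\) steps with step size \(\eta\) is exactly \((1 - \eta\lambda_j)^t\), which shrinks fastest for the largest eigenvalue \(\lambda_j\). The helper name `gd_residuals` is hypothetical.

```python
import numpy as np

def gd_residuals(eigvals, lr=0.1, steps=50):
    """Gradient descent on the quadratic loss 0.5 * sum_j lam_j * (w_j - 1)^2.

    This is linear regression in the eigenbasis of the input covariance (eigenvalues
    `eigvals`), starting from w = 0 with a target weight of 1 in every mode.
    Returns the per-mode residual (1 - w_j) after each step.
    """
    lam = np.asarray(eigvals, dtype=float)
    w = np.zeros_like(lam)
    history = []
    for _ in range(steps):
        w = w - lr * lam * (w - 1.0)   # the gradient of the loss is lam * (w - 1)
        history.append(1.0 - w)
    return np.array(history)

res = gd_residuals([1.0, 0.1, 0.01])
print(res[-1])  # residual in each mode after 50 steps
```

With eigenvalues 1, 0.1, and 0.01 and a step size of 0.1, the residuals after 50 steps are roughly 0.005, 0.61, and 0.95: the top mode is essentially learned while the bottom one has barely moved.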

The reason the linear model merits careful study is that, as shown by the “neural tangent kernel” (NTK) line of work, sufficiently wide neural networks also have linear parameterwise dynamics. This fact is sufficient to extend our solution for the linear model to wide neural networks (or indeed to arbitrary kernel machines), in which case the model preferentially learns the top \(d\) eigendirections of a particular operator related to the NTK. Studies of the NTK have yielded many insights into the training and generalization of even nonlinear neural networks, which suggests that some of our insights may transfer to realistic cases.
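
To make “linear parameterwise dynamics” concrete: near its initialization a network's output changes as \(f(\mathbf{x}; \theta) \approx f(\mathbf{x}; \theta_0) + \nabla_\theta f(\mathbf{x}; \theta_0) \cdot (\theta - \theta_0)\), and the empirical NTK is the Gram matrix \(\Theta(\mathbf{x}, \mathbf{x}') = \nabla_\theta f(\mathbf{x}) \cdot \nabla_\theta f(\mathbf{x}')\). The NumPy sketch below computes it for a small two-layer ReLU network with hand-derived gradients. The architecture, width, and function names are assumptions for illustration; the specific NTK-related operator whose eigendirections the SSL model learns is defined in the paper and is not reproduced here.

```python
import numpy as np

def init_params(d_in, width, rng):
    # Standard-normal weights; the 1/sqrt(width) scaling is applied in the forward pass.
    return {"W": rng.standard_normal((width, d_in)), "a": rng.standard_normal(width)}

def forward(params, X):
    # Scalar-output two-layer ReLU network: f(x) = a . relu(W x) / sqrt(width).
    width = params["a"].shape[0]
    return np.maximum(X @ params["W"].T, 0.0) @ params["a"] / np.sqrt(width)

def param_jacobian(params, X):
    # Row i is the gradient of f(x_i) with respect to theta = (W flattened row-major, a).
    W, a = params["W"], params["a"]
    width = a.shape[0]
    pre = X @ W.T                                   # (N, width) preactivations
    d_a = np.maximum(pre, 0.0) / np.sqrt(width)     # df/da
    d_W = ((pre > 0) * a)[:, :, None] * X[:, None, :] / np.sqrt(width)  # df/dW, (N, width, d_in)
    return np.concatenate([d_W.reshape(X.shape[0], -1), d_a], axis=1)

def empirical_ntk(params, X):
    # Theta(x_i, x_j) = <grad_theta f(x_i), grad_theta f(x_j)>
    J = param_jacobian(params, X)
    return J @ J.T

rng = np.random.default_rng(0)
params = init_params(d_in=4, width=512, rng=rng)
X = rng.standard_normal((6, 4))
K = empirical_ntk(params, X)   # (6, 6) symmetric positive semidefinite kernel matrix
```

Training the linearized model by gradient descent on a loss over these inputs is a kernel method with kernel \(K\), which is what lets a linear-model analysis carry over to wide networks.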

Experiment: Stepwise Learning in SSL with ResNets

As our main experiment, we train several leading SSL methods with full-scale ResNet-50 encoders and find that, remarkably, this stepwise learning pattern is clearly visible even in realistic settings, suggesting that this behavior is central to how SSL learns.

To see stepwise learning with ResNets in a realistic setup, all we have to do is run the algorithm and track the eigenvalues of the embedding covariance matrix over time. In practice, the stepwise behavior is easier to see if we also train from a smaller-than-usual parameter initialization and with a small learning rate, so we use these modifications in the experiments discussed here; we discuss the standard case in our paper.
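
Tracking the embedding spectrum needs only a batch of embeddings at each checkpoint. Below is a minimal NumPy sketch: `embedding_spectrum` returns the covariance eigenvalues, and `num_active_modes` counts how many exceed a fixed fraction of the largest. Both names and the \(10^{-3}\) cutoff are our own hypothetical choices, not the paper's criterion; in a real run one would call them on encoder outputs for a fixed held-out batch at regular intervals.

```python
import numpy as np

def embedding_spectrum(Z):
    """Eigenvalues (largest first) of the covariance of a batch of embeddings Z, shape (N, d)."""
    Zc = Z - Z.mean(axis=0, keepdims=True)
    cov = Zc.T @ Zc / (Z.shape[0] - 1)
    return np.linalg.eigvalsh(cov)[::-1]

def num_active_modes(eigvals, rel_threshold=1e-3):
    """Count eigenvalues above rel_threshold times the largest one (hypothetical cutoff)."""
    top = eigvals[0]
    if top <= np.finfo(float).eps:   # fully collapsed: no spread in any direction
        return 0
    return int(np.sum(eigvals > rel_threshold * top))

rng = np.random.default_rng(0)
collapsed = np.ones((100, 16))                                            # every input maps to one point
rank_two = rng.standard_normal((100, 2)) @ rng.standard_normal((2, 16))   # spread confined to a plane
print(num_active_modes(embedding_spectrum(collapsed)), num_active_modes(embedding_spectrum(rank_two)))
```

A collapsed embedding has no active modes and an embedding confined to a plane has two, mirroring the point and 2D stages described for Figure 1.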

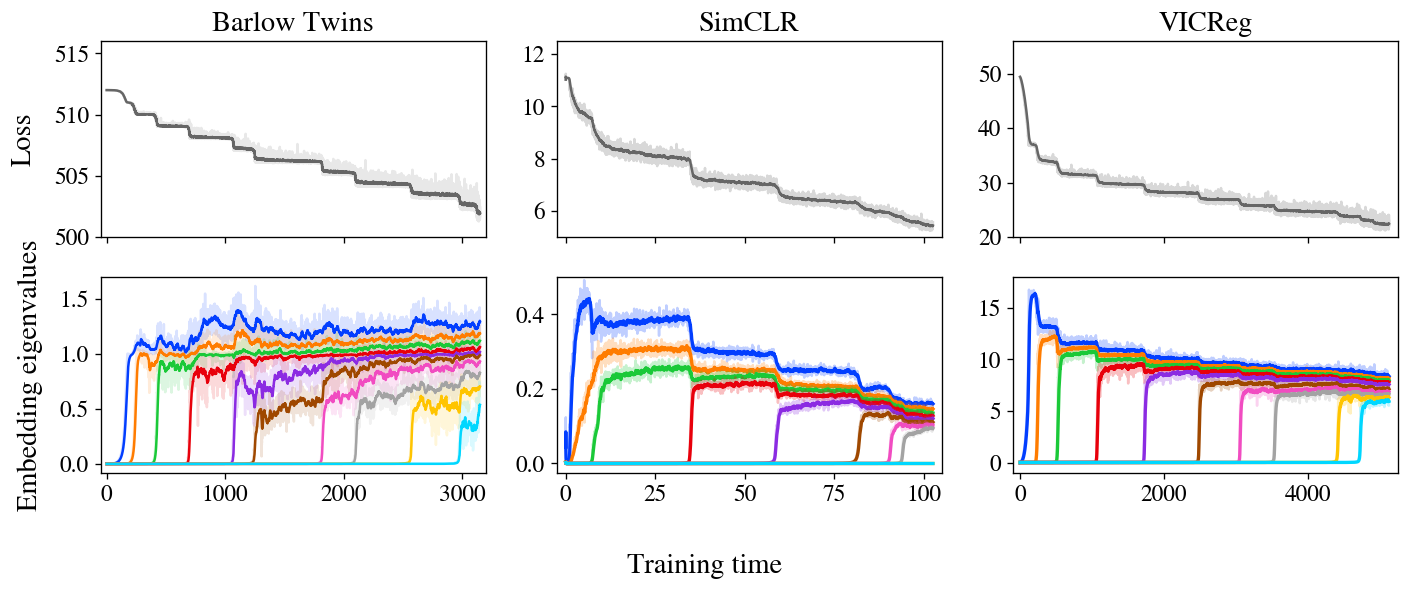

Figure 3: Stepwise learning is apparent in Barlow Twins, SimCLR, and VICReg. The loss and embeddings of all three methods display stepwise learning, with the embeddings iteratively increasing in rank as predicted by our model.

Figure 3 shows the loss and embedding covariance eigenvalues for three SSL methods (Barlow Twins, SimCLR, and VICReg) trained on the STL-10 dataset with standard augmentations. Remarkably, all three show very clear stepwise learning: the loss decreases in a staircase curve, and one new eigenvalue springs up from zero at each successive step. We also show an animated zoom-in on the early steps of Barlow Twins in Figure 1.

It’s worth noting that even though these three methods look quite different at first glance, folklore has long suspected that they do something similar under the hood. In particular, these and other joint-embedding SSL methods all achieve similar performance on benchmark tasks. The challenge, then, is to identify the shared behavior underlying these varied methods. Much prior theoretical work has focused on analytical similarities in their loss functions, but our experiments suggest a different unifying principle: SSL methods all learn embeddings one dimension at a time, iteratively adding new dimensions in order of salience.
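
For reference, here are NumPy sketches of the three objectives, which make the surface-level differences plain: Barlow Twins pushes a cross-correlation matrix toward the identity, SimCLR solves a classification problem over positive and negative pairs, and VICReg combines invariance, variance, and covariance penalties. These follow the published formulations as we understand them; the coefficients (Barlow Twins' \(\lambda = 5 \times 10^{-3}\), a SimCLR temperature of 0.5, VICReg's 25/25/1 weights) are commonly used defaults taken here as assumptions, and batch normalization is replaced by plain per-feature standardization.

```python
import numpy as np

def standardize(z, eps=1e-5):
    # Per-feature standardization over the batch (stand-in for batch normalization).
    return (z - z.mean(axis=0)) / (z.std(axis=0) + eps)

def barlow_twins_loss(z_a, z_b, lam=5e-3):
    c = standardize(z_a).T @ standardize(z_b) / z_a.shape[0]   # (d, d) cross-correlation
    on_diag = np.sum((1.0 - np.diag(c)) ** 2)                   # pull diagonal toward 1
    off_diag = np.sum(c ** 2) - np.sum(np.diag(c) ** 2)         # push off-diagonal toward 0
    return float(on_diag + lam * off_diag)

def simclr_loss(z_a, z_b, tau=0.5):
    # NT-Xent: each embedding must pick out its partner among the other 2N - 1 embeddings.
    n = z_a.shape[0]
    z = np.concatenate([z_a, z_b], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sim = z @ z.T / tau
    np.fill_diagonal(sim, -np.inf)                              # exclude self-similarity
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(n)])   # index of each row's partner
    row_max = sim.max(axis=1, keepdims=True)
    log_denominator = (row_max + np.log(np.sum(np.exp(sim - row_max), axis=1, keepdims=True)))[:, 0]
    return float(np.mean(log_denominator - sim[np.arange(2 * n), pos]))

def _vicreg_variance(z, eps):
    # Hinge on each feature's standard deviation with target value 1.
    std = np.sqrt(z.var(axis=0, ddof=1) + eps)
    return np.mean(np.maximum(0.0, 1.0 - std))

def _vicreg_covariance(z):
    # Sum of squared off-diagonal covariance entries, divided by the embedding dimension.
    zc = z - z.mean(axis=0)
    cov = zc.T @ zc / (z.shape[0] - 1)
    return (np.sum(cov ** 2) - np.sum(np.diag(cov) ** 2)) / z.shape[1]

def vicreg_loss(z_a, z_b, inv_w=25.0, var_w=25.0, cov_w=1.0, eps=1e-4):
    invariance = np.mean((z_a - z_b) ** 2)
    variance = _vicreg_variance(z_a, eps) + _vicreg_variance(z_b, eps)
    covariance = _vicreg_covariance(z_a) + _vicreg_covariance(z_b)
    return float(inv_w * invariance + var_w * variance + cov_w * covariance)

rng = np.random.default_rng(0)
z = rng.standard_normal((256, 32))                   # embeddings of view A
matched = z + 0.1 * rng.standard_normal((256, 32))   # nearly invariant embeddings of view B
unrelated = rng.standard_normal((256, 32))           # embeddings with no relation to z
for name, loss in [("Barlow Twins", barlow_twins_loss), ("SimCLR", simclr_loss), ("VICReg", vicreg_loss)]:
    print(f"{name}: matched={loss(z, matched):.3f} unrelated={loss(z, unrelated):.3f}")
```

All three losses are lower for the nearly matched pair than for the unrelated one, even though the formulas share little on the surface.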

In a final preliminary but promising experiment, we compare the real embeddings learned by these methods with theoretical predictions computed from the NTK after training. Not only do we find good agreement between theory and experiment within each method, but we also compare across methods and find that different methods learn similar embeddings, adding further support to the notion that these methods are ultimately doing similar things and may be unified.
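
One common way to check whether two sets of embeddings are “similar” is linear centered kernel alignment (CKA), which equals 1 when one embedding is an orthogonal transformation or rescaling of the other and is near 0 for unrelated embeddings. The sketch below only illustrates that kind of comparison; we are not claiming it is the metric used in the paper, and `linear_cka` is a hypothetical helper name.

```python
import numpy as np

def linear_cka(X, Y):
    """Linear centered kernel alignment between embeddings X (N, d1) and Y (N, d2)."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    cross = np.linalg.norm(Yc.T @ Xc, "fro") ** 2
    return float(cross / (np.linalg.norm(Xc.T @ Xc, "fro") * np.linalg.norm(Yc.T @ Yc, "fro")))

rng = np.random.default_rng(0)
X = rng.standard_normal((500, 16))                     # embeddings from "method A"
Q = np.linalg.qr(rng.standard_normal((16, 16)))[0]     # random orthogonal matrix
Y_unrelated = rng.standard_normal((500, 16))           # embeddings unrelated to X
print(linear_cka(X, X @ Q), linear_cka(X, 3.0 * X), linear_cka(X, Y_unrelated))
```

Because CKA ignores rotations and overall scale, two methods that learn the same eigendirections in different coordinates still score close to 1.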

Why does it matter?

Our work provides a basic theoretical picture of the process by which SSL methods assemble learned representations over the course of training. Now that we have a theory, what can we do with it? We see promise for this picture both to aid the practice of SSL from an engineering standpoint and to enable a better understanding of SSL and, potentially, of representation learning more broadly.

On the practical side, SSL models train slowly compared to supervised models, and the reason for this difference is not known. Our picture of training suggests that SSL training takes a long time to converge because the later eigenmodes have long time constants and take a long time to grow significantly. If this picture is correct, speeding up training might be as simple as selectively focusing the gradient on small embedding eigendirections to pull them up to the level of the others, which could in principle be done with a simple modification to either the loss function or the optimizer. We discuss these possibilities in more detail in our paper.

On the scientific side, framing SSL as an iterative process makes it possible to ask many questions about the individual eigenmodes. Are the modes learned first more useful than those learned later? How do different augmentations change the learned modes, and does this depend on the particular SSL method? Can we assign semantic content to any (subset of the) eigenmodes? (For example, we've noticed that the first few modes learned sometimes represent highly interpretable features like an image's average hue and saturation.) If other forms of representation learning converge to similar representations (a fact that is easy to test), then the answers to these questions may have implications for deep learning more broadly.
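
One simple way to probe whether an eigenmode tracks a feature like saturation is to project embeddings onto the top covariance eigenvectors and correlate each projection with a per-image statistic. The sketch below is a hypothetical probe on synthetic data (the embeddings are constructed so that the leading direction encodes mean saturation), not the analysis from the paper; hue would need a circular mean and is omitted.

```python
import numpy as np

def mean_saturation(images):
    """Per-image mean HSV saturation, (max - min) / max, for RGB images (N, H, W, 3) in [0, 1]."""
    mx = images.max(axis=-1)
    mn = images.min(axis=-1)
    sat = np.where(mx > 0, (mx - mn) / np.maximum(mx, 1e-12), 0.0)
    return sat.mean(axis=(1, 2))

def mode_correlations(Z, stat, k=3):
    """|Pearson correlation| between a per-image statistic and projections onto the top-k covariance eigenvectors."""
    Zc = Z - Z.mean(axis=0)
    _, vecs = np.linalg.eigh(Zc.T @ Zc)
    top = vecs[:, ::-1][:, :k]   # eigh returns ascending order; keep the k largest
    proj = Zc @ top
    return np.array([abs(np.corrcoef(proj[:, i], stat)[0, 1]) for i in range(k)])

rng = np.random.default_rng(0)
images = rng.uniform(size=(200, 8, 8, 3))            # synthetic RGB images in [0, 1]
sat = mean_saturation(images)
sat_std = (sat - sat.mean()) / sat.std()
# Synthetic embeddings: the highest-variance direction encodes saturation, the rest is small noise.
Z = np.column_stack([5.0 * sat_std, 0.5 * rng.standard_normal((200, 7))])
corr = mode_correlations(Z, sat)
print(np.round(corr, 3))
```

With real encoder outputs, a high correlation on an early mode and low correlations elsewhere would be one piece of evidence that the mode carries that feature.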

All in all, we are optimistic about the prospects for future work in this area. Deep learning remains a great theoretical mystery, but we believe that our findings here provide a useful foundation for further studies on the learning behavior of deep networks.

This post is based on the paper On the Stepwise Nature of Self-Supervised Learning, joint work with Maksis Knutins, Liu Ziyin, Daniel Geisz, and Joshua Albrecht. This work was conducted with Generally Intelligent, where Jamie Simon is a researcher. This blog post is cross-posted here. We'd be happy to field any questions or comments.
