[ad_1]

Segmentation, the process of identifying image pixels that belong to objects, is at the core of computer vision. This process is used in applications from scientific imaging to photo editing, and technical experts must possess both highly skilled capabilities and access to AI infrastructure for accurate modeling with large amounts of annotated data.

Meta AI just released a Segment Anything project? Which ?image segmentation dataset and model with Segment Anything Model (SAM) and SA-1B mask dataset?—?the largest segmentation dataset supports further research in computer vision foundation models. They have made the SA-1B available for research use, and SAM is licensed under the Apache 2.0 Open License so anyone can try SAM with your images using this. demo!

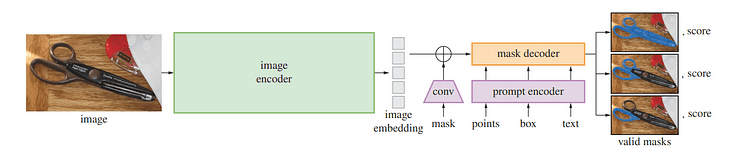

Segment Anything Model / Image by Meta AI

Previously, segmentation problems were approached using two classes of approaches:

- Interactive segmentation in which users guide the segmentation task by iterative mask refinement.

- Automatic segmentation allowed selective object categories such as cats or chairs to be segmented automatically, but it required a large number of annotated objects for training (i.e., thousands or even tens of thousands of segmented cat examples) and computational resources and technical expertise to train the segmentation model. Neither approach provides a general, fully automatic solution to segmentation.

Sam Uses both interactive and automatic segmentation in one model. The proposed interface allows for flexible usage, enabling a wide range of segmentation tasks by engineering appropriate prompts (such as clicks, boxes or text).

SAM was developed using an extensive, high-quality dataset containing more than a billion masks collected as part of this project, giving it the ability to generalize to new types of objects and images than those observed during training. As a result, practitioners no longer need to collect segmentation data and fit the model specifically to their use case.

These capabilities allow SAM to generalize across both tasks and domains, something no other image segmentation software has done before.

SAM has powerful capabilities that make the segmentation task more efficient:

- Variety of input requests: Indicates that direct segmentation allows users to easily perform various segmentation tasks without additional training requirements. You can apply segmentation using interactive points and boxes, automatically segment everything in the image and create multiple valid masks for ambiguous queries. In the figure below, we can see that segmentation is done using the input text query for certain objects.

Limiting a field using a text query.

- Integration with other systems: SAM can receive input requests from other systems, such as capturing the user’s gaze from an AR/VR headset and selecting objects in the future.

- Expandable output: Output masks can be input functions for other AI systems. For example, object masks can be tracked in videos, incorporated into image editing applications, lifted into 3D space, or even used creatively, such as collating

- zero generalization: SAM has developed an understanding of objects that allows it to quickly adapt to strangers without additional training.

- Generation of many masks: SAM can generate multiple valid masks when faced with segmented object ambiguity, which provides significant assistance in real-world segmentation solutions.

- Real-time mask generation: SAM can generate a segmentation mask for any query in real-time after precomputing the image embedding, allowing real-time interaction with the model.

SAM Model Overview / Image by Segment Anything

One of the recent advances in natural language processing and computer vision has been foundational models that allow zero- and few-hit learning by “requesting” new data sets and tasks. Meta AI researchers trained SAM to return a valid segmentation mask for any query, such as foreground/background points, rough boxes/masks or masks, free-form text, or any information that points to a target object within an image.

A valid mask simply means that even when a query can refer to multiple objects (for example: a dot on a shirt can be both itself and someone wearing it), its output must be a reasonable mask for only one object?—? – Model training and solving general downstream segmentation tasks via query.

The researchers noted that the pre-training tasks and interactive data collection imposed specific constraints on the model design. Most importantly, real-time simulation must run CPU-efficiently in a web browser to allow annotators to use SAM interactively for efficient real-time annotation. Although run-time constraints led to trade-offs between quality and run-time constraints, simple designs yielded satisfactory results in practice.

Under the hood of SAM, an image encoder creates a one-time embedding of images, and a lightweight encoder converts any request into an embedding vector in real time. These information sources are then combined with a lightweight decoder that predicts segmentation masks based on image embeddings computed by SAM, so that SAM can produce segments in just 50 milliseconds for any given request in a web browser.

Building and training a model requires access to a vast and diverse pool of data that did not exist at the beginning of training. Today’s segmentation data release is the largest to date. Annotators used SAM to interactively annotate images before updating SAM with this new data?—?This cycle was repeated many times to continuously refine the model and dataset.

SAM makes collecting segmentation masks faster than ever, with only 14 seconds per interactively annotated mask; This process is only twice as slow as annotating bounding boxes, which take only 7 seconds using fast annotation interfaces. Comparable large-scale segmentation data collection efforts include fully manual annotation of COCO’s polygon-based mask, which takes about 10 hours; SAM model-assisted annotation efforts were even faster; Its annotation time per annotated mask was 6.5x faster versus 2x slower in terms of data annotation time than previous model-assisted large-scale data annotation attempts!

Interactive annotation of masks is insufficient to generate the SA-1B dataset; Thus the data engine was created. This data engine contains three “passes”, starting with auxiliary annotation, before moving on to fully automated annotation with auxiliary annotation to increase the variety of masks collected, and finally to fully automated mask generation for the dataset.

The final SA-1B dataset contains more than 1.1 billion segmentation masks collected on more than 11 million licensed and privacy-preserving images, which is 4 times more masks than any existing segmentation database based on human evaluation studies. As evidenced by these human evaluations, these masks exhibit high quality and diversity compared to previous manually annotated datasets with a much smaller sample size.

Images of the SA-1B were obtained through image providers from multiple countries representing different geographic regions and income levels. Although certain geographic regions are underrepresented, SA-1B provides a greater representation due to the larger number of images and overall better coverage in all regions.

The researchers conducted tests to detect any biases in the model based on gender presentation, perceived skin tone, age range of people, as well as the perceived age of the individuals represented, and found that the SAM model performed similarly across groups. They hope this will make the job fairer when applied in real-world use cases.

While SA-1B incorporated the research findings, it can also enable other researchers to prepare foundational models for image segmentation. In addition, these data can become the basis of new datasets with additional annotations.

Meta AI researchers hope that by sharing their research and database, they can accelerate research in image segmentation and image and video understanding. Since this segmentation model can perform this function as part of larger systems.

In this article, we discussed what SAM is and its capabilities and use cases. After that we went through how it works and how it was prepared for the model review. Finally, we conclude the article with future vision and work. If you want to know more about SAM, be sure to read the paper and try the demo version.

Yusef Rafaat is a computer vision researcher and data scientist. His research focuses on the development of real-time computer vision algorithms for healthcare applications. He has also worked as a data scientist for over 3 years in marketing, finance and healthcare.

[ad_2]

Source link